READING SUBTLY

This was the domain of my Blogger site from 2009 to 2018, when I moved to this domain and started The Storytelling Ape. The search option should help you find any of the old posts you're looking for.

Too Psyched for Sherlock: A Review of Maria Konnikova’s “Mastermind: How to Think like Sherlock Holmes”—with Some Thoughts on Science Education

Maria Konnikova’s book “Mastermind: How to Think Like Sherlock Holmes” got me really excited because, whenever psychology comes up in discussions of literature, it’s usually the pseudoscience of Sigmund Freud. Konnikova, whose blog went a long way toward remedying that tragedy, wanted to offer up an alternative approach. But though the book shows great promise, it’s ultimately disappointing.

Whenever he gets really drunk, my brother has the peculiar habit of reciting the plot of one or another of his favorite shows or books. His friends and I like to tease him about it—“Watch out, Dan’s drunk, nobody mention The Wire!”—and the quirk can certainly be annoying, especially if you’ve yet to experience the story first-hand. But I have to admit, given how blotto he usually is when he first sets out on one of his grand retellings, his ability to recall intricate plotlines right down to their minutest shifts and turns is extraordinary. One recent night, during a timeout in an epic shellacking of Notre Dame’s football team, he took up the tale of Django Unchained, which incidentally I’d sat next to him watching just the week before. Tuning him out, I let my thoughts shift to a post I’d read on The New Yorker’s cinema blog The Front Row.

In “The Riddle of Tarantino,” film critic Richard Brody analyzes the director-screenwriter’s latest work in an attempt to tease out the secrets behind the popular appeal of his creations and to derive insights into the inner workings of his mind. The post is agonizingly (though also at points, I must admit, exquisitely) overwritten, almost a parody of the grandiose type of writing one expects to find within the pages of the august weekly. Bemused by the lavish application of psychoanalytic jargon, I finished the essay pitying Brody for, in all his writerly panache, having nothing of real substance to say about the movie or the mind behind it. I wondered whether he knows that the scientific consensus on Freud places his influence less in the line of, say, a Darwin or an Einstein than in that of an L. Ron Hubbard.

What Brody and my brother have in common is that they were both moved enough by their cinematic experience to feel an urge to share their enthusiasm, complicated though that enthusiasm may have been. Yet they both ended up doing the story a disservice, succeeding less in celebrating the work than in blunting its impact. Listening to my brother’s rehearsal of the plot with Brody’s essay in mind, I wondered what better field there could be than psychology for affording enthusiasts discussion-worthy insights to help them move beyond simple plot references. How tragic, then, that the only versions of psychology on offer in educational institutions catering to those who would be custodians of art, whether in academia or on the mastheads of magazines like The New Yorker, are those in thrall to Freud’s cultish legacy.

There’s just something irresistibly seductive about the promise of a scientific paradigm that allows us to know more about another person than he knows about himself. In this spirit of privileged knowingness, Brody faults Django for its lack of moral complexity before going on to make a silly accusation. Watching the movie, you know who the good guys are, who the bad guys are, and who you want to see prevail in the inevitably epic climax. “And yet,” Brody writes,

the cinematic unconscious shines through in moments where Tarantino just can’t help letting loose his own pleasure in filming pain. In such moments, he never seems to be forcing himself to look or to film, but, rather, forcing himself not to keep going. He’s not troubled by representation but by a visual superego that restrains it. The catharsis he provides in the final conflagration is that of purging the world of miscreants; it’s also a refining fire that blasts away suspicion of any peeping pleasure at misdeeds and fuses aesthetic, moral, and political exultation in a single apotheosis.

The strained stateliness of the prose provides a ready distraction from the stark implausibility of the assessment. Applying Occam’s Razor rather than Freud’s ideology, at once insanely elaborate and absurdly reductionist, we might guess that what prompted Tarantino to let the camera linger uncomfortably long on the violent misdeeds of the black hats is that he knew we in the audience would be anticipating that “final conflagration.”

The more outrageous the offense, the more pleasurable the anticipation of comeuppance—but the experimental findings that support this view aren’t covered in film or literary criticism curricula, mired as they are in century-old pseudoscience.

I’ve been eagerly awaiting the day when scientific psychology supplants psychoanalysis (as well as other equally, if not more, absurd ideologies) in academic and popular literary discussions. Coming across the blog Literally Psyched on Scientific American’s website about a year ago gave me a great sense of hope. The tagline, “Conceived in literature, tested in psychology,” as well as the credibility conferred by the host site, promised that the most fitting approach to exploring the resonance and beauty of stories might be undergoing a long overdue renaissance, liberated at last from the dominion of crackpot theorists. So when the author, Maria Konnikova, a doctoral candidate at Columbia, released her first book, I made a point to have Amazon deliver it as early as possible.

Mastermind: How to Think Like Sherlock Holmes does indeed follow the conceived-in-literature-tested-in-psychology formula, taking the principles of sound reasoning expounded by what may be the most recognizable fictional character in history and attempting to show how modern psychology proves their soundness. In what she calls a “Prelude” to her book, Konnikova explains that she’s been a Holmes fan since her father read Conan Doyle’s stories to her and her siblings when they were children.

The one demonstration of the detective’s abilities that stuck with Konnikova the most comes when he explains to his companion and chronicler Dr. Watson the difference between seeing and observing, using as an example the number of stairs leading up to their famous flat at 221B Baker Street. Watson, naturally, has no idea how many stairs there are because he isn’t in the habit of observing. Holmes, preternaturally, knows there are seventeen steps. Ever since this vivid illustration made her aware of Watson’s cognitive limitations, and of her own (it had a similar effect on me when I first read “A Scandal in Bohemia” as a teenager), Konnikova has been trying to find the secret to becoming a Holmesian observer as opposed to a mere Watsonian seer. Already in these earliest pages, we encounter some of the principal shortcomings of the strategy behind the book. Konnikova wastes no time on the question of whether a mindset oriented toward things like the number of stairs in your building has any actual advantages, with regard to solving crimes or to anything else, but rather assumes old Sherlock is saying something instructive and profound.

Mastermind is, for the most part, an entertaining read. Its worst fault in the realm of simple page-by-page enjoyment is that Konnikova often belabors points that upon reflection expose themselves as mere platitudes. The overall theme is the importance of mindfulness: a worthy message, to be sure, in this age of rampant multitasking. But readers get more endorsement than practical instruction. You can only be exhorted to pay attention to what you’re doing so many times before you stop paying attention to the exhortations. The book’s problems in both the literary and psychological domains, however, are much more serious. I came to the book hoping it would hold some promise for opening the way to more scientific literary discussions by offering at least a glimpse of what they might look like, but while reading I came to realize there’s yet another obstacle to any substantive analysis of stories. Call it the TED effect. For anything to be read today, or for anything to get published for that matter, it has to promise to uplift readers, reveal to them some secret about how to improve their lives, help them celebrate the horizonless expanse of human potential.

Naturally enough, with the cacophony of competing information outlets, we all focus on the ones most likely to offer us something personally useful. Though self-improvement is a worthy endeavor, the overlooked corollary to this trend is that the worthiness intrinsic to enterprises and ideas is overshadowed and diminished. People ask what’s in literature for them, or what science can do for them, instead of considering literature and science valuable in their own right, and instead of thinking, heaven forbid, that we may have a duty to them as institutions serving as essential parts of the foundation of civilized society.

In trying to conceive of a book that would operate as a vehicle for her two passions, psychology and Sherlock Holmes, while at the same time catering to readers’ appetite for life-enhancement strategies and spiritual uplift, Konnikova has produced a work in the grip of a bewildering and self-undermining identity crisis. The organizing conceit of Mastermind is that, just as Sherlock explains to Watson in the second chapter of A Study in Scarlet, the brain is like an attic. For Konnikova, this means the mind is in constant danger of becoming cluttered and disorganized through carelessness and neglect. That this interpretation wasn’t what Conan Doyle had in mind when he put the words into Sherlock’s mouth—and that the meaning he actually had in mind has proven to be completely wrong—doesn’t stop her from making her version of the idea the centerpiece of her argument. “We can,” she writes,

learn to master many aspects of our attic’s structure, throwing out junk that got in by mistake (as Holmes promises to forget Copernicus at the earliest opportunity), prioritizing those things we want to and pushing back those that we don’t, learning how to take the contours of our unique attic into account so that they don’t unduly influence us as they otherwise might. (27)

This all sounds great—a little too great—from a self-improvement perspective, but the attic metaphor is Sherlock’s explanation for why he doesn’t know the earth revolves around the sun and not the other way around. He states quite explicitly that he believes the important point of similarity between attics and brains is their limited capacity. “Depend upon it,” he insists, “there comes a time when for every addition of knowledge you forget something that you knew before.” Note here his topic is knowledge, not attention.

It is possible that a human mind could reach and exceed its storage capacity, but we usually avoid that eventuality because memories that are seldom referenced are forgotten. Learning new facts may of course exhaust our resources of time and attention. But the usual effect of acquiring knowledge is quite the opposite of what Sherlock suggests. In the early 1990s, a research team led by Patricia Alexander demonstrated that having background knowledge in a subject area actually increased participants’ interest in and recall for details in an unfamiliar text. A related and even better-known finding is that chess experts have much better recall for the positions of pieces on a board than novices do. However, Sherlock was worried about information outside of his area of expertise. Might he have a point there?

The problem is that Sherlock’s vocation demands a great deal of creativity, and it’s never certain at the outset of a case what type of knowledge may be useful in solving it. In the story “The Lion’s Mane,” he relies on obscure information about a rare species of jellyfish to wrap up the mystery. Konnikova cites this as an example of “The Importance of Curiosity and Play.” She goes on to quote Sherlock’s endorsement of curiosity in The Valley of Fear: “Breadth of view, my dear Mr. Mac, is one of the essentials of our profession. The interplay of ideas and the oblique uses of knowledge are often of extraordinary interest” (151). How does she account for the discrepancy? Could Conan Doyle’s conception of the character have undergone some sort of evolution? Alas, Konnikova isn’t interested in questions like that. “As with most things,” she writes about the earlier reference to the attic theory, “it is safe to assume that Holmes was exaggerating for effect” (150). I’m not sure what other instances she may have in mind; it seems to me that the character seldom exaggerates for effect. In any case, he was certainly not exaggerating his ignorance of Copernican theory in the earlier story.

If Konnikova were simply privileging the science at the expense of the literature, the measure of Mastermind’s success would be in how clearly the psychological theories and findings are laid out. Unfortunately, her attempt to stitch science together with pronouncements from the great detective often leads to confusing tangles of ideas. Following her formula, she prefaces one of the few example exercises from cognitive research provided in the book with a quote from “The Crooked Man.” After outlining the main points of the case, she writes,

How to make sense of these multiple elements? “Having gathered these facts, Watson,” Holmes tells the doctor, “I smoked several pipes over them, trying to separate those which were crucial from others which were merely incidental.” And that, in one sentence, is the first step toward successful deduction: the separation of those factors that are crucial to your judgment from those that are just incidental, to make sure that only the truly central elements affect your decision. (169)

So far she hasn’t gone beyond the obvious. But she does go on to cite a truly remarkable finding that emerged from research by Amos Tversky and Daniel Kahneman in the early 1980s. People who read a description of a man named Bill suggesting he lacks imagination tended to judge it less likely that Bill plays jazz for a hobby than that he is an accountant who plays jazz for a hobby, even though the two points of information in that second description make it inherently less likely than the one point of information in the first. The same result came when people were asked whether it was more likely that a woman named Linda was a bank teller or both a bank teller and an active feminist. People judged the two-item option more likely. Now, is this experimental finding an example of how people fail to sift crucial from incidental facts?

The findings of this study are now used as evidence of a general cognitive tendency known as the conjunction fallacy. In his book Thinking, Fast and Slow, Kahneman, who discusses a student named Tom W rather than Bill, explains how more detailed descriptions can seem more likely than shorter ones, despite the actual probabilities. He writes,

The judgments of probability that our respondents offered, both in the Tom W and Linda problems, corresponded precisely to judgments of representativeness (similarity to stereotypes). Representativeness belongs to a cluster of closely related basic assessments that are likely to be generated together. The most representative outcomes combine with the personality description to produce the most coherent stories. The most coherent stories are not necessarily the most probable, but they are plausible, and the notions of coherence, plausibility, and probability are easily confused by the unwary. (159)

So people are confused because the less probable version is actually easier to imagine. But here’s how Konnikova tries to explain the point by weaving it together with Sherlock’s ideas:

Holmes puts it this way: “The difficulty is to detach the framework of fact—of absolute undeniable fact—from the embellishments of theorists and reporters. Then, having established ourselves upon this sound basis, it is our duty to see what inferences may be drawn and what are the special points upon which the whole mystery turns.” In other words, in sorting through the morass of Bill and Linda, we would have done well to set clearly in our minds what were the actual facts, and what were the embellishments or stories in our minds. (173)

But Sherlock is not referring to our minds’ tendency to mistake coherence for probability, the tendency that has us seeing more detailed and hence less probable stories as more likely. How could he have been? Instead, he’s talking about the importance of independently assessing the facts instead of passively accepting the assessments of others. Konnikova is fudging, and in doing so she’s shortchanging the story and obfuscating the science.

As the subtitle implies, though, Mastermind is about how to think; it is intended as a self-improvement guide. The book should therefore be judged based on the likelihood that readers will come away with a greater ability to recognize and avoid cognitive biases, as well as the ability to sustain the conviction to stay motivated and remain alert. Konnikova emphasizes throughout that becoming a better thinker is a matter of determinedly forming better habits of thought. And she helpfully provides countless illustrative examples from the Holmes canon, though some of these precepts and examples may not be as apt as she’d like. You must have clear goals, she stresses, to help you focus your attention. But the overall purpose of her book provides a great example of a vague and unrealistic end-point. Think better? In what domain? She covers examples from countless areas, from buying cars and phones to sizing up strangers we meet at a party. Sherlock, of course, is a detective, so he focuses his attention on solving crimes. As Konnikova dutifully points out, in domains other than his specialty, he’s not such a mastermind.

Mastermind works best as a fun introduction to modern psychology. But it has several major shortcomings in that domain, and these same shortcomings diminish the likelihood that reading the book will lead to any lasting changes in thought habits. Concepts are covered too quickly, organized too haphazardly, and no conceptual scaffold is provided to help readers weigh or remember the principles in context. Konnikova’s strategy is to take a passage from Conan Doyle’s stories that seems to bear on noteworthy findings in modern research, discuss that research with sprinkled references back to the stories, and wrap up with a didactic and sententious paragraph or two. Usually, the discussion begins with one of Watson’s errors, moves on to research showing we all tend to make similar errors, and then ends by admonishing us not to be like Watson. Following Kahneman’s division of cognition into two systems (one fast and intuitive, the other slower and demanding of effort), Konnikova urges us to get out of our “System Watson” and rely instead on our “System Holmes.” “But how do we do this in practice?” she asks near the end of the book,

How do we go beyond theoretically understanding this need for balance and open-mindedness and applying it practically, in the moment, in situations where we might not have as much time to contemplate our judgments as we do in the leisure of our reading?

The answer she provides: “It all goes back to the very beginning: the habitual mindset that we cultivate, the structure that we try to maintain for our brain attic no matter what” (240). Unfortunately, nowhere in her discussion of built-in biases and the correlates of creativity does she offer any step-by-step instruction on how to acquire new habits. Konnikova is running us around in circles to hide the fact that her book makes an empty promise.

Tellingly, Kahneman, whose work on biases Konnikova cites on several occasions, is much more pessimistic about our prospects for achieving Holmesian thought habits. In the introduction to Thinking, Fast and Slow, he says his goal is merely to provide terms and labels for the regular pitfalls of thinking to facilitate more precise gossiping. He writes,

Why be concerned with gossip? Because it is much easier, as well as far more enjoyable, to identify and label the mistakes of others than to recognize our own. Questioning what we believe and want is difficult at the best of times, and especially difficult when we most need to do it, but we can benefit from the informed opinions of others. Many of us spontaneously anticipate how friends and colleagues will evaluate our choices; the quality and content of these anticipated judgments therefore matters. The expectation of intelligent gossip is a powerful motive for serious self-criticism, more powerful than New Year resolutions to improve one’s decision making at work and home. (3)

The worshipful attitude toward Sherlock in Mastermind is designed to pander to our vanity, and so the suggestion that we need to rely on others to help us think is too mature to appear in its pages. The closest Konnikova comes to allowing for the importance of input and criticism from other people is when she suggests that Watson is an indispensable facilitator of Sherlock’s process because he “serves as a constant reminder of what errors are possible” (195), and because in walking him through his reasoning Sherlock is forced to be more mindful. “It may be that you are not yourself luminous,” Konnikova quotes from The Hound of the Baskervilles, “but you are a conductor of light. Some people without possessing genius have a remarkable power of stimulating it. I confess, my dear fellow, that I am very much in your debt” (196).

That quote shows one of the limits of Sherlock’s mindfulness that Konnikova never bothers to address. At times throughout Mastermind, it’s easy to forget that we probably wouldn’t want to live the way Sherlock is described as living. Want to be a great detective? Abandon your spouse and your kids, move into a cheap flat, work full-time reviewing case histories of past crimes, inject some cocaine, shoot holes in the wall of your flat where you’ve drawn a smiley face, smoke a pipe until the air is unbreathable, and treat everyone, including your best (only?) friend, with casual contempt. Conan Doyle made sure his character casts a shadow. The ideal character Konnikova holds up, with all his determined mindfulness, often bears more resemblance to Kwai Chang Caine from Kung Fu. This isn’t to say that Sherlock isn’t morally complex: readers love him because he’s so clearly a good guy, as selfish and eccentric as he may be. Konnikova cites an instance in which he holds off on letting the police know who committed a crime. She quotes:

Once that warrant was made out, nothing on earth would save him. Once or twice in my career I feel that I have done more real harm by my discovery of the criminal than ever he had done by his crime. I have learned caution now, and I had rather play tricks with the law of England than with my own conscience. Let us know more before we act.

But Konnikova isn’t interested in morality, complex or otherwise, no matter how central moral intuitions are to our enjoyment of fiction. The lesson she draws from this passage shows her at her most sententious and platitudinous:

You don’t mindlessly follow the same preplanned set of actions that you had determined early on. Circumstances change, and with them so does the approach. You have to think before you leap to act, or judge someone, as the case may be. Everyone makes mistakes, but some may not be mistakes as such, when taken in the context of the time and the situation. (243)

Hard to disagree, isn’t it?

To be fair, Konnikova does mention some of Sherlock’s peccadilloes in passing. And she includes a penultimate chapter titled “We’re Only Human,” in which she tells the story of how Conan Doyle was duped by a couple of young girls into believing they had photographed some real fairies. She doesn’t, however, take the opportunity afforded by this episode in the author’s life to explore the relationship between the man and his creation. She effectively says he got tricked because he didn’t do what he knew how to do, it can happen to any of us, so be careful you don’t let it happen to you. Aren’t you glad that’s cleared up? She goes on to end the chapter with an incongruous lesson about how you should think like a hunter. Maybe we should, but how exactly, and when, and at what expense, we’re never told.

Konnikova clearly has a great deal of genuine enthusiasm for both literature and science, and despite my disappointment with her first book I plan to keep following her blog. I’m even looking forward to her next book, confident she’ll learn from the negative reviews she’s bound to get on this one. Her tragic blunder was to eschew nuanced examinations of how stories work, how people relate to characters, or how authors create them in favor of a shallow and one-dimensional attempt at suggesting that a century-old fictional character somehow divined groundbreaking research findings from the end of the twentieth and beginning of the twenty-first centuries. It calls to mind an exchange you can watch on YouTube between Neil deGrasse Tyson and Richard Dawkins. Tyson, after hearing Dawkins speak in the way he’s known to, tries to explain why many scientists feel he’s not making the most of his opportunities to reach out to the public.

You’re professor of the public understanding of science, not the professor of delivering truth to the public. And these are two different exercises. One of them is putting the truth out there and they either buy your book or they don’t. That’s not being an educator; that’s just putting it out there. Being an educator is not only getting the truth right; there’s got to be an act of persuasion in there as well. Persuasion isn’t “Here’s the facts—you’re either an idiot or you’re not.” It’s “Here are the facts—and here is a sensitivity to your state of mind.” And it’s the facts and the sensitivity when convolved together that creates impact. And I worry that your methods, and how articulately barbed you can be, ends up being simply ineffective when you have much more power of influence than is currently reflected in your output.

Dawkins begins his response with an anecdote that shows that he’s not the worst offender when it comes to simple and direct presentations of the facts.

A former and highly successful editor of New Scientist Magazine, who actually built up New Scientist to great new heights, was asked “What is your philosophy at New Scientist?” And he said, “Our philosophy at New Scientist is this: science is interesting, and if you don’t agree you can fuck off.”

I know the issue is a complicated one, but I can’t help thinking Tyson-style persuasion too often has the opposite of its intended impact, conveying as it does the implicit message that science has to somehow be sold to the masses, that it isn’t intrinsically interesting. At any rate, I wish that Konnikova hadn’t dressed up her book with false promises and what she thought would be cool cross-references. Sherlock Holmes is interesting. Psychology is interesting. If you don’t agree, you can fuck off.

Also read

FROM DARWIN TO DR. SEUSS: DOUBLING DOWN ON THE DUMBEST APPROACH TO COMBATTING RACISM

And

THE STORYTELLING ANIMAL: A LIGHT READ WITH WEIGHTY IMPLICATIONS

And

LAB FLIES: JOSHUA GREENE’S MORAL TRIBES AND THE CONTAMINATION OF WALTER WHITE

Also a propos is

Sympathizing with Psychos: Why We Want to See Alex Escape His Fate as A Clockwork Orange

Especially in this age where everything, from novels to social media profiles, is scrutinized for political wrongthink, it’s important to ask how so many people can enjoy stories with truly reprehensible protagonists. Anthony Burgess’s “A Clockwork Orange” provides a perfect test case. How can readers possibly sympathize with Alex?

Phil Connors, the narcissistic weatherman played by Bill Murray in Groundhog Day, is, in the words of Larry, the cameraman played by Chris Elliott, a “prima donna,” at least at the beginning of the movie. He’s selfish, uncharitable, and condescending. As the plot progresses, however, Phil undergoes what is probably the most plausible transformation in all of cinema—having witnessed what he’s gone through over the course of the movie, we’re more than willing to grant the possibility that even the most narcissistic of people might be redeemed through such an ordeal. The odd thing, though, is that when you watch Groundhog Day you don’t exactly hate Phil at the beginning of the movie. Somehow, even as we take note of his most off-putting characteristics, we’re never completely put off. As horrible as he is, he’s not really even unpleasant. The pleasure of watching the movie must to some degree stem from our desire to see Phil redeemed. We want him to learn his lesson so we don’t have to condemn him or write him off.

In a recent article for the New Yorker, Jonathan Franzen explores what he calls “the problem of sympathy” by considering his own responses to the novels of Edith Wharton, who herself strikes him as difficult to sympathize with. Lily Bart, the protagonist of The House of Mirth, is similar to Wharton in many respects, the main difference being that Lily is beautiful (and of course Franzen was immediately accused of misogyny for pointing this out). Of Lily, Franzen writes,

She is, basically, the worst sort of party girl, and Wharton, in much the same way that she didn’t even try to be soft or charming in her personal life, eschews the standard novelistic tricks for warming or softening Lily’s image—the book is devoid of pet-the-dog moments. So why is it so hard to stop reading Lily’s story? (63)

Franzen weighs several hypotheses: her beauty, her freedom to act on impulses we would never act on, her financial woes, her aging. But ultimately he settles on the conclusion that all of these factors are incidental.

What determines whether we sympathize with a fictional character, according to Franzen, is the strength and immediacy of his or her desire. What propels us through the story then is our curiosity about whether or not the character will succeed in satisfying that desire. He explains,

One of the great perplexities of fiction—and the quality that makes the novel the quintessentially liberal art form—is that we experience sympathy so readily for characters we wouldn’t like in real life. Becky Sharp may be a soulless social climber, Tom Ripley may be a sociopath, the Jackal may want to assassinate the French President, Mickey Sabbath may be a disgustingly self-involved old goat, and Raskolnikov may want to get away with murder, but I find myself rooting for each of them. This is sometimes, no doubt, a function of the lure of the forbidden, the guilty pleasure of imagining what it would be like to be unburdened by scruples. In every case, though, the alchemical agent by which fiction transmutes my secret envy or my ordinary dislike of “bad” people into sympathy is desire. Apparently, all a novelist has to do is give a character a powerful desire (to rise socially, to get away with murder) and I, as a reader, become helpless not to make that desire my own. (63)

While I think Franzen here highlights a crucial point about the intersection between character and plot, namely that it is easier to assess how well characters fare at the story’s end if we know precisely what they want (and also what they dread), it’s clear nonetheless that he’s being flip in his dismissal of possible redeeming qualities. Emily Gould, writing for The Awl, says her response to Lily was quite different from Franzen’s, and expostulates in a parenthetical that “she was so trapped! There were no right choices! How could anyone find watching that ‘delicious!’ I cry every time!”

Focusing on any single character in a story the way Franzen does leaves out important contextual cues about personality. In a story peopled with horrible characters, protagonists need only send out the most modest of cues signaling their altruism or redeemability for readers to begin to want to see them prevail. For Milton’s Satan to be sympathetic, readers have to see God as significantly less so. In Groundhog Day, you have creepy and annoying characters like Larry and Ned Ryerson to make Phil look slightly better. And here is Franzen on the denouement of House of Mirth, describing his response as Lily reflects on the time limit placed on her youthful beauty:

But only at the book’s very end, when Lily finds herself holding another woman’s baby and experiencing a host of unfamiliar emotions, does a more powerful sort of urgency crash into view. The financial potential of her looks is revealed to have been an artificial value, in contrast to their authentic value in the natural scheme of human reproduction. What has been simply a series of private misfortunes for Lily suddenly becomes something larger: the tragedy of a New York City social world whose priorities are so divorced from nature that they kill the emblematically attractive female who ought, by natural right, to thrive. The reader is driven to search for an explanation of the tragedy in Lily’s appallingly deforming social upbringing—the kind of upbringing that Wharton herself felt deformed by—and to pity her for it, as, per Aristotle, a tragic protagonist must be pitied. (63)

As Gould points out, though, Franzen is really late in coming to an appreciation of the tragedy, even though his absorption with Lily’s predicament suggests he feels sympathy for her all along. Launching into a list of all the qualities that supposedly make the character unsympathetic, he writes, “The social height that she’s bent on securing is one that she herself acknowledges is dull and sterile” (62), a signal of ambivalence that readers like Gould take as a hopeful sign that she might eventually be redeemed. In any case, few of the other characters seem willing to acknowledge anything of the sort.

Perhaps the most extreme instance in which a bad character wins the sympathy of readers and viewers by being cast with a character or two who are even worse is that of Alex in Anthony Burgess’s novella A Clockwork Orange and the Stanley Kubrick film based on it. (Patricia Highsmith’s Mr. Ripley is another clear contender.) How could we possibly like Alex? He’s a true sadist who matter-of-factly describes the joyous thrill he gets from committing acts of “ultraviolence” against his victims, and he’s a definite candidate for a clinical diagnosis of antisocial personality disorder.

He’s also probably the best evidence for Franzen’s theory that sympathy is reducible to desire. It should be noted, however, that, in keeping with William Flesch’s theory of narrative interest, A Clockwork Orange is nothing if not a story of punishment. In his book Comeuppance, Flesch suggests that when we become emotionally enmeshed with stories we’re monitoring the characters for evidence of either altruism or selfishness and thereafter following the plot, anxious to see the altruists rewarded and the selfish get their comeuppance. Alex seems to strain the theory, though, because all he seems to want to do is hurt people, and yet audiences tend to be more disturbed than gratified by his drawn-out, torturous punishment. For many, there’s even some relief at the end of the movie and the original American version of the book when Alex makes it through all of his ordeals with his taste for ultraviolence restored.

Many obvious factors mitigate the case against Alex, perhaps foremost among them the whimsical tone of his narration, along with the fictional dialect, which lends the story a dream-like quality that is also brilliantly conveyed in the film. There’s something cartoonish about all the characters who suffer at the hands of Alex and his droogs, and almost all of them return to the story later to exact their revenge. You might even say there’s a Groundhogesque element of repetition in the plot. The audience quickly learns too that all the characters who should be looking out for Alex—he’s only fifteen, we find out after almost eighty pages—are either feckless zombies like his parents, who have been sapped of all vitality by their clockwork occupations, or only see him as a means to furthering their own ambitions. “If you have no consideration for your own horrible self you at least might have some for me, who have sweated over you,” his Post-Corrective Advisor P.R. Deltoid says to him. “A big black mark, I tell you in confidence, for every one we don’t reclaim, a confession of failure for every one of you that ends up in the stripy hole” (42). Even the prison charlie (he’s a chaplain, get it?) who serves as a mouthpiece to deliver Burgess’s message treats him as a means to an end. Alex explains,

The idea was, I knew, that this charlie was after becoming a very great holy chelloveck in the world of Prison Religion, and he wanted a real horrorshow testimonial from the Governor, so he would go and govoreet quietly to the Governor now and then about what dark plots were brewing among the plennies, and he would get a lot of this cal from me. (91)

Alex ends up receiving his worst punishment at the hands of the man against whom he’s committed his worst crime. F. Alexander is the author of the metafictionally titled A Clockwork Orange, a treatise against the repressive government, and in the first part of the story Alex and his droogs, wearing masks, beat him mercilessly before forcing him to watch them gang rape his wife, who ends up dying from wounds she sustains in the attack. Later, when Alex gets beaten up himself and inadvertently stumbles back to the house that was the scene of the crime, F. Alexander recognizes him only as the guinea pig for a government experiment in brainwashing criminals he’s read about in newspapers. He takes Alex in and helps him, saying, “I think you can be used, poor boy. I think you can help dislodge this overbearing Government” (175). After he recognizes Alex from his nadsat dialect as the ringleader of the gang who killed his wife, he decides the boy will serve as a better propaganda tool if he commits suicide. Locking him in a room and blasting the Beethoven music he once loved but was conditioned in prison to find nauseating to the point of wishing for death, F. Alexander leaves Alex no escape but to jump out of a high window.

The desire for revenge is understandable, but before realizing who it is he’s dealing with, F. Alexander reveals himself to be conniving and manipulative, like almost every other adult Alex knows. When Alex wakes up in the hospital after his suicide attempt, he discovers that the Minister of the Inferior, as he calls him, has had the conditioning he originally ordered for Alex reversed and is now eager for Alex to tell everyone how F. Alexander and his fellow conspirators tried to kill him. Alex is nothing but a pawn to any of them. That’s why it’s possible to be relieved when his capacity for violent behavior has been restored.

Of course, the real villain of A Clockwork Orange is the Ludovico Technique, the treatment used to cure Alex of his violent impulses. Strapped into a chair with his head locked in place and his glazzies braced open, Alex is forced to watch recorded scenes of torture, murder, violence, and rape, the types of things he used to enjoy. Only now he’s been given a shot that makes him feel so horrible he wants to snuff it (kill himself), and over the course of the treatment sessions he becomes conditioned to associate his precious ultraviolence with this dreadful feeling. Next to the desecration of a man’s soul—the mechanistic control obviating his free will—the antisocial depredations of a young delinquent are somewhat less horrifying. As the charlie says to Alex, addressing him by his prison ID number,

It may not be nice to be good, little 6655321. It may be horrible to be good. And when I say that to you I realize how self-contradictory that sounds. I know I shall have many sleepless nights about this. What does God want? Does God want goodness or the choice of goodness? Is a man who chooses the bad perhaps in some way better than a man who has the good imposed upon him? Deep and hard questions, little 6655321. (107)

At the same time, though, one of the consequences of the treatment is that Alex becomes not just incapable of preying on others but also of defending himself. Immediately upon his release from prison, he finds himself at the mercy of everyone he’s wronged and everyone who feels justified in abusing or exploiting him owing to his past crimes. Before realizing who Alex is, F. Alexander says to him,

You’ve sinned, I suppose, but your punishment has been out of all proportion. They have turned you into something other than a human being. You have no power of choice any longer. You’re committed to socially acceptable acts, a little machine capable only of good. (175)

To tally the mitigating factors: Alex is young (though the actor in the movie was twenty-eight), he’s surrounded by other bizarre and unlikable characters, and he undergoes dehumanizing torture. But does this really make up for his participating in gang rape and murder? Personally, as strange and unsavory as F. Alexander seems, I have to say I can’t fault him in the least for taking revenge on Alex. As someone who believes all behaviors are ultimately determined by countless factors outside the individual’s control, from genes to education to social norms, I don’t have that much of a problem with the Ludovico Technique either. Psychopathy is a primarily genetic condition that makes people incapable of experiencing the moral emotions that would otherwise prevent them from harming others. If aversion therapy worked to endow psychopaths with negative emotions similar to those the rest of us feel in response to Alex’s brand of ultraviolence, then it doesn’t seem like any more of a desecration than, say, a brain operation to remove a tumor with deleterious effects on moral reasoning. True, the prospect of a corrupt government administering the treatment is unsettling, but this kid was going around beating, raping, and killing people.

And yet, I also have to admit (confess?), my own response to Alex, even at the height of his delinquency, before his capture and punishment, was to like him and root for him—this despite the fact that, contra Franzen, I couldn’t really point to any one thing he desires more than anything else.

For those of us who sympathize with Alex, every instance in which he does something unconscionable induces real discomfort, like when he takes two young ptitsas back to his room after revealing they “couldn’t have been more than ten” (47). (Earlier, he says the girl Billyboy’s gang is “getting ready to perform on” is “not more than ten” [18]. Is he serious?) We don’t like him, in other words, because he does bad things but in spite of it. At some point near the beginning of the story, Alex must give some convincing indications that by the end he will have learned the error of his ways. He must provide readers with some evidence that he is at least capable of learning to empathize with other people’s suffering and willing to behave in such a way as to avoid it, so when we see him doing something horrible we view it as an anxiety-inducing setback rather than a deal-breaking harbinger of his true life trajectory. But what is it exactly that makes us believe this psychopath is redeemable?

Phil Connors in Groundhog Day has one obvious saving grace. When viewers are first introduced to him, he’s doing his weather report—and he has a uniquely funny way of doing it. “Uh-oh, look out. It’s one of these big blue things!” he jokes when the graphic of a storm front appears on the screen. “Out in California they're going to have some warm weather tomorrow, gang wars, and some very overpriced real estate,” he says drolly. You could argue he’s only being funny in an attempt to further his career, but he continues trying to make people laugh, usually at the expense of weird or annoying characters, even when the cameras are off (not those cameras). Successful humor requires some degree of social acuity, and the effort that goes into it suggests at least a modicum of generosity. You could say, in effect, Phil goes out of his way to give the other characters, and us, a few laughs. Alex, likewise, offers us a laugh before the end of the first page, as he describes how the Korova Milkbar, where he and his droogs hang out, doesn’t have a liquor license but can sell moloko with drugs added to it “which would give you a nice quiet horrorshow fifteen minutes admiring Bog And All His Holy Angels And Saints in your left shoe with lights bursting all over your mozg” (3-4). Even as he’s assaulting people, Alex keeps up his witty banter and dazzling repartee. He’s being cruel, but he’s having fun. Moreover, he seems to be inviting us to have fun with him.

Probably the single most important factor behind our desire (and I understand “our” here doesn’t include everyone in the audience) to see Alex redeemed is the fact that he’s being kind enough to tell us his story, to invite us into his life, as it were. This is the magic of first person narration. And like most magic it’s based on a psychological principle describing a mental process most of us go about our lives completely oblivious to. The Jewish psychologist Henri Tajfel was living in France at the beginning of World War II, and he was in a German prisoner-of-war camp for most of its duration. Afterward, he went to college in England, where in the 1960s and 70s he would conduct a series of experiments that are today considered classics in social psychology. Many other scientists at the time were trying to understand how an atrocity like the Holocaust could have happened. One theory was that the worst barbarism was committed by a certain type of individual who had what was called an authoritarian personality. Others, like Muzafer Sherif, pointed to a universal human tendency to form groups and discriminate on their behalf.

Tajfel knew about Sherif’s Robber’s Cave Experiment in which groups of young boys were made to compete with each other in sports and over territory. Under those conditions, the groups of boys quickly became antagonistic toward one another, so much so that the experiment had to be moved into its reconciliation phase earlier than planned to prevent violence. But Tajfel suspected that group rivalries could be sparked even without such an elaborate setup. To test his theory, he developed what is known as the minimal group paradigm, in which test subjects engage in some task or test of their preferences and are subsequently arranged into groups based on the outcome. In the original experiments, none of the participants knew anything about their groupmates aside from the fact that they’d been assigned to the same group. And yet, even when the group assignments were based on nothing but a coin toss, subjects asked how much money other people in the experiment deserved as a reward for their participation suggested much lower dollar amounts for people in rival groups. “Apparently,” Tajfel writes in a 1970 Scientific American article about his experiments, “the mere fact of division into groups is enough to trigger discriminatory behavior” (96).

Once divisions into us and them have been established, considerations of fairness are reserved for members of the ingroup. While the subjects in Tajfel’s tests aren’t displaying fully developed tribal animosity, they do demonstrate that the seeds of tribalism are disturbingly easy to sow. As he explains,

Unfortunately it is only too easy to think of examples in real life where fairness would go out the window, since groupness is often based on criteria much more weighty than either preferring a painter one has never heard of before or resembling someone else in one's way of counting dots. (102)

I’m unaware of any studies on the effects of various styles of narration on perceptions of group membership, but I hypothesize that we can extrapolate the minimal group paradigm into the realm of first-person narrative accounts of violence. The reason some of us like Alex despite his horrendous behavior is that he somehow manages to make us think of him as a member of our tribe—or rather as ourselves as a member of his—while everyone he wrongs belongs to a rival group. Even as he’s telling us about all the horrible things he’s done to other people, he takes time out to introduce us to his friends, describe places like the Korova Milkbar and the Duke of York, even the flat at Municipal Flatblock 18A where he and his parents live. He tells us jokes. He shares with us his enthusiasm for classical music. Oh yeah, he also addresses us, “Oh my brothers,” beginning seven lines down on the first page and again at intervals throughout the book, making us what anthropologists call his fictive kin.

There’s something altruistic, or at least generous, about telling jokes or stories. Alex really is our humble narrator, as he frequently refers to himself. Beyond that, though, most stories turn on some moral point, so when we encounter a narrator who immediately begins recounting his crimes we can’t help but anticipate the juncture in the story at which he experiences some moral enlightenment. In the twenty-first and last chapter of A Clockwork Orange, Alex does indeed undergo just this sort of transformation. But American publishers, along with Stanley Kubrick, cut this part of the book because it struck them as a somewhat cowardly turning away from the reality of human evil. Burgess defends the original version in an introduction to the 1986 edition,

The twenty-first chapter gives the novel the quality of genuine fiction, an art founded on the principle that human beings change. There is, in fact, not much point in writing a novel unless you can show the possibility of moral transformation, or an increase in wisdom, operating in your chief character or characters. Even trashy bestsellers show people changing. When a fictional work fails to show change, when it merely indicates that human character is set, stony, unregenerable, then you are out of the field of the novel and into that of the fable or the allegory. (xii)

Indeed, it’s probably this sense of the story being somehow unfinished or cut off in the middle that makes the film so disturbing and so nightmarishly memorable. With regard to the novel, readers could be forgiven for wondering what the hell Alex’s motivation was in telling his story in the first place if there was no lesson or no intuitive understanding he thought he could convey with it.

But is the twenty-first chapter believable? Would it have been possible for Alex to transform into a good man? The Nobel Prize-winning psychologist Daniel Kahneman, in his book Thinking, Fast and Slow, shares with his own readers an important lesson from his student days that bears on Alex’s case:

As a graduate student I attended some courses on the art and science of psychotherapy. During one of these lectures our teacher imparted a morsel of clinical wisdom. This is what he told us: “You will from time to time meet a patient who shares a disturbing tale of multiple mistakes in his previous treatment. He has been seen by several clinicians, and all failed him. The patient can lucidly describe how his therapists misunderstood him, but he has quickly perceived that you are different. You share the same feeling, are convinced that you understand him, and will be able to help.” At this point my teacher raised his voice as he said, “Do not even think of taking on this patient! Throw him out of the office! He is most likely a psychopath and you will not be able to help him.” (27-28)

Also read

SABBATH SAYS: PHILIP ROTH AND THE DILEMMAS OF IDEOLOGICAL CASTRATION

And

LET'S PLAY KILL YOUR BROTHER: FICTION AS A MORAL DILEMMA GAME

Stories, Social Proof, & Our Two Selves

Robert Cialdini describes the phenomenon of social proof, whereby we look to how others are responding to something before we form an opinion ourselves. What are the implications of social proof for our assessments of literature? Daniel Kahneman describes two competing “selves,” the experiencing self and the remembering self. Which one should we trust to let us know how we truly feel about a story?

You’ll quickly come up with a justification for denying it, but your response to a story is influenced far more by other people’s responses to it than by your moment-to-moment experience of reading or watching it. The impression that we either enjoy an experience or we don’t, that our enjoyment or disappointment emerges directly from the scenes, sensations, and emotions of the production itself, results from our cognitive blindness to several simultaneously active processes that go into our final verdict. We’re only ever aware of the output of the various algorithms, never the individual functions.

None of us, for instance, directly experiences the operation of what psychologist and marketing expert Robert Cialdini calls social proof, but its effects on us are embarrassingly easy to measure. Even the way we experience pain depends largely on how we perceive others to be experiencing it. Subjects receiving mild shocks not only report them to be more painful when they witness others responding to them more dramatically, but they also show physiological signs of being in greater distress.

Cialdini opens the chapter on social proof in his classic book Influence: Science and Practice by pointing to the bizarre practice of setting television comedies to laugh tracks. Most people you talk to will say canned laughter is annoying—and they’ll emphatically deny the mechanically fake chuckles and guffaws have any impact on how funny the jokes seem to them. The writers behind those jokes, for their part, probably aren’t happy about the implicit suggestion that their audiences need to be prompted to laugh at the proper times. So why do laugh tracks accompany so many shows? “What can it be about canned laughter that is so attractive to television executives?” Cialdini asks.

Why are these shrewd and tested people championing a practice that their potential watchers find disagreeable and their most creative talents find personally insulting? The answer is both simple and intriguing: They know what the research says. (98)

As with all the other “weapons of influence” Cialdini writes about in the book, social proof seems as obvious to people as it is dismissible. “I understand how it’s supposed to work,” we all proclaim, “but you’d have to be pretty stupid to fall for it.” And yet it still works—and it works on pretty much every last one of us. Cialdini goes on to discuss the finding that even suicide rates increase after a highly publicized story of someone killing themselves. The simple, inescapable reality is that when we see someone else doing something, we become much more likely to do it ourselves, whether it be writhing in genuine pain, laughing in genuine hilarity, or finding life genuinely intolerable.

Another factor that complicates our responses to stories is that, unlike momentary shocks or the telling of jokes, they usually last long enough to place substantial demands on working memory. Movies last a couple hours. Novels can take weeks. What this means is that when we try to relate to someone else what we thought of a movie or a book we’re relying on a remembered abstraction as opposed to a real-time recording of how much we enjoyed the experience. In his book Thinking, Fast and Slow, Daniel Kahneman suggests that our memories of experiences can diverge so much from our feelings at any given instant while actually having those experiences that we effectively have two selves: the experiencing self and the remembering self. To illustrate, he offers the example of a man who complains that a scratch at the end of a disc of his favorite symphony ruined the listening experience for him. “But the experience was not actually ruined, only the memory of it,” Kahneman points out. “The experiencing self had had an experience that was almost entirely good, and the bad end could not undo it, because it had already happened” (381). But the distinction usually only becomes apparent when the two selves disagree—and such disagreements usually require some type of objective recording to discover. Kahneman explains,

Confusing experience with the memory of it is a compelling cognitive illusion—and it is the substitution that makes us believe a past experience can be ruined. The experiencing self does not have a voice. The remembering self is sometimes wrong, but it is the one that keeps score and governs what we learn from living, and it is the one that makes decisions. What we learn from the past is to maximize the qualities of our future memories, not necessarily of our future experiences. This is the tyranny of the remembering self. (381)

Kahneman suggests the priority we can’t help but give to the remembering self explains why tourists spend so much time taking pictures. The real objective of a vacation is not to have a pleasurable or fun experience; it’s to return home with good vacation stories.

Kahneman reports the results of a landmark study he designed with Don Redelmeier that compared moment-to-moment pain recordings of men undergoing colonoscopies to global pain assessments given by the patients after the procedure. The outcome demonstrated that the remembering self was remarkably unaffected by the duration of the procedure or the total sum of pain experienced, as gauged by adding up the scores given moment-to-moment during the procedure. Men who actually experienced more pain nevertheless rated the procedure as less painful when the discomfort tapered off gradually as opposed to dropping off precipitously after reaching a peak. The remembering self is reliably guilty of what Kahneman calls “duration neglect,” and it assesses experiences based on a “peak-end rule,” whereby the “global retrospective rating” will be “well predicted by the average level of pain reported at the worst moment of the experience and at its end” (380). Duration neglect and the peak-end rule probably account for the greater risk of addiction for users of certain drugs like heroin or crystal meth, which result in rapid, intense highs and precipitous drop-offs, as opposed to drugs like marijuana, whose effects are longer-lasting but less intense.
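The gap between the two selves can be made concrete with a little arithmetic. The sketch below is a minimal illustration, assuming (as a simplification of Kahneman’s wording) that the retrospective rating is the plain average of the worst moment and the final moment. The two pain profiles and the function names are hypothetical, invented for this example; they are not data from the Redelmeier study.

```python
# A minimal sketch of duration neglect and the peak-end rule.
# The pain scores are hypothetical, invented for illustration only;
# they are not data from Kahneman and Redelmeier's study.

def total_pain(scores):
    """Sum of moment-to-moment ratings: what the experiencing self endures."""
    return sum(scores)

def peak_end(scores):
    """Simplified peak-end prediction of the retrospective rating:
    the average of the worst moment and the final moment.
    Length plays no role at all, mirroring duration neglect."""
    return (max(scores) + scores[-1]) / 2

# Procedure A ends abruptly at its most painful point.
patient_a = [2, 4, 6, 8, 8]
# Procedure B is identical, plus a few extra minutes of tapering discomfort.
patient_b = [2, 4, 6, 8, 8, 5, 3, 1]

for name, scores in [("A", patient_a), ("B", patient_b)]:
    print(f"{name}: total={total_pain(scores)}, peak-end={peak_end(scores)}")
```

Here B involves more total pain (37 versus 28) yet receives the gentler predicted rating (4.5 versus 8.0), which is the pattern described above: adding a mild tail lengthens the ordeal but improves the memory of it.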

We’ve already seen that pain in real time can be influenced by how other people are responding to it, and we can probably extrapolate and assume that the principle applies to pleasurable experiences as well. How does the divergence between experience and memory factor into our response to stories as expressed by our decisions about further reading or viewing, or in things like reviews or personal recommendations? For one thing, we can see that most good stories are structured less like a Jamesian “direct impression of life,” i.e., reports from the experiencing self, and much more like the tamed abstractions Stevenson described in his “Humble Remonstrance” to James. As Kahneman explains,

A story is about significant events and memorable moments, not about time passing. Duration neglect is normal in a story, and the ending often defines its character. The same core features appear in the rules of narratives and in the memories of colonoscopies, vacations, and films. This is how the remembering self works: it composes stories and keeps them for future reference. (387)

Now imagine that you’re watching a movie in a crowded theater. Are you influenced by the responses of your fellow audience members? Are you more likely to laugh if everyone else is laughing, wince if everyone else is wincing, cheer if everyone else is cheering? These are the effects on your experiencing self. What happens, though, in the hours and days and weeks after the movie is over—or after you’re done reading the book? Does your response to the story start to become intertwined with and indistinguishable from the cognitive schema you had in place before ever watching or reading it? Are your impressions influenced by the opinions of critics or friends whose opinions you respect? Do you give a celebrated classic the benefit of the doubt, assuming it has some merit even if you enjoyed it much less than some less celebrated work? Do you read into it virtues whose real source may be external to the story itself? Do you miss virtues that actually are present in less celebrated stories?

Taken to its extreme, this focus on social proof leads to what’s known as social constructivism. In the realm of stories, this would be the idea that there are no objective criteria at all with which to assess merit; it’s all based on popular opinion or the dictates of authorities. Much of the dissatisfaction with the so-called canon is based on this type of thinking. If we collectively decide some work of literature is really good and worth celebrating, the reasoning goes, then it magically becomes really good and worth celebrating. There’s an undeniable kernel of truth to this—and there’s really no reason to object to the idea that one of the things that makes a work of art attention-worthy is that a lot of people are attending to it. Art serves a social function after all; part of the fun comes from sharing the experience and having conversations about it. But I personally can’t credit the absolutist version of social constructivism. I don’t think you’re anything but a literary tourist until you can make a convincing case for why a few classics don’t deserve the distinction—even though I acknowledge that any such case will probably be based largely on the ideas of other people.

The research on the experiencing versus the remembering self also suggests a couple of criteria we can apply to our assessments of stories so that they’re more meaningful to people who haven’t been initiated into the society and culture of highbrow literature. Too often, the classics are dismissed as works only English majors can appreciate. And too often, they're written in a way that justifies that dismissal. One criterion should be based on how well the book satisfies the experiencing self: I propose that a story should be considered good insofar as it induces a state of absorption. You forget yourself and become completely immersed in the plot. Mihaly Csikszentmihalyi calls this state flow and has found that the more time people spend in it the happier and more fulfilled they tend to be. But the total time a reader or viewer spends in a state of flow will likely be neglected if the plot never reaches a peak of intensity, or if it ends on a note of tedium. So the second criterion should be how memorable the story is. Assessments based on either of these criteria are of course inevitably vulnerable to social proof and idiosyncratic factors of the individual audience member (whether I find Swann’s Way tedious or absorbing depends on how much sleep and caffeine I’ve had). And yet knowing what the effects are that make for a good aesthetic experience, in real time and in our memories, can help us avoid the trap of merely academic considerations. And knowing that our opinions will always be somewhat contaminated by outside influences shouldn’t keep us from trying to be objective any more than knowing that surgical theaters can never be perfectly sanitized should keep doctors from insisting they be as well scrubbed and scoured as possible.

Also read:

LET'S PLAY KILL YOUR BROTHER: FICTION AS A MORAL DILEMMA GAME


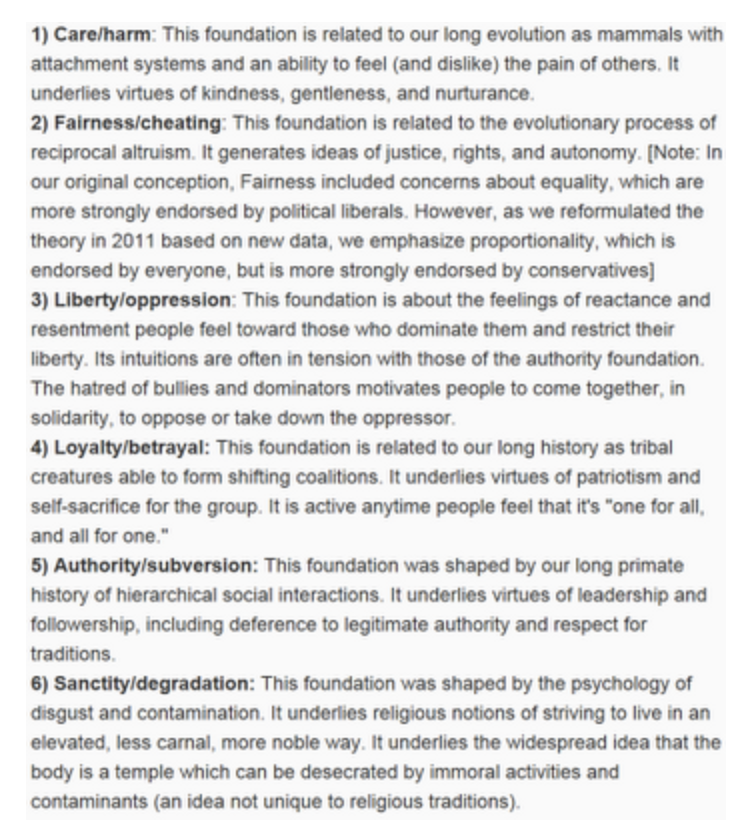

The Enlightened Hypocrisy of Jonathan Haidt's Righteous Mind

Jonathan Haidt extends an olive branch to conservatives by acknowledging their morality has more dimensions than the morality of liberals. But is he mistaking what’s intuitive for what’s right? A critical yet admiring review of The Righteous Mind.

A Review of Jonathan Haidt's new book,

The Righteous Mind: Why Good People are Divided by Politics and Religion

Back in the early 1950s, Muzafer Sherif and his colleagues conducted a now-infamous experiment that validated the central premise of Lord of the Flies. Two groups of 12-year-old boys were brought to a camp called Robbers Cave in southern Oklahoma, where they were observed by researchers as the members got to know each other. Each group, unaware at first of the other’s presence at the camp, spontaneously formed a hierarchy, and each came up with a name for itself: the Eagles and the Rattlers. That was the first stage of the study. In the second stage, the two groups were gradually made aware of each other’s presence, and then they were pitted against each other in several games like baseball and tug-of-war. The goal was to find out if animosity would emerge between the groups. This phase of the study had to be brought to an end after the groups began staging armed raids on each other’s territory, wielding socks they’d filled with rocks. Prepubescent boys, this and several other studies confirm, tend to be highly tribal.

So do conservatives.

This is what University of Virginia psychologist Jonathan Haidt heroically avoids saying explicitly for the entirety of his new 318-page, heavily endnoted The Righteous Mind: Why Good People Are Divided by Politics and Religion. In the first of three parts, he takes on ethicists like John Stuart Mill and Immanuel Kant, along with the so-called New Atheists like Sam Harris and Richard Dawkins, because, as he says in a characteristically self-undermining pronouncement, “Anyone who values truth should stop worshipping reason” (89). Intuition, Haidt insists, is more worthy of focus. In part two, he lays out evidence from his own research showing that all over the world judgments about behaviors rely on a total of six intuitive dimensions, all of which served some ancestral, adaptive function. Conservatives live in “moral matrices” that incorporate all six, while liberal morality rests disproportionately on just three. At times, Haidt intimates that more dimensions are better, but then he explicitly disavows that position. He is, after all, a liberal himself. In part three, he covers some of the most fascinating research to emerge from the field of human evolutionary anthropology over the past decade and a half, concluding that tribalism emerged from group selection and that without it humans never would have become, well, human. Again, the point is that tribal morality—i.e., conservatism—cannot be all bad.

One of Haidt’s goals in writing The Righteous Mind, though, was to improve understanding on each side of the central political divide by exploring, and even encouraging an appreciation for, the moral psychology of those on the rival side. Tribalism can’t be all bad—and yet we need much less of it in the form of partisanship. “My hope,” Haidt writes in the introduction, “is that this book will make conversations about morality, politics, and religion more common, more civil, and more fun, even in mixed company” (xii). Later he identifies the crux of his challenge, “Empathy is an antidote to righteousness, although it’s very difficult to empathize across a moral divide” (49). There are plenty of books by conservative authors which gleefully point out the contradictions and errors in the thinking of naïve liberals, and there are plenty by liberals returning the favor. What Haidt attempts is a willful disregard of his own politics for the sake of transcending the entrenched divisions, even as he’s covering some key evidence that forms the basis of his beliefs. Not surprisingly, he gives the impression at several points throughout the book that he’s either withholding the conclusions he really draws from the research or exercising great discipline in directing his conclusions along paths amenable to his agenda of bringing about greater civility.

Haidt’s focus is on intuition, so he faces the same challenge Daniel Kahneman did in writing Thinking, Fast and Slow: how to convey all these different theories and findings in a book people will enjoy reading from first page to last? Kahneman’s attempt was unsuccessful, but his encyclopedic book is still readable because its topic is so compelling. Haidt’s approach is to discuss the science in the context of his own story of intellectual development. The product reads like a postmodern hero’s journey in which the unreliable narrator returns right back to where he started, but with a heightened awareness of how small his neighborhood really is. It’s a riveting trip down the rabbit hole of self-reflection where the distinction between is and ought gets blurred and erased and reinstated, as do the distinctions between intuition and reason, and even self and other. Since, as Haidt reports, liberals tend to score higher on the personality trait called openness to new ideas and experiences, he seems to have decided on a strategy of uncritically adopting several points of conservative rhetoric—like suggesting liberals are out of touch with most normal people—in order to subtly encourage less open members of his audience to read all the way through. Who, after all, wants to read a book by a liberal scientist pointing out all the ways conservatives go wrong in their thinking?

The Elephant in the Room

Haidt’s first move is to challenge the primacy of thinking over intuiting. If you’ve ever debated someone into a corner, you know simply demolishing the reasons behind a position will pretty much never be enough to change anyone’s mind. Citing psychologist Tom Gilovich, Haidt explains that when we want to believe something, we ask ourselves, “Can I believe it?” We begin a search, “and if we find even a single piece of pseudo-evidence, we can stop thinking. We now have permission to believe. We have justification, in case anyone asks.” But if we don’t like the implications of, say, global warming, or the beneficial outcomes associated with free markets, we ask a different question: “when we don’t want to believe something, we ask ourselves, ‘Must I believe it?’ Then we search for contrary evidence, and if we find a single reason to doubt the claim, we can dismiss it. You only need one key to unlock the handcuffs of must. Psychologists now have file cabinets full of findings on ‘motivated reasoning,’ showing the many tricks people use to reach the conclusions they want to reach” (84).

Haidt’s early research was designed to force people into making weak moral arguments so that he could explore the intuitive foundations of judgments of right and wrong. When presented with stories involving incest, or eating the family dog, which in every case were carefully worded to make it clear no harm would result to anyone—the incest couldn’t result in pregnancy; the dog was already dead—“subjects tried to invent victims” (24). It was clear that they wanted there to be a logical case based on somebody getting hurt so they could justify their intuitive answer that a wrong had been done.

They said things like ‘I know it’s wrong, but I just can’t think of a reason why.’ They seemed morally dumbfounded—rendered speechless by their inability to explain verbally what they knew intuitively. These subjects were reasoning. They were working quite hard reasoning. But it was not reasoning in search of truth; it was reasoning in support of their emotional reactions. (25)

Reading this section, you get the sense that people come to their beliefs about the world and how to behave in it by asking the same three questions they’d ask before deciding on a t-shirt: how does it feel, how much does it cost, and how does it make me look? Quoting political scientist Don Kinder, Haidt writes, “Political opinions function as ‘badges of social membership.’ They’re like the array of bumper stickers people put on their cars showing the political causes, universities, and sports teams they support” (86)—or like the skinny jeans showing everybody how hip you are.

Kahneman uses the metaphor of two systems to explain the workings of the mind. System 1, intuition, does most of the work most of the time. System 2 takes a lot more effort to engage and can never manage to operate independently of intuition. Kahneman therefore proposes educating your friends about the common intuitive mistakes—because you’ll never recognize them yourself. Haidt uses the metaphor of an intuitive elephant and a cerebrating rider. He first used this image for an earlier book on happiness, so the use of the GOP mascot was accidental. But because of the more intuitive nature of conservative beliefs, it’s appropriate. Far from saying that Republicans need to think more, though, Haidt emphasizes the point that rational thought is never really rational and never anything but self-interested. He argues,

the rider acts as the spokesman for the elephant, even though it doesn’t necessarily know what the elephant is really thinking. The rider is skilled at fabricating post hoc explanations for whatever the elephant has just done, and it is good at finding reasons to justify whatever the elephant wants to do next. Once human beings developed language and began to use it to gossip about each other, it became extremely valuable for elephants to carry around on their backs a full-time public relations firm. (46)

The futility of trying to avoid motivated reasoning provides Haidt some justification of his own to engage in what can only be called pandering. He cites cultural psychologists Joe Henrich, Steve Heine, and Ara Norenzayan, who argued in their 2010 paper “The Weirdest People in the World?” that researchers need to do more studies with culturally diverse subjects. Haidt commandeers the acronym WEIRD—western, educated, industrialized, rich, and democratic—and uses it somewhat derisively for most of his book, even though it applies both to him and to his scientific endeavors. Of course, he can’t argue that what’s popular is necessarily better. But he manages to convey that attitude implicitly, even though he can’t really share the attitude himself.

Haidt is at his best when he’s synthesizing research findings into a holistic vision of human moral nature; he’s at his worst, his cringe-inducing worst, when he tries to be polemical. He succumbs to his most embarrassingly hypocritical impulses in what are transparently intended to be concessions to the religious and the conservative. WEIRD people are more apt to deny their intuitive, judgmental impulses—except where harm or oppression are involved—and insist on the fair application of governing principles derived from reasoned analysis. But apparently there’s something wrong with this approach:

Western philosophy has been worshipping reason and distrusting the passions for thousands of years. There’s a direct line running from Plato through Immanuel Kant to Lawrence Kohlberg. I’ll refer to this worshipful attitude throughout this book as the rationalist delusion. I call it a delusion because when a group of people make something sacred, the members of the cult lose the ability to think clearly about it. (28)

This is disingenuous. For one thing, he doesn’t refer to the rationalist delusion throughout the book; it only shows up one other time. Both instances implicate the New Atheists. Haidt coins the term rationalist delusion in response to Dawkins’s The God Delusion. An atheist himself, Haidt is throwing believers a bone. To make this concession, though, he’s forced to seriously muddle his argument. “I’m not saying,” he insists,

we should all stop reasoning and go with our gut feelings. Gut feelings are sometimes better guides than reasoning for making consumer choices and interpersonal judgments, but they are often disastrous as a basis for public policy, science, and law. Rather, what I’m saying is that we must be wary of any individual’s ability to reason. We should see each individual as being limited, like a neuron. (90)

As far as I know, neither Harris nor Dawkins has ever declared himself dictator of reason—nor, for that matter, did Mill or Rawls (Hitchens might have). Haidt, in his concessions, is guilty of rebutting arguments that were never made. He goes on to make a point similar to Kahneman’s:

We should not expect individuals to produce good, open-minded, truth-seeking reasoning, particularly when self-interest or reputational concerns are in play. But if you put individuals together in the right way, such that some individuals can use their reasoning powers to disconfirm the claims of others, and all individuals feel some common bond or shared fate that allows them to interact civilly, you can create a group that ends up producing good reasoning as an emergent property of the social system. (90)

What Haidt probably realizes but isn’t saying is that the environment he’s describing is a lot like scientific institutions in academia. In other words, if you hang out in them, you’ll be WEIRD.

A Taste for Self-Righteousness

The divide over morality can largely be reduced to the differences between the urban educated and the poor not-so-educated. As Haidt says of his research in South America, “I had flown five thousand miles south to search for moral variation when in fact there was more to be found a few blocks west of campus, in the poor neighborhood surrounding my university” (22). One of the major differences he and his research assistants serendipitously discovered was that educated people think it’s normal to discuss the underlying reasons for moral judgments while everyone else in the world—who isn’t WEIRD—thinks it’s odd:

But what I didn’t expect was that these working-class subjects would sometimes find my request for justifications so perplexing. Each time someone said that the people in a story had done something wrong, I asked, “Can you tell me why that was wrong?” When I had interviewed college students on the Penn campus a month earlier, this question brought forth their moral justifications quite smoothly. But a few blocks west, this same question often led to long pauses and disbelieving stares. Those pauses and stares seemed to say,

You mean you don’t know why it’s wrong to do that to a chicken? I have to explain it to you? What planet are you from? (95)

The Penn students “were unique in their unwavering devotion to the ‘harm principle,’” Mill’s dictum that laws are only justified when they prevent harm to citizens. Haidt quotes one of the students as saying, “It’s his chicken, he’s eating it, nobody is getting hurt” (96). (You don’t want to know what he did before cooking it.)