READING SUBTLY

This was the domain of my Blogger site from 2009 to 2018, when I moved to this domain and started The Storytelling Ape. The search option should help you find any of the old posts you're looking for.

Lab Flies: Joshua Greene’s Moral Tribes and the Contamination of Walter White

Joshua Greene’s book “Moral Tribes” posits a dual-system theory of morality, where a quick, intuitive system 1 makes judgments based on deontological considerations (“it’s just wrong”), whereas the slower, more deliberative system 2 takes time to calculate the consequences of any given choice. Audiences can see these two systems on display in the series “Breaking Bad,” as well as in critics’ and audiences’ responses.

Walter White’s Moral Math

In an episode near the end of Breaking Bad’s fourth season, the drug kingpin Gus Fring gives his meth cook Walter White an ultimatum. Walt’s brother-in-law Hank is a DEA agent who has been getting close to discovering the high-tech lab Gus has created for Walt and his partner Jesse, and Walt, despite his best efforts, hasn’t managed to put him off the trail. Gus decides that Walt himself has likewise become too big a liability, and he has found that Jesse can cook almost as well as his mentor. The only problem for Gus is that Jesse, even though he too is fed up with Walt, will refuse to cook if anything happens to his old partner. So Gus has Walt taken at gunpoint to the desert where he tells him to stay away from both the lab and Jesse. Walt, infuriated, goads Gus with the fact that he’s failed to turn Jesse against him completely, to which Gus responds, “For now,” before going on to say,

In the meantime, there’s the matter of your brother-in-law. He is a problem you promised to resolve. You have failed. Now it’s left to me to deal with him. If you try to interfere, this becomes a much simpler matter. I will kill your wife. I will kill your son. I will kill your infant daughter.

In other words, Gus tells Walt to stand by and let Hank be killed or else he will kill his wife and kids. Once he’s released, Walt immediately has his lawyer Saul Goodman place an anonymous call to the DEA to warn them that Hank is in danger. Afterward, Walt plans to pay a man to help his family escape to a new location with new, untraceable identities—but he soon discovers the money he was going to use to pay the man has already been spent (by his wife Skyler). Now it seems all five of them are doomed. This is when things get really interesting.

Walt devises an elaborate plan to convince Jesse to help him kill Gus. Jesse knows that Gus would prefer for Walt to be dead, and both Walt and Gus know that Jesse would go berserk if anyone ever tried to hurt his girlfriend’s nine-year-old son Brock. Walt’s plan is to make it look like Gus is trying to frame him for poisoning Brock with ricin. The idea is that Jesse would suspect Walt of trying to kill Brock as punishment for Jesse betraying him and going to work with Gus. But Walt will convince Jesse that this is really just Gus’s ploy to trick Jesse into doing what he has forbidden Gus to do up till now: kill Walt himself. Once Jesse concludes that it was Gus who poisoned Brock, he will understand that his new boss has to go, and he will accept Walt’s offer to help him perform the deed. Walt will then be able to get Jesse to give him the crucial information he needs about Gus to figure out a way to kill him.

It’s a brilliant plan. The one problem is that it involves poisoning a nine-year-old child. Walt comes up with an ingenious trick which allows him to use a less deadly poison while still making it look like Brock has ingested the ricin, but for the plan to work the boy has to be made deathly ill. So Walt is faced with a dilemma: if he goes through with his plan, he can save Hank, his wife, and his two kids, but to do so he has to deceive his old partner Jesse in just about the most underhanded way imaginable—and he has to make a young boy very sick by poisoning him, with the attendant risk that something will go wrong and the boy, or someone else, or everyone else, will die anyway. The math seems easy: either four people die, or one person gets sick. The option recommended by the math is greatly complicated, however, by the fact that it involves an act of violence against an innocent child.

In the end, Walt chooses to go through with his plan, and it works perfectly. In another ingenious move, though, this time on the part of the show’s writers, Walt’s deception isn’t revealed until after his plan has been successfully implemented, which makes for an unforgettable shock at the end of the season. Unfortunately, this revelation after the fact, at a time when Walt and his family are finally safe, makes it all too easy to forget what made the dilemma so difficult in the first place—and thus makes it all too easy to condemn Walt for resorting to such monstrous means to see his way through.

Fans of Breaking Bad who read about the famous thought-experiment called the footbridge dilemma in Harvard psychologist Joshua Greene’s multidisciplinary and momentously important book Moral Tribes: Emotion, Reason, and the Gap between Us and Them will immediately recognize the conflicting feelings underlying our responses to questions about serving some greater good by committing an act of violence. Here is how Greene describes the dilemma:

A runaway trolley is headed for five railway workmen who will be killed if it proceeds on its present course. You are standing on a footbridge spanning the tracks, in between the oncoming trolley and the five people. Next to you is a railway workman wearing a large backpack. The only way to save the five people is to push this man off the footbridge and onto the tracks below. The man will die as a result, but his body and backpack will stop the trolley from reaching the others. (You can’t jump yourself because you, without a backpack, are not big enough to stop the trolley, and there’s no time to put one on.) Is it morally acceptable to save the five people by pushing this stranger to his death? (113-4)

As was the case for Walter White when he faced his child-poisoning dilemma, the math is easy: you can save five people—strangers in this case—through a single act of violence. One of the fascinating things about common responses to the footbridge dilemma, though, is that the math is all but irrelevant to most of us; no matter how many people we might save, it’s hard for us to see past the murderous deed of pushing the man off the bridge. The answer for a large majority of people faced with this dilemma, even in the case of variations which put the number of people who would be saved much higher than five, is no, pushing the stranger to his death is not morally acceptable.

Another fascinating aspect of our responses is that they change drastically with the modification of a single detail in the hypothetical scenario. In the switch dilemma, a trolley is heading for five people again, but this time you can hit a switch to shift it onto another track where there happens to be a single person who would be killed. Though the math and the underlying logic are the same—you save five people by killing one—something about pushing a person off a bridge strikes us as far worse than pulling a switch. A large majority of people say killing the one person in the switch dilemma is acceptable. To figure out which specific factors account for the different responses, Greene and his colleagues tweak various minor details of the trolley scenario before posing the dilemma to test participants. By now, so many experiments have relied on these scenarios that Greene calls trolley dilemmas the fruit flies of the emerging field known as moral psychology.

The Automatic and Manual Modes of Moral Thinking

One hypothesis for why the footbridge case strikes us as unacceptable is that it involves using a human being as an instrument, a means to an end. So Greene and his fellow trolleyologists devised a variation called the loop dilemma, which still has participants pulling a hypothetical switch, but this time the lone victim on the alternate track must stop the trolley from looping back around onto the original track. In other words, you’re still hitting the switch to save the five people, but you’re also using a human being as a trolley stop. People nonetheless tend to respond to the loop dilemma in much the same way they do the switch dilemma. So there must be some factor other than the prospect of using a person as an instrument that makes the footbridge version so objectionable to us.

Greene’s own theory for why our intuitive responses to these dilemmas are so different begins with what Daniel Kahneman, one of the founders of behavioral economics, labeled the two-system model of the mind. The first system, a sort of autopilot, is the one we operate in most of the time. We only use the second system when doing things that require conscious effort, like multiplying 26 by 47. While system one is quick and intuitive, system two is slow and demanding. Greene proposes as an analogy the automatic and manual settings on a camera. System one is point-and-click; system two, though more flexible, requires several calibrations and adjustments. We usually only engage our manual systems when faced with circumstances that are either completely new or particularly challenging.

According to Greene’s model, our automatic settings have functions that go beyond the rapid processing of information to encompass our basic set of moral emotions, from indignation to gratitude, from guilt to outrage, which motivate us to behave in ways that over evolutionary history have helped our ancestors transcend their selfish impulses to live in cooperative societies. Greene writes,

According to the dual-process theory, there is an automatic setting that sounds the emotional alarm in response to certain kinds of harmful actions, such as the action in the footbridge case. Then there’s manual mode, which by its nature tends to think in terms of costs and benefits. Manual mode looks at all of these cases—switch, footbridge, and loop—and says “Five for one? Sounds like a good deal.” Because manual mode always reaches the same conclusion in these five-for-one cases (“Good deal!”), the trend in judgment for each of these cases is ultimately determined by the automatic setting—that is, by whether the myopic module sounds the alarm. (233)

What makes the dilemmas difficult then is that we experience them in two conflicting ways. Most of us, most of the time, follow the dictates of the automatic setting, which Greene describes as myopic because its speed and efficiency come at the cost of inflexibility and limited scope for extenuating considerations.

The reason our intuitive settings sound an alarm at the thought of pushing a man off a bridge but remain silent about hitting a switch, Greene suggests, is that our ancestors evolved to live in cooperative groups where some means of preventing violence between members had to be in place to avoid dissolution—or outright implosion. One of the dangers of living with a bunch of upright-walking apes who possess the gift of foresight is that any one of them could at any time be plotting revenge for some seemingly minor slight, or conspiring to get you killed so he can move in on your spouse or inherit your belongings. For a group composed of individuals with the capacity to hold grudges and calculate future gains to function cohesively, the members must have in place some mechanism that affords a modicum of assurance that no one will murder them in their sleep. Greene writes,

To keep one’s violent behavior in check, it would help to have some kind of internal monitor, an alarm system that says “Don’t do that!” when one is contemplating an act of violence. Such an action-plan inspector would not necessarily object to all forms of violence. It might shut down, for example, when it’s time to defend oneself or attack one’s enemies. But it would, in general, make individuals very reluctant to physically harm one another, thus protecting individuals from retaliation and, perhaps, supporting cooperation at the group level. My hypothesis is that the myopic module is precisely this action-plan inspecting system, a device for keeping us from being casually violent. (226)

Hitting a switch to transfer a train from one track to another seems acceptable, even though a person ends up being killed, because nothing our ancestors would have recognized as violence is involved.

Many philosophers cite our different responses to the various trolley dilemmas as support for deontological systems of morality (those based on the inherent rightness or wrongness of certain actions), since we intuitively know the choices suggested by a consequentialist approach are immoral. But Greene points out that this argument raises the question of how reliable our intuitions really are. He writes,

I’ve called the footbridge dilemma a moral fruit fly, and that analogy is doubly appropriate because, if I’m right, this dilemma is also a moral pest. It’s a highly contrived situation in which a prototypically violent action is guaranteed (by stipulation) to promote the greater good. The lesson that philosophers have, for the most part, drawn from this dilemma is that it’s sometimes deeply wrong to promote the greater good. However, our understanding of the dual-process moral brain suggests a different lesson: Our moral intuitions are generally sensible, but not infallible. As a result, it’s all but guaranteed that we can dream up examples that exploit the cognitive inflexibility of our moral intuitions. It’s all but guaranteed that we can dream up a hypothetical action that is actually good but that seems terribly, horribly wrong because it pushes our moral buttons. I know of no better candidate for this honor than the footbridge dilemma. (251)

The obverse is that many of the things that seem morally acceptable to us actually do cause harm to people. Greene cites the example of a man who lets a child drown because he doesn’t want to ruin his expensive shoes, which most people agree is monstrous, even though we think nothing of spending money on things we don’t really need when we could be sending that money to save sick or starving children in some distant country. Then there are crimes against the environment, which always seem to rank low on our list of priorities even though their future impact on real human lives could be devastating. We have our justifications for such actions or omissions, to be sure, but how valid are they really? Is distance really a morally relevant factor when we let children die? Does the diffusion of responsibility among so many millions change the fact that we personally could have a measurable impact?

These black marks notwithstanding, cooperation, and even a certain degree of altruism, come naturally to us. To demonstrate this, Greene and his colleagues have devised some clever methods for separating test subjects’ intuitive responses from their more deliberate and effortful decisions. The experiments begin with a multi-participant exchange scenario developed by economic game theorists called the Public Goods Game, which has a number of anonymous players contribute to a common bank whose sum is then doubled and distributed evenly among them. Like the more famous game theory exchange known as the Prisoner’s Dilemma, the outcomes of the Public Goods Game reward cooperation, but only when a threshold number of fellow cooperators is reached. The flip side, however, is that any individual who decides to be stingy can get a free ride from everyone else’s contributions and make an even greater profit. What tends to happen is, over multiple rounds, the number of players opting for stinginess increases until the game is ruined for everyone, a process analogous to a phenomenon in economics known as the Tragedy of the Commons. Everyone wants to graze a few more sheep on the commons than can be sustained fairly, so eventually the grounds are left barren.
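For readers who like to see the arithmetic, here is a minimal sketch of a single round of the Public Goods Game as described above. The doubling of the common bank comes from the description; the four players, the endowment of 10 units each, and the function name `public_goods_payoffs` are assumptions made purely for illustration, not details taken from Greene's experiments.

```python
def public_goods_payoffs(contributions, endowment=10.0, multiplier=2.0):
    """Return each player's payoff for one round.

    Assumed setup (hypothetical numbers): every player starts with the same
    endowment, gives some of it to a common bank, the bank is multiplied
    (doubled by default), and the result is split evenly among all players.
    """
    pot = multiplier * sum(contributions)
    share = pot / len(contributions)
    # Each player keeps whatever was not contributed, plus an equal share.
    return [endowment - c + share for c in contributions]


# Everyone contributes everything: the group does as well as it can.
all_cooperate = public_goods_payoffs([10, 10, 10, 10])

# One player holds back: the free rider comes out ahead of everyone.
one_free_rider = public_goods_payoffs([0, 10, 10, 10])

# Nobody contributes: everyone just keeps the original endowment.
nobody_cooperates = public_goods_payoffs([0, 0, 0, 0])

print(all_cooperate)
print(one_free_rider)
print(nobody_cooperates)
```

Under these assumed numbers, the lone free rider earns more than he would have by cooperating, while the three contributors earn less than they would under full cooperation but still more than if nobody gave anything. That gap is the temptation that tends to unravel the game over repeated rounds.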

The Biological and Cultural Evolution of Morality

Greene believes that humans evolved emotional mechanisms to prevent the various analogs of the Tragedy of the Commons from occurring so that we can live together harmoniously in tight-knit groups. The outcomes of multiple rounds of the Public Goods Game, for instance, tend to be far less dismal when players are given the opportunity to devote a portion of their own profits to punishing free riders. Most humans, it turns out, will be motivated by the emotion of anger to expend their own resources for the sake of enforcing fairness. Over several rounds, cooperation becomes the norm. Such an outcome has been replicated again and again, but researchers are always interested in factors that influence players’ strategies in the early rounds. Greene describes a series of experiments he conducted with David Rand and Martin Nowak, which were reported in an article in Nature in 2012. He writes,

…we conducted our own Public Goods Games, in which we forced some people to decide quickly (less than ten seconds) and forced others to decide slowly (more than ten seconds). As predicted, forcing people to decide faster made them more cooperative and forcing people to slow down made them less cooperative (more likely to free ride). In other experiments, we asked people, before playing the Public Goods Game, to write about a time in which their intuitions served them well, or about a time in which careful reasoning led them astray. Reflecting on the advantages of intuitive thinking (or the disadvantages of careful reflection) made people more cooperative. Likewise, reflecting on the advantages of careful reasoning (or the disadvantages of intuitive thinking) made people less cooperative. (62)

These results offer strong support for Greene’s dual-process theory of morality, and they even hint at the possibility that the manual mode is fundamentally selfish or amoral—in other words, that the philosophers have been right all along in deferring to human intuitions about right and wrong.

As good as our intuitive moral sense is for preventing the Tragedy of the Commons, however, when given free rein in a society composed of large groups of people who are strangers to one another, each with its own culture and priorities, our natural moral settings bring about an altogether different tragedy. Greene labels it the Tragedy of Commonsense Morality. He explains,

Morality evolved to enable cooperation, but this conclusion comes with an important caveat. Biologically speaking, humans were designed for cooperation, but only with some people. Our moral brains evolved for cooperation within groups, and perhaps only within the context of personal relationships. Our moral brains did not evolve for cooperation between groups (at least not all groups). (23)

Expanding on the story behind the Tragedy of the Commons, Greene describes what would happen if several groups, each having developed its own unique solution for making sure the commons were protected from overgrazing, were suddenly to come into contact with one another on a transformed landscape called the New Pastures. Each group would likely harbor suspicions against the others, and when it came time to negotiate a new set of rules to govern everybody the groups would all show a significant, though largely unconscious, bias in favor of their own members and their own ways.

The origins of moral psychology as a field can be traced to both developmental and evolutionary psychology. Seminal research conducted at Yale’s Infant Cognition Center, led by Karen Wynn, Kiley Hamlin, and Paul Bloom (and which Bloom describes in a charming and highly accessible book called Just Babies), has demonstrated that children as young as six months possess what we can easily recognize as a rudimentary moral sense. These findings suggest that much of the behavior we might have previously ascribed to lessons learned from adults is actually innate. Experiments based on game theory scenarios and thought-experiments like the trolley dilemmas are likewise thought to tap into evolved patterns of human behavior. Yet when University of British Columbia psychologist Joseph Henrich teamed up with several anthropologists to see how people living in various small-scale societies responded to game theory scenarios like the Prisoner’s Dilemma and the Public Goods Game, they discovered a great deal of variation. On the one hand, then, human moral intuitions seem to be rooted in emotional responses present at, or at least close to, birth, but on the other hand cultures vary widely in their conventional responses to classic dilemmas. These differences between cultural conceptions of right and wrong are in large part responsible for the conflict Greene envisions in his Parable of the New Pastures.

But how can a moral sense be both innate and culturally variable? “As you might expect,” Greene explains, “the way people play these games reflects the way they live.” People in some cultures rely much more heavily on cooperation to procure their sustenance, as is the case with the Lamalera of Indonesia, who live off the meat of whales they hunt in groups. Cultures also vary in how much they rely on market economies as opposed to less abstract and less formal modes of exchange. Just as people adjust the way they play economic games in response to other players’ moves, people acquire habits of cooperation based on the circumstances they face in their particular societies. Regarding the differences between small-scale societies in common game theory strategies, Greene writes,

Henrich and colleagues found that payoffs to cooperation and market integration explain more than two thirds of the variation across these cultures. A more recent study shows that, across societies, market integration is an excellent predictor of altruism in the Dictator Game. At the same time, many factors that you might expect to be important predictors of cooperative behavior—things like an individual’s sex, age, and relative wealth, or the amount of money at stake—have little predictive power. (72)

In much the same way humans are programmed to learn a language and acquire a set of religious beliefs, they also come into the world with a suite of emotional mechanisms that make up the raw material for what will become a culturally calibrated set of moral intuitions. The specific language and religion we end up with are of course dependent on the social context of our upbringing, just as our specific moral settings will reflect those of other people in the societies we grow up in.

Jonathan Haidt and Tribal Righteousness

In our modern industrial society, we actually have some degree of choice when it comes to our cultural affiliations, and this freedom opens the way for heritable differences between individuals to play a larger role in our moral development. Such differences are nowhere more apparent than in the realm of politics, where nearly all citizens occupy some point on a continuum between conservative and liberal. According to Greene’s fellow moral psychologist Jonathan Haidt, we have precious little control over our moral responses because, in his view, reason only comes into play to justify actions and judgments we’ve already made. In his fascinating 2012 book The Righteous Mind, Haidt insists,

Moral reasoning is part of our lifelong struggle to win friends and influence people. That’s why I say that “intuitions come first, strategic reasoning second.” You’ll misunderstand moral reasoning if you think about it as something people do by themselves in order to figure out the truth. (50)

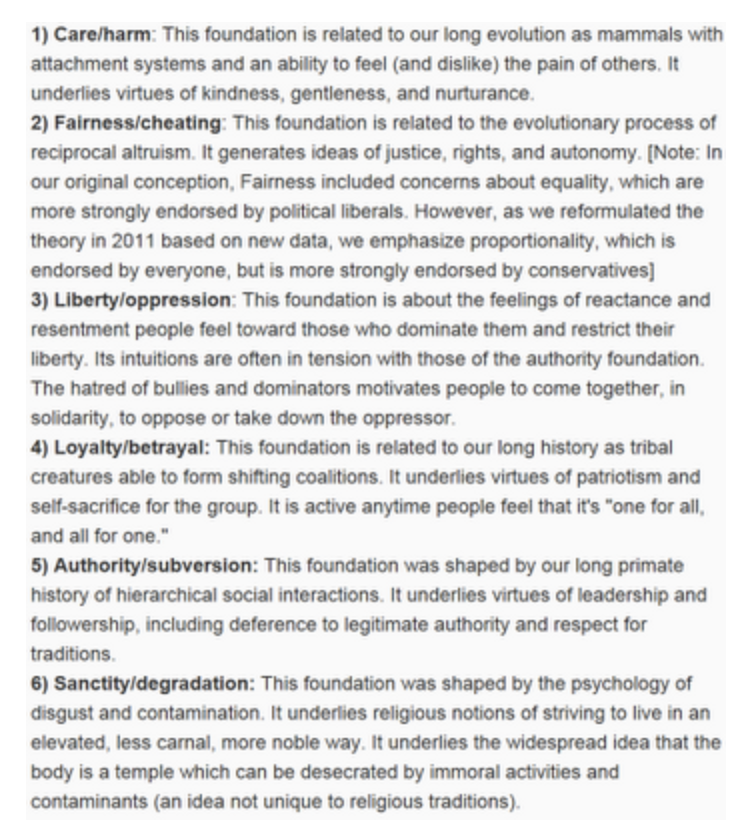

To explain the moral divide between right and left, Haidt points to the findings of his own research on what he calls Moral Foundations, six dimensions underlying our intuitions about moral and immoral actions. Conservatives tend to endorse judgments based on all six of the foundations, valuing loyalty, authority, and sanctity much more than liberals, who focus more exclusively on care for the disadvantaged, fairness, and freedom from oppression. Since our politics emerge from our moral intuitions and reason merely serves as a sort of PR agent to rationalize judgments after the fact, Haidt enjoins us to be more accepting of rival political groups; after all, you can’t reason with them.

Greene objects both to Haidt’s Moral Foundations theory and to his prescription for a politics of complementarity. The responses to questions representing all the moral dimensions in Haidt’s studies form two clusters on a graph, Greene points out, not six, suggesting that the differences between conservatives and liberals are attributable to some single overarching variable as opposed to several individual tendencies. Furthermore, the specific content of the questions Haidt uses to flesh out the values of his respondents has a critical limitation. Greene writes,

According to Haidt, American social conservatives place greater value on respect for authority, and that’s true in a sense. Social conservatives feel less comfortable slapping their fathers, even as a joke, and so on. But social conservatives do not respect authority in a general way. Rather, they have great respect for authorities recognized by their tribe (from the Christian God to various religious and political leaders to parents). American social conservatives are not especially respectful of Barack Hussein Obama, whose status as a native-born American, and thus a legitimate president, they have persistently challenged. (339)

The same limitation applies to the loyalty and sanctity foundations. Conservatives feel little loyalty toward the atheists and Muslims among their fellow Americans. Nor do they recognize the sanctity of mosques or Hindu holy texts. Greene goes on,

American social conservatives are not best described as people who place special value on authority, sanctity, and loyalty, but rather as tribal loyalists—loyal to their own authorities, their own religion, and themselves. This doesn’t make them evil, but it does make them parochial, tribal. In this they’re akin to the world’s other socially conservative tribes, from the Taliban in Afghanistan to European nationalists. According to Haidt, liberals should be more open to compromise with social conservatives. I disagree. In the short term, compromise may be necessary, but in the long term, our strategy should not be to compromise with tribal moralists, but rather to persuade them to be less tribalistic. (340)

Greene believes such persuasion is possible, even with regard to emotionally and morally charged controversies, because he sees our manual-mode thinking as playing a potentially much greater role than Haidt sees it playing.

Metamorality on the New Pastures

Throughout The Righteous Mind, Haidt argues that the moral philosophers who laid the foundations of modern liberal and academic conceptions of right and wrong gave short shrift to emotions and intuitions—that they gave far too much credit to our capacity for reason. To be fair, Haidt does honor the distinction between descriptive and prescriptive theories of morality, but he nonetheless gives the impression that he considers liberal morality to be somewhat impoverished. Greene sees this attitude as thoroughly wrongheaded. Responding to Haidt’s metaphor comparing his Moral Foundations to taste buds—with the implication that the liberal palate is more limited in the range of flavors it can appreciate—Greene writes,

The great philosophers of the Enlightenment wrote at a time when the world was rapidly shrinking, forcing them to wonder whether their own laws, their own traditions, and their own God(s) were any better than anyone else’s. They wrote at a time when technology (e.g., ships) and consequent economic productivity (e.g., global trade) put wealth and power into the hands of a rising educated class, with incentives to question the traditional authorities of king and church. Finally, at this time, natural science was making the world comprehensible in secular terms, revealing universal natural laws and overturning ancient religious doctrines. Philosophers wondered whether there might also be universal moral laws, ones that, like Newton’s law of gravitation, applied to members of all tribes, whether or not they knew it. Thus, the Enlightenment philosophers were not arbitrarily shedding moral taste buds. They were looking for deeper, universal moral truths, and for good reason. They were looking for moral truths beyond the teachings of any particular religion and beyond the will of any earthly king. They were looking for what I’ve called a metamorality: a pan-tribal, or post-tribal, philosophy to govern life on the new pastures. (338-9)

While Haidt insists we must recognize the centrality of intuitions even in this civilization nominally ruled by reason, Greene points out that it was skepticism of old, seemingly unassailable and intuitive truths that opened up the world and made modern industrial civilization possible in the first place.

As Haidt explains, though, conservative morality serves people well in certain regards. Christian churches, for instance, encourage charity and foster a sense of community few secular institutions can match. But these advantages at the level of parochial groups have to be weighed against the problems tribalism inevitably leads to at higher levels. This is, in fact, precisely the point Greene created his Parable of the New Pastures to make. He writes,

The Tragedy of the Commons is averted by a suite of automatic settings—moral emotions that motivate and stabilize cooperation within limited groups. But the Tragedy of Commonsense Morality arises because of automatic settings, because different tribes have different automatic settings, causing them to see the world through different moral lenses. The Tragedy of the Commons is a tragedy of selfishness, but the Tragedy of Commonsense Morality is a tragedy of moral inflexibility. There is strife on the new pastures not because herders are hopelessly selfish, immoral, or amoral, but because they cannot step outside their respective moral perspectives. How should they think? The answer is now obvious: They should shift into manual mode. (172)

Greene argues that whenever we, as a society, are faced with a moral controversy—as with issues like abortion, capital punishment, and tax policy—our intuitions will not suffice because our intuitions are the very basis of our disagreement.

Watching the conclusion to season four of Breaking Bad, most viewers probably responded to finding out that Walt had poisoned Brock by thinking that he’d become a monster—at least at first. Indeed, the currently dominant academic approach to art criticism involves taking a stance, both moral and political, with regard to a work’s models and messages. Writing for The New Yorker, Emily Nussbaum, for instance, disparages viewers of Breaking Bad for failing to condemn Walt, writing,

When Brock was near death in the I.C.U., I spent hours arguing with friends about who was responsible. To my surprise, some of the most hard-nosed cynics thought it inconceivable that it could be Walt—that might make the show impossible to take, they said. But, of course, it did nothing of the sort. Once the truth came out, and Brock recovered, I read posts insisting that Walt was so discerning, so careful with the dosage, that Brock could never have died. The audience has been trained by cable television to react this way: to hate the nagging wives, the dumb civilians, who might sour the fun of masculine adventure. “Breaking Bad” increases that cognitive dissonance, turning some viewers into not merely fans but enablers. (83)

To arrive at such an assessment, Nussbaum must reduce the show to the impact she assumes it will have on less sophisticated fans’ attitudes and judgments. But the really troubling aspect of this type of criticism is that it encourages scholars and critics to indulge their impulse toward self-righteousness when faced with challenging moral dilemmas; in other words, it encourages them to give voice to their automatic modes precisely when they should be shifting to manual mode. Thus, Nussbaum neglects outright the very details that make Walt’s scenario compelling, completely forgetting that by making Brock sick—and, yes, risking his life—he was able to save Hank, Skyler, and his own two children.

But how should we go about arriving at a resolution to moral dilemmas and political controversies if we agree we can’t always trust our intuitions? Greene believes that, while our automatic modes recognize certain acts as wrong and certain others as a matter of duty to perform, in keeping with deontological ethics, whenever we switch to manual mode, the focus shifts to weighing the relative desirability of each option's outcomes. In other words, manual mode thinking is consequentialist. And, since we tend to assess outcomes according to their impact on other people, favoring those that improve the quality of their experiences the most, or detract from it the least, Greene argues that whenever we slow down and think through moral dilemmas deliberately we become utilitarians. He writes,

If I’m right, this convergence between what seems like the right moral philosophy (from a certain perspective) and what seems like the right moral psychology (from a certain perspective) is no accident. If I’m right, Bentham and Mill did something fundamentally different from all of their predecessors, both philosophically and psychologically. They transcended the limitations of commonsense morality by turning the problem of morality (almost) entirely over to manual mode. They put aside their inflexible automatic settings and instead asked two very abstract questions. First: What really matters? Second: What is the essence of morality? They concluded that experience is what ultimately matters, and that impartiality is the essence of morality. Combining these two ideas, we get utilitarianism: We should maximize the quality of our experience, giving equal weight to the experience of each person. (173)

If you cite an authority recognized only by your own tribe—say, the Bible—in support of a moral argument, then members of other tribes will either simply discount your position or counter it with pronouncements by their own authorities. If, on the other hand, you argue for a law or a policy by citing evidence that implementing it would mean longer, healthier, happier lives for the citizens it affects, then only those seeking to establish the dominion of their own tribe can discount your position (which of course isn’t to say they can’t offer rival interpretations of your evidence).

If we turn commonsense morality on its head and evaluate the consequences of giving our intuitions priority over utilitarian accounting, we can find countless circumstances in which being overly moral is to everyone’s detriment. Ideas of justice and fairness allow far too much space for selfish and tribal biases, whereas the math behind mutually optimal outcomes based on compromise tends to be harder to fudge. Greene reports, for instance, the findings of a series of experiments conducted by Fieke Harinick and colleagues at the University of Amsterdam in 2000. Lawyers representing either the prosecution or the defense were told to focus either on serving justice or on getting the best outcome for their clients. The negotiations in the first condition almost always came to loggerheads. Greene explains,

Thus, two selfish and rational negotiators who see that their positions are symmetrical will be willing to enlarge the pie, and then split the pie evenly. However, if negotiators are seeking justice, rather than merely looking out for their bottom lines, then other, more ambiguous, considerations come into play, and with them the opportunity for biased fairness. Maybe your clients really deserve lighter penalties. Or maybe the defendants you’re prosecuting really deserve stiffer penalties. There is a range of plausible views about what’s truly fair in these cases, and you can choose among them to suit your interests. By contrast, if it’s just a matter of getting the best deal you can from someone who’s just trying to get the best deal for himself, there’s a lot less wiggle room, and a lot less opportunity for biased fairness to create an impasse. (88)

Framing an argument as an effort to establish who was right and who was wrong is like drawing a line in the sand—it activates tribal attitudes pitting us against them, while treating negotiations more like an economic exchange circumvents these tribal biases.

Challenges to Utilitarianism

But do we really want to suppress our automatic moral reactions in favor of deliberative accountings of the greatest good for the greatest number? Deontologists have posed some effective challenges to utilitarianism in the form of thought-experiments that seem to show efforts to improve the quality of experiences would lead to atrocities. For instance, Greene recounts how in a high school debate, he was confronted by a hypothetical surgeon who could save five sick people by killing one healthy one. Then there’s the so-called Utility Monster, who experiences such happiness when eating humans that it quantitatively outweighs the suffering of those being eaten. More down-to-earth examples feature a scapegoat convicted of a crime to prevent rioting by people who are angry about police ineptitude, and the use of torture to extract information from a prisoner that could prevent a terrorist attack. The most influential challenge to utilitarianism, however, was leveled by the political philosopher John Rawls when he pointed out that it could be used to justify the enslavement of a minority by a majority.

Greene’s responses to these criticisms make up one of the most surprising, important, and fascinating parts of Moral Tribes. First, highly contrived thought-experiments about Utility Monsters and circumstances in which pushing a guy off a bridge is guaranteed to stop a trolley may indeed prove that utilitarianism is not true in any absolute sense. But whether or not such moral absolutes even exist is a contentious issue in its own right. Greene explains,

I am not claiming that utilitarianism is the absolute moral truth. Instead I’m claiming that it’s a good metamorality, a good standard for resolving moral disagreements in the real world. As long as utilitarianism doesn’t endorse things like slavery in the real world, that’s good enough. (275-6)

One source of confusion regarding the slavery issue is the equation of happiness with money; slave owners probably could make profits in excess of the losses sustained by the slaves. But money is often a poor index of happiness. Greene underscores this point by asking us to consider how much someone would have to pay us to sell ourselves into slavery. “In the real world,” he writes, “oppression offers only modest gains in happiness to the oppressors while heaping misery upon the oppressed” (284-5).

Another failing of the thought-experiments thought to undermine utilitarianism is the shortsightedness of the supposedly obvious responses. The crimes of the murderous doctor and the scapegoating law officers may indeed produce short-term increases in happiness, but if the secret gets out, healthy and innocent people will live in fear, knowing they can’t trust doctors and law officers. The same logic applies to the objection that utilitarianism would force us to become infinitely charitable, since we can almost always afford to be more generous than we currently are. But how long could we serve as so-called happiness pumps before burning out, becoming miserable, and thus losing the capacity for making anyone else happier? Greene writes,

If what utilitarianism asks of you seems absurd, then it’s not what utilitarianism actually asks of you. Utilitarianism is, once again, an inherently practical philosophy, and there’s nothing more impractical than commanding free people to do things that strike them as absurd and that run counter to their most basic motivations. Thus, in the real world, utilitarianism is demanding, but not overly demanding. It can accommodate our basic human needs and motivations, but it nonetheless calls for substantial reform of our selfish habits. (258)

Greene seems to be endorsing what philosophers call "rule utilitarianism." We can approach every choice by calculating the likely outcomes, but as a society we would be better served deciding on some rules for everyone to adhere to. It just may be possible for a doctor to increase happiness through murder in a particular set of circumstances—but most people would vociferously object to a rule legitimizing the practice.

The concept of human rights may present another challenge to Greene in his championing of consequentialism over deontology. It is our duty, after all, to recognize the rights of every human, and we ourselves have no right to disregard someone else’s rights no matter what benefit we believe might result from doing so. In his book The Better Angels of our Nature, Steven Pinker, Greene’s colleague at Harvard, attributes much of the steep decline in rates of violent death over the past three centuries to a series of what he calls Rights Revolutions, the first of which began during the Enlightenment. But the problem with arguments that refer to rights, Greene explains, is that controversies arise for the very reason that people don’t agree which rights we should recognize. He writes,

Thus, appeals to “rights” function as an intellectual free pass, a trump card that renders evidence irrelevant. Whatever you and your fellow tribespeople feel, you can always posit the existence of a right that corresponds to your feelings. If you feel that abortion is wrong, you can talk about a “right to life.” If you feel that outlawing abortion is wrong, you can talk about a “right to choose.” If you’re Iran, you can talk about your “nuclear rights,” and if you’re Israel you can talk about your “right to self-defense.” “Rights” are nothing short of brilliant. They allow us to rationalize our gut feelings without doing any additional work. (302)

The only way to resolve controversies over which rights we should actually recognize and which rights we should prioritize over others, Greene argues, is to apply utilitarian reasoning.

Ideology and Epistemology

In his discussion of the proper use of the language of rights, Greene comes closer than in any other section of Moral Tribes to explicitly articulating what strikes me as the most revolutionary idea that he and his fellow moral psychologists are suggesting—albeit as of yet only implicitly. In his advocacy for what he calls “deep pragmatism,” Greene isn’t merely applying evolutionary theories to an old philosophical debate; he’s actually posing a subtly different question. The numerous thought-experiments philosophers use to poke holes in utilitarianism may not have much relevance in the real world—but they do undermine any claim utilitarianism may have on absolute moral truth. Greene’s approach is therefore to eschew any effort to arrive at absolute truths, including truths pertaining to rights. Instead, in much the same way scientists accept that our knowledge of the natural world is mediated by theories, which only approach the truth asymptotically, never capturing it with any finality, Greene intimates that the important task in the realm of moral philosophy isn’t to arrive at a full accounting of moral truths but rather to establish a process for resolving moral and political dilemmas.

What’s needed, in other words, isn’t a rock solid moral ideology but a workable moral epistemology. And, just as empiricism serves as the foundation of the epistemology of science, Greene makes a convincing case that we could use utilitarianism as the basis of an epistemology of morality. Pursuing the analogy between scientific and moral epistemologies even farther, we can compare theories, which stand or fall according to their empirical support, to individual human rights, which we afford and affirm according to their impact on the collective happiness of every individual in the society. Greene writes,

If we are truly interested in persuading our opponents with reason, then we should eschew the language of rights. This is, once again, because we have no non-question-begging (and utilitarian) way of figuring out which rights really exist and which rights take precedence over others. But when it’s not worth arguing—either because the question has been settled or because our opponents can’t be reasoned with—then it’s time to start rallying the troops. It’s time to affirm our moral commitments, not with wonky estimates of probabilities but with words that stir our souls. (308-9)

Rights may be the closest thing we have to moral truths, just as theories serve as our stand-ins for truths about the natural world, but even more important than rights or theories are the processes we rely on to establish and revise them.

A New Theory of Narrative

As if a philosophical revolution weren’t enough, moral psychology is also putting in place what could be the foundation of a new understanding of the role of narratives in human lives. At the heart of every story is a conflict between competing moral ideals. In commercial fiction, there tends to be a character representing each side of the conflict, and audiences can be counted on to favor one side over the other—the good, altruistic guys over the bad, selfish guys. In more literary fiction, on the other hand, individual characters are faced with dilemmas pitting various modes of moral thinking against each other. In season one of Breaking Bad, for instance, Walter White famously writes a list on a notepad of the pros and cons of murdering the drug dealer restrained in Jesse’s basement. Everyone, including Walt, feels that killing the man is wrong, but if they let him go Walt and his family will be at risk of retaliation. This dilemma is in fact quite similar to ones he faces in each of the following seasons, right up until he has to decide whether or not to poison Brock. Trying to work out what Walt should do, and anxiously anticipating what he will do, are mental exercises few can help engaging in as they watch the show.

The current reigning conception of narrative in academia explains the appeal of stories by suggesting it derives from their tendency to fulfill conscious and unconscious desires, most troublesomely our desires to have our prejudices reinforced. We like to see men behaving in ways stereotypically male, women stereotypically female, minorities stereotypically black or Hispanic, and so on. Cultural products like works of art, and even scientific findings, are embraced, the reasoning goes, because they cement the hegemony of various dominant categories of people within the society. This tradition in arts scholarship and criticism can in large part be traced back to psychoanalysis, but it has developed over the last century to incorporate both the predominant view of language in the humanities and the cultural determinism espoused by many scholars in the service of various forms of identity politics.

The postmodern ideology that emerged from the convergence of these schools is marked by a suspicion that science is often little more than a veiled effort at buttressing the political status quo, and its preeminent thinkers deliberately set themselves apart from the humanist and Enlightenment traditions that held sway in academia until the middle of the last century by writing in byzantine, incoherent prose. Even though there could be no rational way either to support or challenge postmodern ideas, scholars still take them as cause for leveling accusations against both scientists and storytellers of using their work to further reactionary agendas.

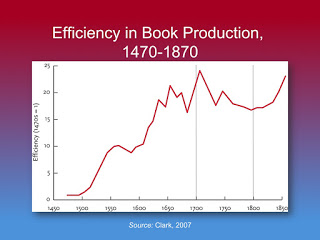

For anyone who recognizes the unparalleled power of science both to advance our understanding of the natural world and to improve the conditions of human lives, postmodernism stands out as a catastrophic wrong turn, not just in academic history but in the moral evolution of our civilization. The equation of narrative with fantasy is a bizarre fantasy in its own right. Attempting to explain the appeal of a show like Breaking Bad by suggesting that viewers have an unconscious urge to be diagnosed with cancer and to subsequently become drug manufacturers is symptomatic of intractable institutional delusion. And, as Pinker recounts in Better Angels, literature, and novels in particular, were likely instrumental in bringing about the shift in consciousness toward greater compassion for greater numbers of people that resulted in the unprecedented decline in violence beginning in the second half of the nineteenth century.

Yet, when it comes to arts scholarship, postmodernism is just about the only game in town. Granted, the writing in this tradition has progressed a great deal toward greater clarity, but the focus on identity politics has intensified to the point of hysteria: you’d be hard-pressed to find a major literary figure who hasn’t been accused of misogyny at one point or another, and any scientist who dares study something like gender differences can count on having her motives questioned and her work lampooned by well intentioned, well indoctrinated squads of well-poisoning liberal wags.

When Emily Nussbaum complains about viewers of Breaking Bad being lulled by the “masculine adventure” and the digs against “nagging wives” into becoming enablers of Walt’s bad behavior, she’s doing exactly what so many of us were taught to do in academic courses on literary and film criticism, applying a postmodern feminist ideology to the show—and completely missing the point. As the series opens, Walt is deliberately portrayed as downtrodden and frustrated, and Skyler’s bullying is an important part of that dynamic. But the pleasure of watching the show doesn’t come so much from seeing Walt get out from under Skyler’s thumb—he never really does—as it does from anticipating and fretting over how far Walt will go in his criminality, goaded on by all that pent-up frustration. Walt shows a similar concern for himself, worrying over what effect his exploits will have on who he is and how he will be remembered. We see this in season three when he becomes obsessed by the “contamination” of his lab—which turns out to be no more than a house fly—and at several other points as well. Viewers are not concerned with Walt because he serves as an avatar acting out their fantasies (or else the show would have a lot more nude scenes with the principal of the school he teaches in). They’re concerned because, at least at the beginning of the show, he seems to be a good person and they can sympathize with his tribulations.

The much more solidly grounded view of narrative inspired by moral psychology suggests that common themes in fiction are not reflections or reinforcements of some dominant culture, but rather emerge from aspects of our universal human psychology. Our feelings about characters, according to this view, aren’t determined by how well they coincide with our abstract prejudices; on the contrary, we favor the types of people in fiction we would favor in real life. Indeed, if the story is any good, we will have to remind ourselves that the people whose lives we’re tracking really are fictional. Greene doesn’t explore the intersection between moral psychology and narrative in Moral Tribes, but he does give a nod to what we can only hope will be a burgeoning field when he writes,

Nowhere is our concern for how others treat others more apparent than in our intense engagement with fiction. Were we purely selfish, we wouldn’t pay good money to hear a made-up story about a ragtag group of orphans who use their street smarts and quirky talents to outfox a criminal gang. We find stories about imaginary heroes and villains engrossing because they engage our social emotions, the ones that guide our reactions to real-life cooperators and rogues. We are not disinterested parties. (59)

Many people ask why we care so much about people who aren’t even real, but we only ever reflect on the fact that what we’re reading or viewing is a simulation when we’re not sufficiently engrossed by it.

Was Nussbaum completely wrong to insist that Walt went beyond the pale when he poisoned Brock? She certainly showed the type of myopia Greene attributes to the automatic mode by forgetting Walt saved at least four lives by endangering the one. But most viewers probably had a similar reaction. The trouble wasn’t that she was appalled; it was that her postmodernism encouraged her to unquestioningly embrace and give voice to her initial feelings. Greene writes,

It’s good that we’re alarmed by acts of violence. But the automatic emotional gizmos in our brains are not infinitely wise. Our moral alarm systems think that the difference between pushing and hitting a switch is of great moral significance. More important, our moral alarm systems see no difference between self-serving murder and saving a million lives at the cost of one. It’s a mistake to grant these gizmos veto power in our search for a universal moral philosophy. (253)

Greene doesn’t reveal whether he’s a Breaking Bad fan or not, but his discussion of the footbridge dilemma gives readers a good indication of how he’d respond to Walt’s actions.

If you don’t feel that it’s wrong to push the man off the footbridge, there’s something wrong with you. I, too, feel that it’s wrong, and I doubt that I could actually bring myself to push, and I’m glad that I’m like this. What’s more, in the real world, not pushing would almost certainly be the right decision. But if someone with the best of intentions were to muster the will to push the man off the footbridge, knowing for sure that it would save five lives, and knowing for sure that there was no better alternative, I would approve of this action, although I might be suspicious of the person who chose to perform it. (251)

The overarching theme of Breaking Bad, which Nussbaum fails utterly to comprehend, is the transformation of a good man into a bad one. When he poisons Brock, we’re glad he succeeded in saving his family, and some of us are even okay with his methods, but we’re worried—suspicious even—about what his ability to go through with it says about him. Over the course of the series, we’ve found ourselves rooting for Walt, and we’ve come to really like him. We don’t want to see him break too bad. And however bad he does break, we can’t help hoping for his redemption. Since he’s a valuable member of our tribe, we’re loath to even consider it might be time to start thinking he has to go.

Also read:

The Criminal Sublime: Walter White's Brutally Plausible Journey to the Heart of Darkness in Breaking Bad

And

LET'S PLAY KILL YOUR BROTHER: FICTION AS A MORAL DILEMMA GAME

And

The Self-Righteousness Instinct: Steven Pinker on the Better Angels of Modernity and the Evils of Morality

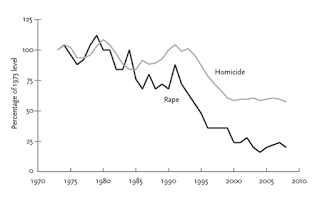

Is violence really declining? How can that be true? What could be causing it? Why are so many of us convinced the world is going to hell in a hand basket? Steven Pinker attempts to answer these questions in his magnificent and mind-blowing book.

Steven Pinker is one of the few scientists who can write a really long book and still expect a significant number of people to read it. But I have a feeling many who might be vaguely intrigued by the buzz surrounding his 2011 book The Better Angels of Our Nature: Why Violence Has Declined wonder why he had to make it nearly seven hundred outsized pages long. Many curious folk likely also wonder why a linguist who proselytizes for psychological theories derived from evolutionary or Darwinian accounts of human nature would write a doorstop drawing on historical and cultural data to describe the downward trajectories of rates of the worst societal woes. The message that violence of pretty much every variety is at unprecedentedly low rates comes as quite a shock, as it runs counter to our intuitive, news-fueled sense of being on a crash course for Armageddon. So part of the reason behind the book’s heft is that Pinker has to bolster his case with lots of evidence to get us to rethink our views. But flipping through the book you find that somewhere between half and a third of its mass is devoted, not to evidence of the decline, but to answering the questions of why the trend has occurred and why it gives every indication of continuing into the foreseeable future. So is this a book about how evolution has made us violent or about how culture is making us peaceful?

The first thing that needs to be said about Better Angels is that you should read it. Despite its girth, it’s at no point the least bit cumbersome to read, and at many points it’s so fascinating that, weighty as it is, you’ll have a hard time putting it down. Pinker has mastered a prose style that’s simple and direct to the point of feeling casual without ever wanting for sophistication. You can also rest assured that what you’re reading is timely and important because it explores aspects of history and social evolution that impact pretty much everyone in the world but that have gone ignored—if not censoriously denied—by most of the eminences contributing to the zeitgeist since the decades following the last world war.

Still, I suspect many people who take the plunge into the first hundred or so pages are going to feel a bit disoriented as they try to figure out what the real purpose of the book is, and this may cause them to falter in their resolve to finish reading. The problem is that the resistance Better Angels simultaneously anticipates and responds to, at such a prodigious page count, doesn’t come from news media or the blinkered celebrities in the carnivals of sanctimonious imbecility that are political talk shows. It comes from Pinker’s fellow academics. The overall point of Better Angels remains obscure owing to some deliberate caginess on the author’s part when it comes to identifying the true targets of his arguments.

This evasiveness doesn’t make the book difficult to read, but a quality of diffuseness to the theoretical sections, a multitude of strands left dangling, does at points make you doubt whether Pinker had a clear purpose in writing, which makes you doubt your own purpose in reading. With just a little tying together of those strands, however, you start to see that while on the surface he’s merely righting the misperception that over the course of history our species has been either consistently or increasingly violent, what he’s really after is something different, something bigger. He’s trying to instigate, or at least play a part in instigating, a revolution—or more precisely a renaissance—in the way scholars and intellectuals think not just about human nature but about the most promising ways to improve the lot of human societies.

The longstanding complaint about evolutionary explanations of human behavior is that by focusing on our biology as opposed to our supposedly limitless capacity for learning they imply a certain level of fixity to our nature, and this fixedness is thought to further imply a limit to what political reforms can accomplish. The reasoning goes, if the explanation for the way things are is to be found in our biology, then, unless our biology changes, the way things are is the way they’re going to remain. Since biological change occurs at the glacial pace of natural selection, we’re pretty much stuck with the nature we have.

Historically, many scholars have made matters worse for evolutionary scientists today by applying ostensibly Darwinian reasoning to what seemed at the time obvious biological differences between human races in intelligence and capacity for acquiring the more civilized graces, making no secret of their conviction that the differences justified colonial expansion and other forms of oppressive rule. As a result, evolutionary psychologists of the past couple of decades have routinely had to defend themselves against charges that they’re secretly trying to advance some reactionary (or even genocidal) agenda. Considering Pinker’s choice of topic in Better Angels in light of this type of criticism, we can start to get a sense of what he’s up to—and why his efforts are discombobulating.

If you’ve spent any time on a university campus in the past forty years, particularly if it was in a department of the humanities, then you have been inculcated with an ideology that was once labeled postmodernism but that eventually became so entrenched in academia, and in intellectual culture more broadly, that it no longer requires a label. (If you took a class with the word "studies" in the title, then you got a direct shot to the brain.) Many younger scholars actually deny any espousal of it—“I’m not a pomo!”—with reference to a passé version marked by nonsensical tangles of meaningless jargon and the conviction that knowledge of the real world is impossible because “the real world” is merely a collective delusion or social construction put in place to perpetuate societal power structures. The disavowals notwithstanding, the essence of the ideology persists in an inescapable but unremarked obsession with those same power structures—the binaries of men and women, whites and blacks, rich and poor, the West and the rest—and the abiding assumption that texts and other forms of media must be assessed not just according to their truth content, aesthetic virtue, or entertainment value, but also with regard to what we imagine to be their political implications. Indeed, those imagined political implications are often taken as clear indicators of the author’s true purpose in writing, which we must sniff out—through a process called “deconstruction,” or its anemic offspring “rhetorical analysis”—lest we complacently succumb to the subtle persuasion.

In the late nineteenth and early twentieth centuries, faith in what we now call modernism inspired intellectuals to assume that the civilizations of Western Europe and the United States were on a steady march of progress toward improved lives for all their own inhabitants as well as the world beyond their borders. Democracy had brought about a new age of government in which rulers respected the rights and freedom of citizens. Medicine was helping ever more people live ever longer lives. And machines were transforming everything from how people labored to how they communicated with friends and loved ones. Everyone recognized that the driving force behind this progress was the juggernaut of scientific discovery. But jump ahead a hundred years to the early twenty-first century and you see a quite different attitude toward modernity. As Pinker explains in the closing chapter of Better Angels,

A loathing of modernity is one of the great constants of contemporary social criticism. Whether the nostalgia is for small-town intimacy, ecological sustainability, communitarian solidarity, family values, religious faith, primitive communism, or harmony with the rhythms of nature, everyone longs to turn back the clock. What has technology given us, they say, but alienation, despoliation, social pathology, the loss of meaning, and a consumer culture that is destroying the planet to give us McMansions, SUVs, and reality television? (692)

The social pathology here consists of all the inequities and injustices suffered by the people on the losing side of those binaries all us closet pomos go about obsessing over. Then of course there’s industrial-scale war and all the other types of modern violence. With terrorism, the War on Terror, the civil war in Syria, the Israel-Palestine conflict, genocides in the Sudan, Kosovo, and Rwanda, and the marauding bands of drugged-out gang rapists in the Congo, it seems safe to assume that science and democracy and capitalism have contributed to the construction of an unsafe global system with some fatal, even catastrophic design flaws. And that’s before we consider the two world wars and the Holocaust. So where the hell is this decline Pinker refers to in his title?

One way to think about the strain of postmodernism or anti-modernism with the most currency today (and if you’re reading this essay you can just assume your views have been influenced by it) is that it places morality and politics—identity politics in particular—atop a hierarchy of guiding standards above science and individual rights. So, for instance, concerns over the possibility that a negative image of Amazonian tribespeople might encourage their further exploitation trump objective reporting on their culture by anthropologists, even though there’s no evidence to support those concerns. And evidence that the disproportionate number of men in STEM fields reflects average differences between men and women in lifestyle preferences and career interests is ignored out of deference to a political ideal of perfect parity. The urge to grant moral and political ideals veto power over science is justified in part by all the oppression and injustice that abounds in modern civilizations—sexism, racism, economic exploitation—but most of all it’s rationalized with reference to the violence thought to follow in the wake of any movement toward modernity. Pinker writes,

“The twentieth century was the bloodiest in history” is a cliché that has been used to indict a vast range of demons, including atheism, Darwin, government, science, capitalism, communism, the ideal of progress, and the male gender. But is it true? The claim is rarely backed up by numbers from any century other than the 20th, or by any mention of the hemoclysms of centuries past. (193)

He gives the question even more gravity when he reports that all those other areas in which modernity is alleged to be such a colossal failure tend to improve in the absence of violence. “Across time and space,” he writes in the preface, “the more peaceable societies also tend to be richer, healthier, better educated, better governed, more respectful of their women, and more likely to engage in trade” (xxiii). So the question isn’t just about what the story with violence is; it’s about whether science, liberal democracy, and capitalism are the disastrous blunders we’ve learned to think of them as or whether they still just might hold some promise for a better world.

*******

It’s in about the third chapter of Better Angels that you start to get the sense that Pinker’s style of thinking is, well, way out of style. He seems to be marching to the beat not of his own drummer but of some drummer from the nineteenth century. In the previous chapter, he drew a line connecting the violence of chimpanzees to that in what he calls non-state societies, and the images he’s left you with are savage indeed. Now he’s bringing in the philosopher Thomas Hobbes’s idea of a government Leviathan that, once established, immediately works to curb the violence that characterizes us humans in states of nature and anarchy. According to sociologist Norbert Elias’s 1969 book, The Civilizing Process, a work whose thesis plays a starring role throughout Better Angels, the consolidation of a Leviathan in England set in motion a trend toward pacification, beginning with the aristocracy no less, before spreading down to the lower ranks and radiating out to the countries of continental Europe and onward thence to other parts of the world. You can measure your feelings of unease in response to Pinker’s civilizing scenario as a proxy for how thoroughly steeped you are in postmodernism.

The two factors missing from his account of the civilizing pacification of Europe that distinguish it from the self-congratulatory and self-exculpatory sagas of centuries past are the innate superiority of the paler stock and the special mission of conquest and conversion commissioned by a Christian god. In a later chapter, Pinker violates the contemporary taboo against discussing—or even thinking about—the potential role of average group (racial) differences in a propensity toward violence, but he concludes the case for any such differences is unconvincing: “while recent biological evolution may, in theory, have tweaked our inclinations toward violence and nonviolence, we have no good evidence that it actually has” (621). The conclusion that the Civilizing Process can’t be contingent on congenital characteristics follows from the observation of how readily individuals from far-flung regions acquire local habits of self-restraint and fellow-feeling when they’re raised in modernized societies. As for religion, Pinker includes it in a category of factors that are “Important but Inconsistent” with regard to the trend toward peace, dismissing the idea that atheism leads to genocide by pointing out that “Fascism happily coexisted with Catholicism in Spain, Italy, Portugal, and Croatia, and though Hitler had little use for Christianity, he was by no means an atheist, and professed that he was carrying out a divine plan.” Though he cites several examples of atrocities incited by religious fervor, he does credit “particular religious movements at particular times in history” with successfully working against violence (677).

Despite his penchant for blithely trampling on the taboos of the liberal intelligentsia, Pinker refuses to cooperate with our reflex to pigeonhole him with imperialists or far-right traditionalists past or present. He continually holds up to ridicule the idea that violence has any redeeming effects. In a section on the connection between increasing peacefulness and rising intelligence, he suggests that our violence-tolerant “recent ancestors” can rightly be considered “morally retarded” (658).

He singles out George W. Bush as an unfortunate and contemptible counterexample in a trend toward more complex political rhetoric among our leaders. And if it’s either gender that comes out not looking as virtuous in Better Angels it ain’t the distaff one. Pinker is difficult to categorize politically because he’s a scientist through and through. What he’s after are reasoned arguments supported by properly weighed evidence.

But there is something going on in Better Angels beyond a mere accounting for the ongoing decline in violence, a decline most of us benefit from without ever noticing. For one, there’s a challenge to the taboo status of topics like genetic differences between groups, or differences between individuals in IQ, or differences between genders. And there’s an implicit challenge as well to the complementary premises he took on more directly in his earlier book The Blank Slate that biological theories of human nature always lead to oppressive politics and that theories of the infinite malleability of human behavior always lead to progress (communism relies on a blank slate theory, and it inspired guys like Stalin, Mao, and Pol Pot to murder untold millions). But the most interesting and important task Pinker has set for himself with Better Angels is a restoration of the Enlightenment, with its twin pillars of science and individual rights, to its rightful place atop the hierarchy of our most cherished guiding principles, the position we as a society misguidedly allowed to be usurped by postmodernism, with its own dual pillars of relativism and identity politics.

But, while the book succeeds handily in undermining the moral case against modernism, it does so largely by stealth, with only a few explicit references to the ideologies whose advocates have dogged Pinker and his fellow evolutionary psychologists for decades. Instead, he explores how our moral intuitions and political ideals often inspire us to make profoundly irrational arguments for positions that rational scrutiny reveals to be quite immoral, even murderous. As one illustration of how good causes can be taken to silly, but as yet harmless, extremes, he gives the example of how “violence against children has been defined down to dodgeball” (415) in gym classes all over the US, writing that

The prohibition against dodgeball represents the overshooting of yet another successful campaign against violence, the century-long movement to prevent the abuse and neglect of children. It reminds us of how a civilizing offensive can leave a culture with a legacy of puzzling customs, peccadilloes, and taboos. The code of etiquette bequeathed to us by this and other Rights Revolutions is pervasive enough to have acquired a name. We call it political correctness. (381)

Such “civilizing offensives” are deliberately undertaken counterparts to the fortuitously occurring Civilizing Process Elias proposed to explain the jagged downward slope in graphs of relative rates of violence beginning in the Middle Ages in Europe. The original change Elias describes came about as a result of rulers consolidating their territories and acquiring greater authority. As Pinker explains,

Once Leviathan was in charge, the rules of the game changed. A man’s ticket to fortune was no longer being the baddest knight in the area but making a pilgrimage to the king’s court and currying favor with him and his entourage. The court, basically a government bureaucracy, had no use for hotheads and loose cannons, but sought responsible custodians to run its provinces. The nobles had to change their marketing. They had to cultivate their manners, so as not to offend the king’s minions, and their empathy, to understand what they wanted. The manners appropriate for the court came to be called “courtly” manners or “courtesy.” (75)

And this higher premium on manners and self-presentation among the nobles would lead to a cascade of societal changes.

Elias first lighted on his theory of the Civilizing Process as he was reading some of the etiquette guides that survived from that era. It’s striking to us moderns to see that knights of yore had to be told not to dispose of their snot by shooting it into their host’s tablecloth, but that simply shows how thoroughly people today internalize these rules. As Elias explains, they’ve become second nature to us. Of course, we still have to learn them as children. Pinker prefaces his discussion of Elias’s theory with a recollection of his bafflement at why it was so important for him as a child to abstain from using his knife as a backstop to help him scoop food off his plate with a fork. Table manners, he concludes, reside on the far end of a continuum of self-restraint at the opposite end of which are once-common practices like cutting off the nose of a dining partner who insults you. Likewise, protecting children from the perils of flying rubber balls is the product of a campaign against the once-common custom of brutalizing them. The centrality of self-control is the common underlying theme: we control our urge to misuse utensils, including their use in attacking our fellow diners, and we control our urge to throw things at our classmates, even if it’s just in sport. The effect of the Civilizing Process in the Middle Ages, Pinker explains, was that “A culture of honor—the readiness to take revenge—gave way to a culture of dignity—the readiness to control one’s emotions” (72). In other words, diplomacy became more important than deterrence.

What we’re learning here is that even an evolved mind can adjust to changing incentive schemes. Chimpanzees have to control their impulses toward aggression, sexual indulgence, and food consumption in order to survive in hierarchical bands with other chimps, many of whom are bigger, stronger, and better-connected. Much of the violence in chimp populations takes the form of adult males vying for positions in the hierarchy so they can enjoy the perquisites males of lower status must forgo to avoid being brutalized. Lower-ranking males meanwhile bide their time, deferring their gratification until they grow stronger or the alpha grows weaker. In humans, the capacity for impulse-control and the habit of delaying gratification are even more important because we live in even more complex societies. Those capacities can either lie dormant or they can be developed to their full potential depending on exactly how complex the society is in which we come of age. Elias noticed a connection between the move toward more structured bureaucracies, less violence, and an increasing focus on etiquette, and he concluded that self-restraint in the form of adhering to strict codes of comportment was both an advertisement of, and a type of training for, the impulse-control that would make someone a successful bureaucrat.

Aside from children who can’t fathom why we’d futz with our forks trying to capture recalcitrant peas, we normally take our society’s rules of etiquette for granted, no matter how inconvenient or illogical they are, seldom thinking twice before drawing unflattering conclusions about people who don’t bother adhering to them, the ones for whom they aren’t second nature. And the importance we place on etiquette goes beyond table manners. We judge people according to the discretion with which they dispose of any and all varieties of bodily effluent, as well as the delicacy with which they discuss topics sexual or otherwise basely instinctual.

Elias and Pinker’s theory is that, while the particular rules are largely arbitrary, the underlying principle of transcending our animal nature through the application of will, motivated by an appreciation of social convention and the sensibilities of fellow community members, is what marked the transition of certain constituencies of our species from a violent non-state existence to a relatively peaceful, civilized lifestyle. To Pinker, the uptick in violence that ensued once the counterculture of the 1960s came into full blossom was no coincidence. The squares may not have been as exciting as the rock stars who sang their anthems to hedonism and the liberating thrill of sticking it to the man. But a society of squares has certain advantages—a lower probability for each of its citizens of getting beaten or killed foremost among them.

The Civilizing Process as Elias and Pinker, along with Immanuel Kant, understand it picks up momentum as levels of peace conducive to increasingly complex forms of trade are achieved. To understand why the move toward markets or “gentle commerce” would lead to decreasing violence, we pomos have to swallow, at least momentarily, our animus toward Wall Street and all the corporate fat cats in the top one percent of the wealth distribution. The basic dynamic underlying trade is that one person has access to more of something than they need, but less of something else, while another person has the opposite balance, so a trade benefits them both. It’s a win-win, or a positive-sum game. The hard part for educated liberals is to appreciate that economies work to increase the total wealth; there isn’t a set quantity everyone has to divvy up in a zero-sum game, an exchange in which every gain for one is a loss for another. And Pinker points to another benefit: