READING SUBTLY

This was the domain of my Blogger site from 2009 to 2018, when I moved to this domain and started The Storytelling Ape. The search option should help you find any of the old posts you're looking for.

Capuchin-22: A Review of “The Bonobo and the Atheist: In Search of Humanism among the Primates” by Frans de Waal

Frans de Waal’s work is always a joy to read: insightful, surprising, and superbly humane. Unfortunately, in his mostly wonderful book, “The Bonobo and the Atheist,” he trots out a familiar series of straw men to level an attack on modern critics of religion, with whom, had he been more diligent in reading their work, he’d have found much common ground.

Whenever literary folk talk about voice, that supposedly ineffable but transcendently important quality of narration, they display an exasperating penchant for vagueness, as if so lofty a dimension to so lofty an endeavor couldn’t withstand being spoken of directly, or as if they took delight in instilling panic and self-doubt into the quivering hearts of aspiring authors. What those who really know what they mean by voice actually mean by it is all the idiosyncratic elements of prose that give readers a stark and persuasive impression of the narrator as a character. Discussions of what makes for stark and persuasive characters, on the other hand, are vague by necessity. It must be noted that many characters, even outside of fiction, are neither. As a first step toward developing a feel for how character can be conveyed through writing, we may consider the nonfiction work of real people with real character, ones who also happen to be practiced authors.

The Dutch-American primatologist Frans de Waal is one such real-life character, and his prose stands as testament to the power of written language, lonely ink on colorless pages, not only to impart information, but to communicate personality and to make a contagion of states and traits like enthusiasm, vanity, fellow-feeling, bluster, big-heartedness, impatience, and an abiding wonder. De Waal is a writer with voice. Many other scientists and science writers explore this dimension of prose in their attempts to engage readers, but few avoid the traps of being goofy or obnoxious instead of funny (a trap David Pogue, for instance, falls into routinely as he hosts NOVA on PBS) and of expending far too much effort in their attempts at being distinctive, thus failing to achieve anything resembling grace.

The most striking quality of de Waal’s writing, however, isn’t that its good-humored quirkiness never seems strained or contrived, but that it never strays far from the man’s own obsession with getting at the stories behind the behaviors he so minutely observes—whether the characters are his fellow humans or his fellow primates, or even such seemingly unstoried creatures as rats or turtles. But to say that de Waal is an animal lover doesn’t quite capture the essence of what can only be described as a compulsive fascination marked by conviction—the conviction that when he peers into the eyes of a creature others might dismiss as an automaton, a bundle of twitching flesh powered by preprogrammed instinct, he sees something quite different, something much closer to the workings of his own mind and those of his fellow humans.

De Waal’s latest book, The Bonobo and the Atheist: In Search of Humanism among the Primates, reprises the main themes of his previous books, most centrally the continuity between humans and other primates, with an eye toward answering the questions of where morality does, and where it should, come from. Whereas in his books from the years leading up to the turn of the century he again and again had to challenge what he calls “veneer theory,” the notion that without a process of socialization that imposes rules on individuals from some outside source they’d all be greedy and selfish monsters, de Waal has noticed over the past six or so years a marked shift in the zeitgeist toward an awareness of our more cooperative and even altruistic animal urgings. Noting a sharp difference over the decades in how audiences at his lectures respond to recitations of the infamous quote by biologist Michael Ghiselin, “Scratch an altruist and watch a hypocrite bleed,” de Waal writes,

Although I have featured this cynical line for decades in my lectures, it is only since about 2005 that audiences greet it with audible gasps and guffaws as something so outrageous, so out of touch with how they see themselves, that they can’t believe it was ever taken seriously. Had the author never had a friend? A loving wife? Or a dog, for that matter? (43)

The assumption underlying veneer theory was that without civilizing influences humans’ deeper animal impulses would express themselves unchecked. The further assumption was that animals, the end products of the ruthless, eons-long battle for survival and reproduction, would reflect the ruthlessness of that battle in their behavior. De Waal’s first book, Chimpanzee Politics, which told the story of a period of intensified competition among the captive male chimps at the Arnhem Zoo for alpha status, with all the associated perks like first dibs on choice cuisine and sexually receptive females, was actually seen by many as lending credence to these assumptions. But de Waal himself was far from convinced that the primates he studied were invariably, or even predominantly, violent and selfish.

What he observed at the zoo in Arnhem was far from the chaotic and bloody free-for-all it would have been if the chimps took the kind of delight in violence for its own sake that many people imagine them being disposed to. As he pointed out in his second book, Peacemaking among Primates, the violence is almost invariably attended by obvious signs of anxiety on the part of those participating in it, and the tension surrounding any major conflict quickly spreads throughout the entire community. The hierarchy itself is in fact an adaptation that serves as a check on the incessant conflict that would ensue if the relative status of each individual had to be worked out anew every time one chimp encountered another. “Tightly embedded in society,” he writes in The Bonobo and the Atheist, “they respect the limits it puts on their behavior and are ready to rock the boat only if they can get away with it or if so much is at stake that it’s worth the risk” (154). But the most remarkable thing de Waal observed came in the wake of the fights that couldn’t successfully be avoided. Chimps, along with primates of several other species, reliably make reconciliatory overtures toward one another after they’ve come to blows (and bites and scratches). In light of such reconciliations, primate violence begins to look like a momentary, albeit potentially dangerous, readjustment to a regularly peaceful social order rather than an ongoing melee, as individuals with increasing or waning strength negotiate a stable new arrangement.

Part of the enchantment of de Waal’s writing is his judicious and deft balancing of anecdotes about the primates he works with on the one hand and descriptions of controlled studies he and his fellow researchers conduct on the other. In The Bonobo and the Atheist, he strikes a more personal note than he has in any of his previous books, at points stretching the bounds of the popular science genre and crossing into the realm of memoir. This attempt at peeling back the surface of that other veneer, the white-coated scientist’s posture of mechanistic objectivity and impassive empiricism, works best when de Waal is merging tales of his animal experiences with reports on the research that ultimately provides evidence for what was originally no more than an intuition. Discussing a recent, and to most people somewhat startling, experiment pitting the social against the alimentary preferences of a distant mammalian cousin, he recounts,

Despite the bad reputation of these animals, I have no trouble relating to its findings, having kept rats as pets during my college years. Not that they helped me become popular with the girls, but they taught me that rats are clean, smart, and affectionate. In an experiment at the University of Chicago, a rat was placed in an enclosure where it encountered a transparent container with another rat. This rat was locked up, wriggling in distress. Not only did the first rat learn how to open a little door to liberate the second, but its motivation to do so was astonishing. Faced with a choice between two containers, one with chocolate chips and another with a trapped companion, it often rescued its companion first. (142-3)

This experiment, conducted by Inbal Ben-Ami Bartal, Jean Decety, and Peggy Mason, actually got a lot of media coverage; Mason was even interviewed for an episode of NOVA Science NOW where you can watch a video of the rats performing the jailbreak and sharing the chocolate (and you can also see David Pogue being obnoxious). This type of coverage has probably played a role in the shift in public opinion regarding the altruistic propensities of humans and animals. But if there’s one species whose behavior can be said to have undermined the cynicism underlying veneer theory (aside from our best friend the dog, of course), it would have to be de Waal’s leading character, the bonobo.

De Waal’s 1997 book Bonobo: The Forgotten Ape, on which he collaborated with photographer Frans Lanting, introduced this charismatic, peace-loving, sex-loving primate to the masses, and in the process provided behavioral scientists with a new model for what our own ancestors’ social lives might have looked like. Bonobo females dominate the males to the point where zoos have learned never to import a strange male into a new community without the protection of his mother. But for the most part any tensions, even those over food, even those between members of neighboring groups, are resolved through genito-genital rubbing, a behavior that looks an awful lot like sex and often culminates in vocalizations and facial expressions that resemble to a remarkable degree those of humans experiencing orgasm. The implications of bonobos’ hippie-like habits have even reached into politics. After an uncharacteristically ill-researched and ill-reasoned article in the New Yorker by Ian Parker suggested that the apes weren’t as peaceful and erotic as we’d been led to believe, conservatives couldn’t help celebrating. De Waal writes in The Bonobo and the Atheist,

Given that this ape’s reputation has been a thorn in the side of homophobes as well as Hobbesians, the right-wing media jumped with delight. The bonobo “myth” could finally be put to rest, and nature remain red in tooth and claw. The conservative commentator Dinesh D’Souza accused “liberals” of having fashioned the bonobo into their mascot, and he urged them to stick with the donkey. (63)

But most primate researchers think the behavioral differences between chimps and bonobos are pretty obvious. De Waal points out that while violence does occur among the apes on rare occasions, “there are no confirmed reports of lethal aggression among bonobos” (63). Chimps, on the other hand, have been observed doing all kinds of killing. Bonobos also outperform chimps in experiments designed to test their capacity for cooperation, as in the setup that requires two individuals to pull on a rope at the same time in order for either of them to get ahold of food placed atop a plank of wood. (Incidentally, the New Yorker’s track record when it comes to anthropology is suspiciously checkered: disgraced author Patrick Tierney’s discredited book on Napoleon Chagnon, for instance, was originally excerpted in the magazine.)

Bonobos came late to the scientific discussion of what ape behavior can tell us about our evolutionary history. The famous chimp researcher Robert Yerkes, whose name graces the facility de Waal currently directs at Emory University in Atlanta, actually wrote an entire book called Almost Human about what he believed was a rather remarkable chimp. A photograph from that period reveals that it wasn’t a chimp at all. It was a bonobo. Now, as this species is becoming better researched, and with the discovery of fossils like the 4.4-million-year-old Ardipithecus ramidus known as Ardi, a bipedal ape with fangs that are quite small when compared to the lethal daggers sported by chimps, the role of violence in our ancestry is ever more uncertain. De Waal writes,

What if we descend not from a blustering chimp-like ancestor but from a gentle, empathic bonobo-like ape? The bonobo’s body proportions—its long legs and narrow shoulders—seem to perfectly fit the descriptions of Ardi, as do its relatively small canines. Why was the bonobo overlooked? What if the chimpanzee, instead of being an ancestral prototype, is in fact a violent outlier in an otherwise relatively peaceful lineage? Ardi is telling us something, and there may exist little agreement about what she is saying, but I hear a refreshing halt to the drums of war that have accompanied all previous scenarios. (61)

De Waal is well aware of all the behaviors humans engage in that are more emblematic of chimps than of bonobos (in his 2005 book Our Inner Ape, he refers to humans as “the bipolar ape”), but the fact that our genetic relatedness to both species is exactly the same, along with the fact that chimps also have a surprising capacity for peacemaking and empathy, suggests to him that evolution has had plenty of time and plenty of raw material to instill in us the emotional underpinnings of a morality that emerges naturally, without having to be imposed by religion or philosophy. “Rather than having developed morality from scratch through rational reflection,” he writes in The Bonobo and the Atheist, “we received a huge push in the rear from our background as social animals” (17).

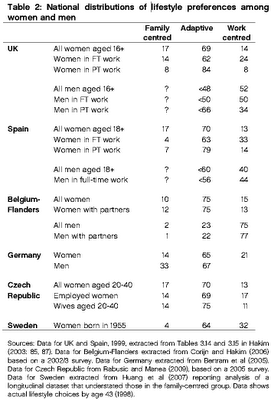

In the eighth and final chapter of The Bonobo and the Atheist, titled “Bottom-Up Morality,” de Waal describes what he believes is an alternative to top-down theories that attempt to derive morals from religion on the one hand and from reason on the other. Invisible beings threatening eternal punishment can frighten us into doing the right thing, and principles of fairness might offer slight nudges in the direction of proper comportment, but we must already have some intuitive sense of right and wrong for either of these belief systems to operate on if they’re to be at all compelling. Many people assume moral intuitions are inculcated in childhood, but experiments like the one that showed rats will come to the aid of distressed companions suggest something deeper, something more ingrained, is involved. De Waal has found that a video of capuchin monkeys demonstrating “inequity aversion,” a natural, intuitive sense of fairness, does a much better job than any charts or graphs at getting past the prejudices of philosophers and economists who want to insist that fairness is too complex a principle for mere monkeys to comprehend. He writes,

This became an immensely popular experiment in which one monkey received cucumber slices while another received grapes for the same task. The monkeys had no trouble performing if both received identical rewards of whatever quality, but rejected unequal outcomes with such vehemence that there could be little doubt about their feelings. I often show their reactions to audiences, who almost fall out of their chairs laughing—which I interpret as a sign of surprised recognition. (232)

What the capuchins do when they see someone else getting a better reward is throw the measly cucumber back at the experimenter and proceed to rattle the cage in agitation. De Waal compares it to the Occupy Wall Street protests. The poor monkeys clearly recognize the insanity of the human they’re working for.

There’s still a long way to travel, however, from helpful rats and protesting capuchins before you get to human morality. But that gap continues to shrink as researchers find new ways to explore the social behaviors of the primates that are even more closely related to us. Chimps, for instance, have been seen taking inequity aversion an important step beyond what monkeys display. Not only will certain individuals refuse to work for lesser rewards; they’ll refuse to work even for the superior rewards if they see their companions aren’t being paid equally. De Waal does acknowledge, though, that an important step still remains between these behaviors and human morality. “I am reluctant to call a chimpanzee a ‘moral being,’” he writes,

This is because sentiments do not suffice. We strive for a logically coherent system and have debates about how the death penalty fits arguments for the sanctity of life, or whether an unchosen sexual orientation can be morally wrong. These debates are uniquely human. There is little evidence that other animals judge the appropriateness of actions that do not directly affect themselves. (17-8)

Moral intuitions can also inspire behaviors that people in modern liberal societies find appallingly immoral. De Waal quotes anthropologist Christopher Boehm on the “special, pejorative moral ‘discount’ applied to cultural strangers—who often are not even considered fully human,” and he goes on to explain that “The more we expand morality’s reach, the more we need to rely on our intellect.” But the intellectual principles must be grounded in the instincts and emotions we evolved as social primates; this is what he means by bottom-up morality or “naturalized ethics” (235).

*****

In locating the foundations of morality in our evolved emotions (propensities we share with primates and even rats), de Waal seems to be taking a firm stand against any need for religion. But he insists throughout the book that this isn’t the case. And, while the idea that people are quite capable of playing fair and treating each other with compassion without any supernatural policing may seem to land him squarely in the same camp as prominent atheists like Richard Dawkins and Christopher Hitchens, whom he calls “neo-atheists,” he contends that they’re just as misguided as, if not more misguided than, the people of faith who believe the rules must be handed down from heaven. “Even though Dawkins cautioned against his own anthropomorphism of the gene,” de Waal wrote all the way back in his 1996 book Good Natured: The Origins of Right and Wrong in Humans and Other Animals, “with the passage of time, carriers of selfish genes became selfish by association” (14). Thus de Waal tries to find some middle ground between religious dogmatists on one side and those who are equally dogmatic in their opposition to religion and equally mistaken in their espousal of veneer theory on the other. “I consider dogmatism a far greater threat than religion per se,” he writes in The Bonobo and the Atheist.

I am particularly curious why anyone would drop religion while retaining the blinkers sometimes associated with it. Why are the “neo-atheists” of today so obsessed with God’s nonexistence that they go on media rampages, wear T-shirts proclaiming their absence of belief, or call for a militant atheism? What does atheism have to offer that’s worth fighting for? (84)

For de Waal, neo-atheism is an empty placeholder of a philosophy, defined not by any positive belief but merely by an obstinately negative attitude toward religion. It’s hard to tell early on in his book if this view is based on any actual familiarity with the books whose titles—The God Delusion, god is not Great—he takes issue with. What is obvious, though, is that he’s trying to appeal to some spirit of moderation so that he might reach an audience who may have already been turned off by the stridency of the debates over religion’s role in society. At any rate, we can be pretty sure that Hitchens, for one, would have had something to say about de Waal’s characterization.

De Waal’s expertise as a primatologist gave him what was in many ways an ideal perspective on the selfish gene debates, as well as on sociobiology more generally, much the way Sarah Blaffer Hrdy’s expertise has done for her. The monkeys and apes de Waal works with are a far cry from the ants and wasps that originally inspired the gene-centered approach to explaining behavior. “There are the bees dying for their hive,” he writes in The Bonobo and the Atheist,

and the millions of slime mold cells that build a single, sluglike organism that permits a few among them to reproduce. This kind of sacrifice was put on the same level as the man jumping into an icy river to rescue a stranger or the chimpanzee sharing food with a whining orphan. From an evolutionary perspective, both kinds of helping are comparable, but psychologically speaking they are radically different. (33)

At the same time, though, de Waal gets to see up close almost every day how similar we are to our evolutionary cousins, and the continuities leave no question as to the wrongheadedness of blank slate ideas about socialization. “The road between genes and behavior is far from straight,” he writes, sounding a note similar to that of the late Stephen Jay Gould, “and the psychology that produces altruism deserves as much attention as the genes themselves.” He goes on to explain,

Mammals have what I call an “altruistic impulse” in that they respond to signs of distress in others and feel an urge to improve their situation. To recognize the need of others, and react appropriately, is really not the same as a preprogrammed tendency to sacrifice oneself for the genetic good. (33)

We can’t discount the role of biology, in other words, but we must keep in mind that genes are at the distant end of a long chain of cause and effect that has countless other inputs before it links to emotion and behavior. De Waal angered both the social constructivists and quite a few of the gene-centered evolutionists, but by now the balanced view his work as a primatologist helped him to arrive at has, for the most part, won the day. Now, in his other role as a scientist who studies the evolution of morality, he wants to strike a similar balance between extremists on both sides of the religious divide. Unfortunately, in this new arena, his perspective isn’t anywhere near as well informed.

The type of religion de Waal points to as evidence that the neo-atheists’ concerns are misguided and excessive is definitely moderate. It’s not even based on any actual beliefs, just some nice ideas and stories adherents enjoy hearing and thinking about in a spirit of play. We have to wonder, though, just how prevalent this New Age, Life-of-Pi type of religion really is. I suspect the passages in The Bonobo and the Atheist discussing it are going to be equally offensive to atheists and to people of actual faith. Here’s one example of the bizarre way he writes about religion:

Neo-atheists are like people standing outside a movie theater telling us that Leonardo DiCaprio didn’t really go down with the Titanic. How shocking! Most of us are perfectly comfortable with the duality. Humor relies on it, too, lulling us into one way of looking at a situation only to hit us over the head with another. To enrich reality is one of the most delightful capacities we have, from pretend play in childhood to visions of an afterlife when we grow older. (294)

He seems to be suggesting that the religious know, on some level, their beliefs aren’t true. “Some realities exist,” he writes, “some we just like to believe in” (294). The problem is that while many readers may enjoy the innuendo about humorless and inveterately over-literal atheists, most believers aren’t joking around—even the non-extremists are more serious than de Waal seems to think.

As someone who’s been reading de Waal’s books for the past seventeen years, someone who wanted to strangle Ian Parker after reading his cheap smear piece in The New Yorker, someone who has admired the great primatologist since my days as an undergrad anthropology student, I experienced the sections of The Bonobo and the Atheist devoted to criticisms of neo-atheism, which make up roughly a quarter of this short book, as soul-crushingly disappointing. And I’ve agonized over how to write this part of the review. The middle path de Waal carves out is between a watered-down religion believers don’t really believe on one side and an egregious postmodern caricature of Sam Harris’s and Christopher Hitchens’s positions on the other. He focuses on Harris because of his book, The Moral Landscape, which explores how we might use science to determine our morals and values instead of religion, but he gives every indication of never having actually read the book and of instead basing his criticisms solely on the book’s reputation among Harris’s most hysterical detractors. And he targets Hitchens because he thinks he has the psychological key to understanding what he refers to as his “serial dogmatism.” But de Waal’s case is so flimsy that a freshman journalism student could demolish it with no more than about ten minutes of internet fact-checking.

De Waal does acknowledge that we should be skeptical of “religious institutions and their ‘primates’,” but he wonders “what good could possibly come from insulting the many people who find value in religion?” (19). This is the tightrope he tries to walk throughout his book. His focus on the purely negative aspect of atheism juxtaposed with his strange conception of the role of belief seems designed to give readers the impression that if the atheists succeed society might actually suffer severe damage. He writes,

Religion is much more than belief. The question is not so much whether religion is true or false, but how it shapes our lives, and what might possibly take its place if we were to get rid of it the way an Aztec priest rips the beating heart out of a virgin. What could fill the gaping hole and take over the removed organ’s functions? (216)

The first problem is that many people who call themselves humanists, as de Waal does, might suggest that there are in fact many things that could fill the gap—science, literature, philosophy, music, cinema, human rights activism, just to name a few. But the second problem is that the militancy of the militant atheists is purely and avowedly rhetorical. In a debate with Hitchens, former British Prime Minister Tony Blair once held up the same straw man that de Waal drags through the pages of his book, the claim that neo-atheists are trying to extirpate religion from society entirely, to which Hitchens replied, “In fairness, no one was arguing that religion should or will die out of the world. All I’m arguing is that it would be better if there was a great deal more by way of an outbreak of secularism” (20:20). What Hitchens is after is an end to the deference automatically afforded religious ideas by dint of their supposed sacredness; religious ideas need to be critically weighed just like any other ideas—and when they are thus weighed they often don’t fare so well, in either logical or moral terms. It’s hard to understand why de Waal would have a problem with this view.

*****

De Waal’s position is even more incoherent with regard to Harris’s arguments about the potential for a science of morality, since they represent an attempt to answer, at least in part, the very question of what might take the place of religion in providing guidance in our lives that he poses again and again throughout The Bonobo and the Atheist. De Waal takes issue first with the book’s title, The Moral Landscape: How Science Can Determine Human Values. The notion that science might determine any aspect of morality suggests to him a top-down approach as opposed to his favored bottom-up strategy that takes “naturalized ethics” as its touchstone. This is, however, unbeknownst to de Waal, a mischaracterization of Harris’s thesis. Rather than engage Harris’s arguments in any direct or meaningful way, de Waal contents himself with following in the footsteps of critics who apply the postmodern strategy of holding the book to account for all the analogies that can be drawn between it and historical evils, no matter how tenuously or tendentiously. De Waal writes, for instance,

While I do welcome a science of morality—my own work is part of it—I can’t fathom calls for science to determine human values (as per the subtitle of Sam Harris’s The Moral Landscape). Is pseudoscience something of the past? Are modern scientists free from moral biases? Think of the Tuskegee syphilis study just a few decades ago, or the ongoing involvement of medical doctors in prisoner torture at Guantanamo Bay. I am profoundly skeptical of the moral purity of science, and feel that its role should never exceed that of morality’s handmaiden. (22)

(Great phrase, that “morality’s handmaiden.”) But Harris never argues that scientists are any more morally pure than anyone else. His argument is that the “science of morality,” to which de Waal proudly contributes, should be applied to the big moral issues our society faces.

The guilt-by-association and guilt-by-historical-analogy tactics on display in The Bonobo and the Atheist extend all the way to that lodestar of postmodernism’s hysterical obsessions. We might hope that de Waal, after witnessing the frenzied insanity of the sociobiology controversy from the front row, would know better. But he doesn’t seem to grasp how toxic this type of rhetoric is to reasoned discourse and honest inquiry. After expressing his bafflement at how science and a naturalistic worldview could inspire good the way religion does (even though his main argument is that such external inspiration to do good is unnecessary), he writes,

It took Adolf Hitler and his henchmen to expose the moral bankruptcy of these ideas. The inevitable result was a precipitous drop of faith in science, especially biology. In the 1970s, biologists were still commonly equated with fascists, such as during the heated protest against “sociobiology.” As a biologist myself, I am glad those acrimonious days are over, but at the same time I wonder how anyone could forget this past and hail science as our moral savior. How did we move from deep distrust to naïve optimism? (22)

Was Nazism born of an attempt to apply science to moral questions? It’s true some people use science in evil ways, but not nearly as commonly as people are directly urged by religion to perpetrate evils like inquisitions or holy wars. When science has directly inspired evil, as in the case of eugenics, the lifespan of the mistake was measurable in years or decades rather than centuries or millennia. Not to minimize the real human costs, but science wins hands down by being self-correcting and, certain individual scientists notwithstanding, undogmatic.

Harris intended for his book to begin a debate he was prepared to actively participate in. But he quickly ran into the problem that postmodern criticisms can’t really be dealt with in any meaningful way. The following long quote from Harris’s response to his battier critics in the Huffington Post will show both that de Waal’s characterization of his argument is way off the mark, and that it is suspiciously unoriginal:

How, for instance, should I respond to the novelist Marilynne Robinson’s paranoid, anti-science gabbling in the Wall Street Journal where she consigns me to the company of the lobotomists of the mid 20th century? Better not to try, I think—beyond observing how difficult it can be to know whether a task is above or beneath you. What about the science writer John Horgan, who was kind enough to review my book twice, once in Scientific American where he tarred me with the infamous Tuskegee syphilis experiments, the abuse of the mentally ill, and eugenics, and once in The Globe and Mail, where he added Nazism and Marxism for good measure? How does one graciously respond to non sequiturs? The purpose of The Moral Landscape is to argue that we can, in principle, think about moral truth in the context of science. Robinson and Horgan seem to imagine that the mere existence of the Nazi doctors counts against my thesis. Is it really so difficult to distinguish between a science of morality and the morality of science? To assert that moral truths exist, and can be scientifically understood, is not to say that all (or any) scientists currently understand these truths or that those who do will necessarily conform to them.

And we have to ask further what alternative source of ethical principles do the self-righteous grandstanders like Robinson and Horgan—and now de Waal—have to offer? In their eagerness to compare everyone to the Nazis, they seem to be deriving their own morality from Fox News.

De Waal makes three objections to Harris’s arguments that are of actual substance, but none of them are anywhere near as devastating to his overall case as de Waal makes out. First, Harris begins with the assumption that moral behaviors lead to “human flourishing,” but this is a presupposed value as opposed to an empirical finding of science—or so de Waal claims. But here’s de Waal himself on a level of morality sometimes seen in apes that transcends one-on-one interactions between individuals:

female chimpanzees have been seen to drag reluctant males toward each other to make up after a fight, while removing weapons from their hands. Moreover, high-ranking males regularly act as impartial arbiters to settle disputes in the community. I take these hints of community concern as a sign that the building blocks of morality are older than humanity, and that we don’t need God to explain how we got to where we are today. (20)

The similarity between the concepts of human flourishing and community concern highlights one of the main areas of confusion de Waal could have avoided by actually reading Harris’s book. The word “determine” in the book’s subtitle, How Science Can Determine Human Values, has two possible meanings. Science can determine values in the sense that it can guide us toward behaviors that will bring about flourishing. But it can also determine our values in the sense of discovering what we already naturally value and hence what conditions need to be met for us to flourish.

De Waal performs a sleight of hand late in The Bonobo and the Atheist, substituting another “utilitarian” for Harris and justifying the trick by pointing out that utilitarians also seek to maximize human flourishing, though Harris never claims to be one. This leads de Waal to object that strict utilitarianism isn’t viable because he’s more likely to direct his resources to his own ailing mother than to any stranger in need, even if those resources would benefit the stranger more. Thus de Waal faults Harris’s ethics for overlooking the role of loyalty in human lives. His third criticism is similar; he worries that utilitarians might infringe on the rights of a minority to maximize flourishing for a majority. But how, given what we know about human nature, could we expect humans to flourish (to feel as though they were flourishing) in a society that didn’t properly honor friendship and the bonds of family? How could humans be happy in a society where they had to constantly fear being sacrificed to the whim of the majority? It is in precisely this effort to discover, or determine, under which circumstances humans flourish that Harris believes science can be of the most help. And as de Waal moves up from his mammalian foundations of morality to more abstract ethical principles, the separation between his approach and Harris’s starts to look suspiciously like a distinction without a difference.

Harris in fact points out that honoring family bonds probably leads to greater well-being on pages seventy-three and seventy-four of The Moral Landscape, and de Waal quotes from page seventy-four himself to chastise Harris for concentrating too much on “the especially low-hanging fruit of conservative Islam” (74). The incoherence of de Waal’s argument (and the carelessness of his research) is on full display here as he first responds to a point about the genital mutilation of young girls by asking, “Isn’t genital mutilation common in the United States, too, where newborn males are circumcised without their consent?” (90). So cutting off the foreskin of a male’s penis is morally equivalent to cutting off a girl’s clitoris? Supposedly, the equivalence implies that there can’t be any reliable way to determine the relative moral status of religious practices. “Could it be that religion and culture interact to the point that there is no universal morality?” Perhaps, but, personally, as a circumcised male, I think this argument is a real howler.

*****

The slick scholarly laziness on display in The Bonobo and the Atheist is just as bad when it comes to the positions, and the personality, of Christopher Hitchens, whom de Waal sees fit to psychoanalyze instead of engaging his arguments in any substantive way—but whose memoir, Hitch-22, he’s clearly never bothered to read. The straw man about the neo-atheists being bent on obliterating religion entirely is, disappointingly, but not surprisingly by this point, just one of several errors and misrepresentations. De Waal’s main argument against Hitchens, that his atheism is just another dogma, just as much a religion as any other, is taken right from the list of standard talking points the most incurious of religious apologists like to recite against him. Theorizing that “activist atheism reflects trauma” (87)—by which he means that people raised under severe religions will grow up to espouse severe ideologies of one form or another—de Waal goes on to suggest that neo-atheism is an outgrowth of “serial dogmatism”:

Hitchens was outraged by the dogmatism of religion, yet he himself had moved from Marxism (he was a Trotskyist) to Greek Orthodox Christianity, then to American Neo-Conservatism, followed by an “antitheist” stance that blamed all of the world’s troubles on religion. Hitchens thus swung from the left to the right, from anti-Vietnam War to cheerleader of the Iraq War, and from pro to contra God. He ended up favoring Dick Cheney over Mother Teresa. (89)

This is truly awful rubbish, and it’s really too bad Hitchens isn’t around anymore to take de Waal to task for it himself. First, this passage allows us to catch out de Waal’s abuse of the term dogma; dogmatism is rigid adherence to beliefs that aren’t open to questioning. The test of dogmatism is whether you’re willing to adjust your views in light of new evidence or changing circumstances; it has nothing to do with how willing or eager you are to debate. What de Waal is labeling dogmatism is what we normally call outspokenness. Second, his facts are simply wrong. For one, though Hitchens was labeled a neocon by some of his fellows on the left simply because he supported the invasion of Iraq, he never considered himself one. When he was asked in an interview for the New Statesman if he was a neoconservative, he responded unequivocally, “I’m not a conservative of any kind.” Finally, can’t someone be for one war and against another, or agree with certain aspects of a religious or political leader’s policies and not others, without being shiftily dogmatic?

De Waal never really goes into much detail about what the “naturalized ethics” he advocates might look like beyond insisting that we should take a bottom-up approach to arriving at them. This evasiveness gives him space to criticize other nonbelievers regardless of how closely their ideas might resemble his own. “Convictions never follow straight from evidence or logic,” he writes. “Convictions reach us through the prism of human interpretation” (109). He takes this somewhat banal observation (but do they really never follow straight from evidence?) as a license to dismiss the arguments of others based on silly psychologizing. “In the same way that firefighters are sometimes stealth arsonists,” he writes, “and homophobes closet homosexuals, do some atheists secretly long for the certitude of religion?” (88). We could of course just as easily turn this Freudian rhetorical trap back against de Waal and his own convictions. Is he a closet dogmatist himself? Does he secretly hold the unconscious conviction that primates are really nothing like humans and that his research is all a big sham?

Christopher Hitchens was another real-life character whose personality shone through his writing, and like Yossarian in Joseph Heller’s Catch-22 he often found himself in a position where he knew being sane would put him at odds with the masses, thus convincing everyone of his insanity. Hitchens particularly identified with the exchange near the end of Heller’s novel in which an officer, Major Danby, says, “But, Yossarian, suppose everyone felt that way,” to which Yossarian replies, “Then I’d certainly be a damned fool to feel any other way, wouldn’t I?” (446). (The title for his memoir came from a word game he and several of his literary friends played with book titles.) It greatly saddens me to see de Waal pitting himself against such a ham-fisted caricature of a man in whom, had he taken the time to actually explore his writings, he would likely have found much to admire. Why did Hitch become such a strong advocate for atheism? He made no secret of his motivations. And de Waal, who faults Harris (wrongly) for leaving loyalty out of his moral equations, just might identify with them. It began when the theocratic dictator of Iran put a hit out on his friend, the author Salman Rushdie, because he deemed one of Rushdie’s books blasphemous. Hitchens writes in Hitch-22,

When the Washington Post telephoned me at home on Valentine’s Day 1989 to ask my opinion about the Ayatollah Khomeini’s fatwah, I felt at once that here was something that completely committed me. It was, if I can phrase it like this, a matter of everything I hated versus everything I loved. In the hate column: dictatorship, religion, stupidity, demagogy, censorship, bullying, and intimidation. In the love column: literature, irony, humor, the individual, and the defense of free expression. Plus, of course, friendship—though I like to think that my reaction would have been the same if I hadn’t known Salman at all. (268)

Suddenly, neo-atheism doesn’t seem like an empty placeholder anymore. To criticize atheists so harshly for having convictions that are too strong, de Waal has to ignore all the societal and global issues religion is on the wrong side of. But when we consider the arguments on each side of the abortion or gay marriage or capital punishment or science education debates, it’s easy to see that neo-atheists are only against religion because they feel it runs counter to the positive values of skeptical inquiry, egalitarian discourse, free society, and the ascendancy of reason and evidence.

De Waal ends The Bonobo and the Atheist with a really corny section in which he imagines how a bonobo would lecture atheists about morality and the proper stance toward religion. “Tolerance of religion,” the bonobo says, “even if religion is not always tolerant in return, allows humanism to focus on what is most important, which is to build a better society based on natural human abilities” (237). Hitchens is of course no longer around to respond to the bonobo, but many of the same issues came up in his debate with Tony Blair (I hope no one reads this as an insult to the former PM), who at one point also argued that religion might be useful in building better societies—look at all the charity work they do for instance. Hitch, already showing signs of physical deterioration from the treatment for the esophageal cancer that would eventually kill him, responds,

The cure for poverty has a name in fact. It’s called the empowerment of women. If you give women some control over the rate at which they reproduce, if you give them some say, take them off the animal cycle of reproduction to which nature and some doctrine, religious doctrine, condemns them, and then if you’ll throw in a handful of seeds perhaps and some credit, the floor, the floor of everything in that village, not just poverty, but education, health, and optimism, will increase. It doesn’t matter—try it in Bangladesh, try it in Bolivia. It works. It works all the time. Name me one religion that stands for that—or ever has. Wherever you look in the world and you try to remove the shackles of ignorance and disease and stupidity from women, it is invariably the clerisy that stands in the way. (23:05)

Later in the debate, Hitch goes on to argue in a way that sounds suspiciously like an echo of de Waal’s challenges to veneer theory and his advocacy for bottom-up morality. He says,

The injunction not to do unto others what would be repulsive if done to yourself is found in the Analects of Confucius if you want to date it—but actually it’s found in the heart of every person in this room. Everybody knows that much. We don’t require divine permission to know right from wrong. We don’t need tablets administered to us ten at a time in tablet form, on pain of death, to be able to have a moral argument. No, we have the reasoning and the moral suasion of Socrates and of our own abilities. We don’t need dictatorship to give us right from wrong. (25:43)

And as a last word in his case and mine I’ll quote this very de Waalian line from Hitch: “There’s actually a sense of pleasure to be had in helping your fellow creature. I think that should be enough” (35:42).

Also read:

TED MCCORMICK ON STEVEN PINKER AND THE POLITICS OF RATIONALITY

And:

NAPOLEON CHAGNON'S CRUCIBLE AND THE ONGOING EPIDEMIC OF MORALIZING HYSTERIA IN ACADEMIA

And:

THE ENLIGHTENED HYPOCRISY OF JONATHAN HAIDT'S RIGHTEOUS MIND

Napoleon Chagnon's Crucible and the Ongoing Epidemic of Moralizing Hysteria in Academia

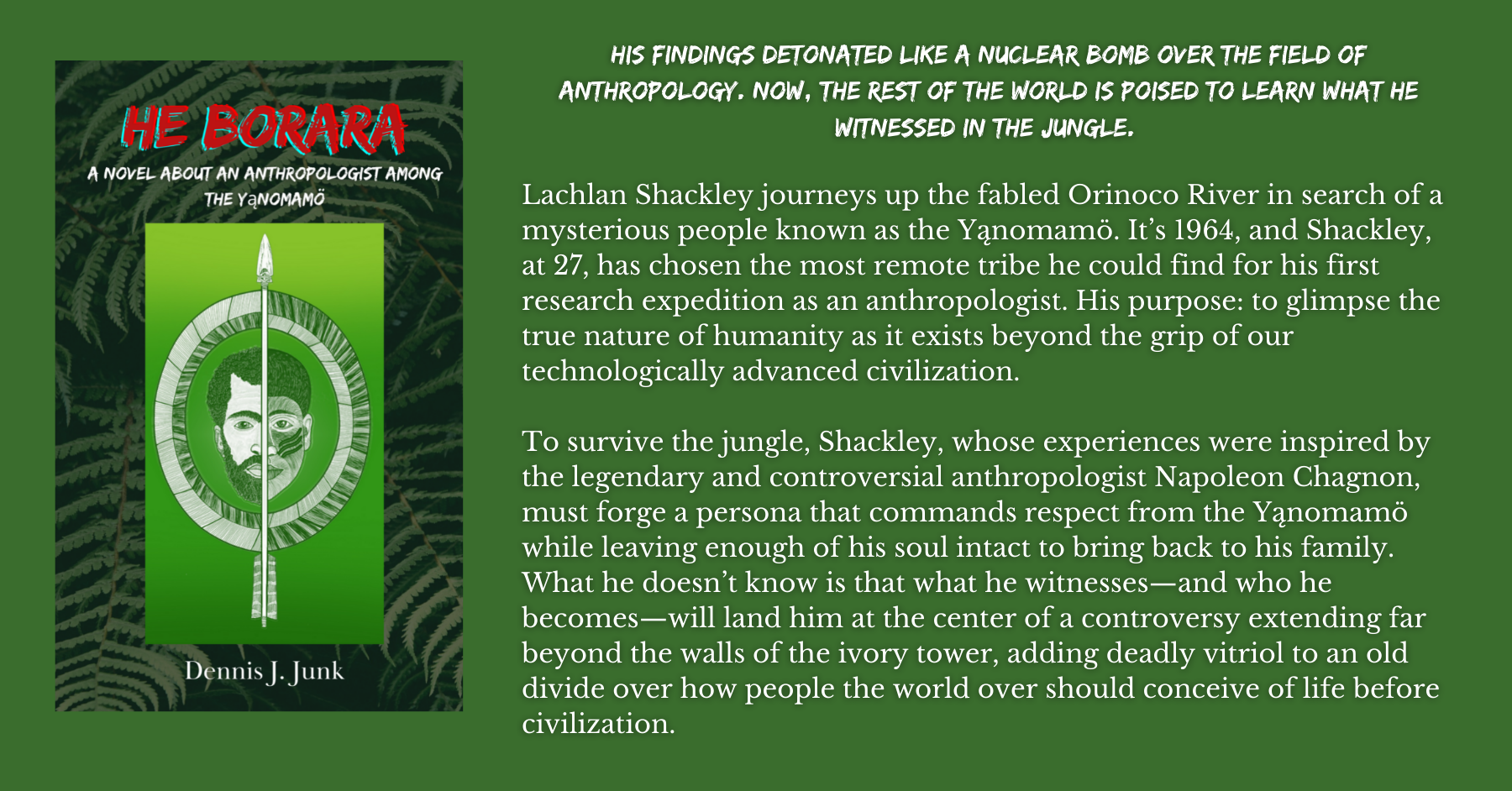

Napoleon Chagnon was targeted by postmodern activists and anthropologists, who trumped up charges against him and hoped to sacrifice his reputation on the altar of social justice. In retrospect, his case looks like an early warning sign of what would come to be called “cancel culture.” Fortunately, Chagnon was no pushover, and there were a lot of people who saw through the lies being spread about him. “Noble Savages” is in part a great adventure story and in part his response to the tragic degradation of the field of anthropology as it succumbs to the lures of ideology.

When Arthur Miller adapted The Crucible, his 1953 play about the Salem Witch Trials, for the 1996 film version, he enjoyed additional freedom to work with the up-close visual dimensions of the tragedy. In one added scene, the elderly and frail George Jacobs, whom we first saw lifting one of his two walking sticks to wave an unsteady greeting to a neighbor, sits before a row of assembled judges as the young Ruth Putnam stands accusing him of assaulting her. The girl, ostensibly shaken from the encounter and frightened lest some further terror ensue, dramatically recounts her ordeal, saying,

He come through my window and then he lay down upon me. I could not take breath. His body crush heavy upon me, and he say in my ear, “Ruth Putnam, I will have your life if you testify against me in court.”

This quote she delivers in a creaky imitation of the old man’s voice. When one of the judges asks Jacobs what he has to say about the charges, he responds with the glaringly obvious objection: “But, your Honor, I must have these sticks to walk with—how may I come through a window?” The problem with this defense, Jacobs comes to discover, is that the judges believe a person can be in one place physically and in another in spirit. This poor tottering old man has no defense against so-called “spectral evidence.” Indeed, as judges in Massachusetts realized the year after Jacobs was hanged, no one really has any defense against spectral evidence. That’s part of the reason why it was deemed inadmissible in their courts, and immediately thereafter convictions for the crime of witchcraft ceased entirely.

Many anthropologists point to the low cost of making accusations as a factor in the evolution of moral behavior. People in small societies like the ones our ancestors lived in for millennia, composed of thirty or forty profoundly interdependent individuals, would have had to balance any payoff that might come from immoral deeds against the damage to their reputations if those deeds were discovered and word of them spread. As the generations turned over and over again, human nature adapted in response to the social enforcement of cooperative norms, and individuals came to experience what we now recognize as our moral emotions: guilt (which is often preëmptive and prohibitive), shame, indignation, and outrage, along with the more positive feelings associated with empathy, compassion, and loyalty.

The legacy of this process of reputational selection persists in our prurient fascination with the misdeeds of others and our frenzied, often sadistic, delectation in the spreading of salacious rumors. What Miller so brilliantly dramatizes in his play is the irony that our compulsion to point fingers, which once created and enforced cohesion in groups of selfless individuals, can in some environments serve as a vehicle for our most viciously selfish and inhuman impulses. This is why it is crucial that any accusation, if we as a society are to take it at all seriously, must provide the accused with some reliable means of acquittal. Charges that can neither be proven nor disproven must be seen as meaningless—and should even be counted as strikes against the reputation of the one who levels them.

While this principle runs into serious complications with crimes that are as inherently difficult to prove as they are horrific, a simple rule proscribing any glib application of morally charged labels is a crucial yet all too commonly overlooked safeguard against unjust calumny. In this age of viral dissemination, the rapidity with which rumors spread, coupled with the absence of any reliable assurance that messages bearing on the reputations of our fellow citizens are valid, demands that we deliberately work to establish cultural norms that hold to account those who make accusations based on insufficient, misleading, or spectral evidence, and that hold to account as well, to only a somewhat lesser degree, those who help propagate rumors without doing due diligence in assessing their credibility.

The commentary attending the publication of anthropologist Napoleon Chagnon’s memoir of his research with the Yanomamö tribespeople in Venezuela calls to mind the insidious “Teach the Controversy” PR campaign spearheaded by intelligent design creationists. Coming out against the argument that students should be made aware of competing views on the value of intelligent design inevitably gives the impression of closed-mindedness or dogmatism. But only a handful of actual scientists have any truck with intelligent design, a dressed-up rehashing of the old God-of-the-Gaps argument based on the logical fallacy of appealing to ignorance; and that ignorance, it so happens, is grossly exaggerated.

Teaching the controversy would therefore falsely imply epistemological equivalence between scientific views on evolution and those that are not-so-subtly religious. Likewise, in the wake of allegations against Chagnon about mistreatment of the people whose culture he made a career of studying, many science journalists and many of his fellow anthropologists still seem reluctant to stand up for him because they fear doing so would make them appear insensitive to the rights and concerns of indigenous peoples. Instead, they take refuge in what they hope will appear a balanced position, even though the evidence on which the accusations rested has proven to be entirely spectral.

Chagnon’s Noble Savages: My Life among Two Dangerous Tribes—the Yanomamö and the Anthropologists is destined to be one of those books that garners commentary by legions of outspoken scholars and impassioned activists who never find the time to actually read it. Science writer John Horgan, for instance, has published two blog posts on Chagnon in recent weeks, and neither of them features a single quote from the book. In the first, he boasts of his resistance to bullying, via email, by five prominent sociobiologists who had caught wind of his assignment to review Patrick Tierney’s book Darkness in El Dorado: How Scientists and Journalists Devastated the Amazon and insisted that he condemn the work and discourage anyone from reading it. Against this pressure, Horgan wrote a positive review in which he repeats several horrific accusations that Tierney makes in the book before going on to acknowledge that the author should have worked harder to provide evidence of the wrongdoings he reports on.

But Tierney went on to become an advocate for Indian rights. And his book’s faults are outweighed by its mass of vivid, damning detail. My guess is that it will become a classic in anthropological literature, sparking countless debates over the ethics and epistemology of field studies.

Horgan probably couldn’t have known at the time (though those five scientists tried to warn him) that giving Tierney credit for prompting debates about Indian rights and ethnographic research methods was a bit like praising Abigail Williams, the original source of accusations of witchcraft in Salem, for sparking discussions about child abuse. But that he still stands by his endorsement today, saying, “I have one major regret concerning my review: I should have noted that Chagnon is a much more subtle theorist of human nature than Tierney and other critics have suggested,” casts serious doubt on his scholarship, not to mention his judgment, however balanced that regret may sound.

What did Tierney falsely accuse Chagnon of? There are over a hundred specific accusations in the book (Chagnon says his friend William Irons flagged 106 [446]), but the most heinous whopper comes in the fifth chapter, titled “Outbreak.” In 1968, Chagnon was helping the geneticist James V. Neel collect blood samples from the Yanomamö—in exchange for machetes—so their DNA could be compared with that of people in industrialized societies. While they were in the middle of this project, a measles epidemic broke out, and Neel had discovered through earlier research that the Indians lacked immunity to this disease, so the team immediately began trying to reach all of the Yanomamö villages to vaccinate everyone before the contagion reached them. Most people who knew about the episode considered what the scientists did heroic (and several investigations now support this view). But Tierney, by creating the appearance of pulling together multiple threads of evidence, weaves together a much different story in which Neel and Chagnon are cast as villains instead of heroes. (The version of the book I’ll quote here is somewhat incoherent because it went through some revisions in attempts to deal with holes in the evidence that were already emerging pre-publication.)

First, Tierney misinterprets some passages from Neel’s books as implying an espousal of eugenic beliefs about the Indians, namely that by remaining closer to nature and thus subject to ongoing natural selection they retain all-around superior health, including better immunity. Next, Tierney suggests that the vaccine Neel chose, Edmonston B, which is usually administered with a drug called gamma globulin to minimize reactions like fevers, is so similar to the measles virus that in the immune-suppressed Indians it actually ended up causing a suite of symptoms that was indistinguishable from full-blown measles. The implication is clear. Tierney writes,

Chagnon and Neel described an effort to “get ahead” of the measles epidemic by vaccinating a ring around it. As I have reconstructed it, the 1968 outbreak had a single trunk, starting at the Ocamo mission and moving up the Orinoco with the vaccinators. Hundreds of Yanomami died in 1968 on the Ocamo River alone. At the time, over three thousand Yanomami lived on the Ocamo headwaters; today there are fewer than two hundred. (69)

At points throughout the chapter, Tierney seems to be backing off the worst of his accusations; he writes, “Neel had no reason to think Edmonston B could become transmissible. The outbreak took him by surprise.” But even in this scenario Tierney suggests serious wrongdoing: “Still, he wanted to collect data even in the midst of a disaster” (82).

Earlier in the chapter, though, Tierney makes a much more serious charge. Pointing to a time when Chagnon showed up at a Catholic mission after having depleted his stores of gamma globulin and nearly run out of Edmonston B, Tierney suggests the shortage of drugs was part of a deliberate plan. “There were only two possibilities,” he writes,

Either Chagnon entered the field with only forty doses of virus; or he had more than forty doses. If he had more than forty, he deliberately withheld them while measles spread for fifteen days. If he came to the field with only forty doses, it was to collect data on a small sample of Indians who were meant to receive the vaccine without gamma globulin. Ocamo was a good choice because the nuns could look after the sick while Chagnon went on with his demanding work. Dividing villages into two groups, one serving as a control, was common in experiments and also a normal safety precaution in the absence of an outbreak. (60)

Thus Tierney implies that Chagnon was helping Neel test his eugenics theory and in the process became complicit in causing an epidemic, maybe deliberately, that killed hundreds of people. Tierney claims he isn’t sure how much Chagnon knew about the experiment; he concedes at one point that “Chagnon showed genuine concern for the Yanomami,” before adding, “At the same time, he moved quickly toward a cover-up” (75).

Near the end of his “Outbreak” chapter, Tierney reports on a conversation with Mark Papania, a measles expert at the Centers for Disease Control and Prevention in Atlanta. According to Tierney, when he ran his hypothesis about how Neel and Chagnon caused the epidemic with the Edmonston B vaccine by Papania, the expert responded, “Sure, it’s possible.” Tierney goes on to say that while Papania informed him there were no documented cases of the vaccine becoming contagious, he also admitted that no studies of adequate sensitivity had been done. “I guess we didn’t look very hard,” Tierney has him saying (80). But evolutionary psychologist John Tooby got a much different answer when he called Papania himself. In an article published in Slate (nearly three weeks before Horgan published his review, incidentally), Tooby writes that the epidemiologist took a very different view of the adequacy of past safety tests from the one Tierney reported:

it turns out that researchers who test vaccines for safety have never been able to document, in hundreds of millions of uses, a single case of a live-virus measles vaccine leading to contagious transmission from one human to another—this despite their strenuous efforts to detect such a thing. If attenuated live virus does not jump from person to person, it cannot cause an epidemic. Nor can it be planned to cause an epidemic, as alleged in this case, if it never has caused one before.

Tierney also cites Samuel Katz, the pediatrician who developed Edmonston B, at a few points in the chapter to support his case. But Katz responded to requests from the press to comment on Tierney’s scenario by saying,

the use of Edmonston B vaccine in an attempt to halt an epidemic was a justifiable, proven and valid approach. In no way could it initiate or exacerbate an epidemic. Continued circulation of these charges is not only unwarranted, but truly egregious.

Tooby included a link to Katz’s response, along with a report from science historian Susan Lindee of her investigation of Neel’s documents disproving many of Tierney’s points. It seems Horgan should’ve paid a bit more attention to those emails he was receiving.

Further investigations have shown that pretty much every aspect of Tierney’s characterization of Neel’s beliefs and research agenda was completely wrong. The report from a task force investigation by the American Society of Human Genetics gives a sense of how Tierney, while giving the impression of having conducted meticulous research, was in fact perpetrating fraud. The report states,

Tierney further suggests that Neel, having recognized that the vaccine was the cause of the epidemic, engineered a cover-up. This is based on Tierney’s analysis of audiotapes made at the time. We have reexamined these tapes and provide evidence to show that Tierney created a false impression by juxtaposing three distinct conversations recorded on two separate tapes and in different locations. Finally, Tierney alleges, on the basis of specific taped discussions, that Neel callously and unethically placed the scientific goals of the expedition above the humanitarian need to attend to the sick. This again is shown to be a complete misrepresentation, by examination of the relevant audiotapes as well as evidence from a variety of sources, including members of the 1968 expedition.

This report was published a couple of years after Tierney’s book hit the shelves. But enough evidence was already available to anyone willing to do due diligence on the credibility of the author and his claims to warrant suspicion, and the fact that the book nonetheless made it onto the shortlist for the National Book Award is indicative of a larger problem.

*******

With the benefit of hindsight and a perspective from outside the debate (though I’ve been following the sociobiology controversy for a decade and a half, I wasn’t aware of Chagnon’s longstanding and personal battles with other anthropologists until after Tierney’s book was published) it seems to me that once Tierney had been caught misrepresenting the evidence in support of such an atrocious accusation his book should have been removed from the shelves, and all his reporting should have been dismissed entirely. Tierney himself should have been made to answer for his offense. But for some reason none of this happened.

The anthropologist Marshall Sahlins, for instance, to whom Chagnon has been a bête noire for decades, brushed off any concern for Tierney’s credibility in his review of Darkness in El Dorado, published a full month after Horgan’s, apparently because he couldn’t resist the opportunity to write about how much he hates his celebrated colleague. Sahlins’s review is titled “Guilty not as Charged,” which is already enough to cast doubt on his capacity for fairness or rationality. Here’s how he sums up the issue of Tierney’s discredited accusation in relation to the rest of the book:

The Kurtzian narrative of how Chagnon achieved the political status of a monster in Amazonia and a hero in academia is truly the heart of Darkness in El Dorado. While some of Tierney’s reporting has come under fire, this is nonetheless a revealing book, with a cautionary message that extends well beyond the field of anthropology. It reads like an allegory of American power and culture since Vietnam.

Sahlins apparently hasn’t read Conrad’s novel Heart of Darkness, or he’d know Chagnon is no Kurtz. And Vietnam? The next paragraph goes into more detail about this “allegory,” as if conscripting Chagnon into service as a symbol of evil somehow established his culpability. To get an idea of how much Chagnon actually had to do with Vietnam, we can look at a passage early in Noble Savages about how disconnected from the outside world he was while doing his field work:

I was vaguely aware when I went into the Yanomamö area in late 1964 that the United States had sent several hundred military advisors to South Vietnam to help train the South Vietnamese army. When I returned to Ann Arbor in 1966 the United States had some two hundred thousand combat troops there. (36)

But Sahlins’s review, as bizarre as it is, is important because it’s representative of the types of arguments Chagnon’s fiercest anthropological critics make against his methods and his theories, but mainly against him personally. In another recent comment on how “The Napoleon Chagnon Wars Flare Up Again,” Barbara J. King betrays a disconcerting and unscholarly complacency about quoting other, rival anthropologists’ words as evidence of Chagnon’s own thinking. Alas, King too is weighing in on the flare-up without having read the book, or anything else by the author it seems. And she’s also at pains to appear fair and balanced, even though the sources she cites against Chagnon are neither, nor are they the least bit scientific. Of Sahlins’s review of Darkness in El Dorado, she writes,

The Sahlins essay from 2000 shows how key parts of Chagnon’s argument have been “dismembered” scientifically. In a major paper published in 1988, Sahlins says, Chagnon left out too many relevant factors that bear on Ya̧nomamö males’ reproductive success to allow any convincing case for a genetic underpinning of violence.

It’s a bit sad that King feels it’s okay to post on a site as popular as NPR and quote a criticism of a study she clearly hasn’t read; she could have downloaded the PDF of Chagnon’s landmark paper “Life Histories, Blood Revenge, and Warfare in a Tribal Population” for free. Did Chagnon claim in the study that it proved violence had a genetic underpinning? It’s difficult to tell what the phrase “genetic underpinning” even means in this context.

To lend further support to Sahlins’s case, King selectively quotes another anthropologist, Jonathan Marks. The lines come from a rant on his blog (I urge you to check it out for yourself if you’re at all suspicious about the aptness of the term rant to describe the post) about a supposed takeover of anthropology by genetic determinism. But King leaves off the really interesting sentence at the end of the remark. Here’s the whole passage explaining why Marks thinks Chagnon is an incompetent scientist:

Let me be clear about my use of the word “incompetent”. His methods for collecting, analyzing and interpreting his data are outside the range of acceptable anthropological practices. Yes, he saw the Yanomamo doing nasty things. But when he concluded from his observations that the Yanomamo are innately and primordially “fierce” he lost his anthropological credibility, because he had not demonstrated any such thing. He has a right to his views, as creationists and racists have a right to theirs, but the evidence does not support the conclusion, which makes it scientifically incompetent.

What Marks is saying here is not that he has evidence of Chagnon doing poor fieldwork; rather, Marks dismisses Chagnon merely because of his sociobiological leanings. Note too that the italicized words in the passage are not quotes. This is important because, along with the false equation of sociobiology with genetic determinism, this type of straw man underlies nearly all of the attacks on Chagnon. Finally, notice how Marks slips into the realm of morality as he tries to traduce Chagnon’s scientific credibility. In case you think the link with creationism and racism is a simple analogy (like the one I used myself at the beginning of this essay), look at how Marks ends his rant:

So on one side you’ve got the creationists, racists, genetic determinists, the Republican governor of Florida, Jared Diamond, and Napoleon Chagnon–and on the other side, you’ve got normative anthropology, and the mother of the President. Which side are you on?

How can we take this at all seriously? And why did King misleadingly quote, on a prominent news site, a criticism that seems level-headed in isolation but in context reveals itself as anything but? I’ll risk another analogy here and point out that Marks’s comments about genetic determinism taking over anthropology are similar in both tone and intellectual sophistication to Glenn Beck’s comments about socialism taking over American politics.

King also links to a review of Noble Savages that was published in the New York Times in February, and this piece is even harsher toward Chagnon. After repeating Tierney’s charge that Neel deliberately caused the 1968 measles epidemic and pointing out that it was disproved, anthropologist Elizabeth Povinelli writes of the American Anthropological Association investigation that,

The committee was split over whether Neel’s fervor for observing the “differential fitness of headmen and other members of the Yanomami population” through vaccine reactions constituted the use of the Yanomamö as a Tuskegee-like experimental population.

Since this allegation has been completely discredited by the American Society of Human Genetics, among others, Povinelli’s repetition of it is irresponsible, as was the Times’s failure to properly vet the facts in the article.

Try as I might to remain detached from either side as I continue to research this controversy (and I’ve never met any of these people), I have to say I found Povinelli’s review deeply offensive. The straw men she erects and the quotes she shamelessly takes out of context, all in the service of an absurdly self-righteous and substanceless smear, allow no room whatsoever for anything answering to the name of compassion for a man who was falsely accused of complicity in an atrocity. And in her zeal to impugn Chagnon, she propagates a colorful and repugnant insult of her own creation, which she misattributes to him. She writes,

Perhaps it’s politically correct to wonder whether the book would have benefited from opening with a serious reflection on the extensive suffering and substantial death toll among the Yanomamö in the wake of the measles outbreak, whether or not Chagnon bore any responsibility for it. Does their pain and grief matter less even if we believe, as he seems to, that they were brutal Neolithic remnants in a land that time forgot? For him, the “burly, naked, sweaty, hideous” Yanomamö stink and produce enormous amounts of “dark green snot.” They keep “vicious, underfed growling dogs,” engage in brutal “club fights” and—God forbid!—defecate in the bush. By the time the reader makes it to the sections on the Yanomamö’s political organization, migration patterns and sexual practices, the slant of the argument is evident: given their hideous society, understanding the real disaster that struck these people matters less than rehabilitating Chagnon’s soiled image.

In other words, Povinelli’s response to Chagnon’s “harrowing” ordeal is effectively to say, Maybe you’re not guilty of genocide, but you’re still guilty for not quitting your anthropology job and becoming a forensic epidemiologist. Anyone who actually reads Noble Savages will see quite clearly that the “slant” Povinelli describes, along with those caricatured “brutal Neolithic remnants,” must have flown in through her window right next to George Jacobs.

Povinelli does characterize one aspect of Noble Savages correctly when she complains about its “Manichean rhetorical structure,” with the bad Rousseauian, Marxist, postmodernist cultural anthropologists—along with the corrupt and PR-obsessed Catholic missionaries—on one side, and the good Hobbesian, Darwinian, scientific anthropologists on the other, though it’s really just the scientific part he’s concerned with. I actually expected to find a more complicated, less black-and-white debate taking place when I began looking into the attacks on Chagnon’s work—and on Chagnon himself. But what I ended up finding was that Chagnon’s description of the division, at least with regard to the anthropologists (I haven’t researched his claims about the missionaries) is spot-on, and Povinelli’s repulsive review is a case in point.

This isn’t to say that there aren’t legitimate scientific disagreements about sociobiology. In fact, as early as his dedication page, Chagnon writes that one of his heroes, E.O. Wilson, is “calling into question some of the most widely accepted views” in his latest book, The Social Conquest of Earth. But what Sahlins, Marks, and Povinelli offer is neither legitimate nor scientific. These commenters really are, as Chagnon suggests, representative of a subset of cultural anthropologists completely given over to a moralizing hysteria. Their scholarship is as dishonest as it is defamatory, their reasoning rests on guilt by free association and on the tossing up and knocking down of the most egregious of straw men, and their tone creates the illusion of moral certainty coupled with a long-suffering exasperation with entrenched, institutionalized evils. For these hysterical moralizers, it seems, any theory of human behavior that involves evolution or biology represents the same kind of threat as witchcraft did to the people of Salem in the 1690s, or as communism did to McCarthyites in the 1950s. To combat this chimerical evil, the presumed righteous ends justify the deceitful means.

As for why Darkness in El Dorado wasn’t dismissed outright, as it should have been, the unavoidable conclusion is that even though it has been established that Chagnon didn’t commit any of the crimes Tierney accused him of, as far as his critics are concerned he may as well have. Somehow cultural anthropologists have come to occupy a bizarre culture of their own, in which charging a colleague with genocide doesn’t seem like a big deal. Before Tierney’s book hit the shelves, two anthropologists, Terence Turner and Leslie Sponsel, co-wrote an email to the American Anthropological Association that was later sent to several journalists. Turner and Sponsel later claimed the message was simply a warning about the “impending scandal” that would result from the publication of Darkness in El Dorado. But the hyperbole and suggestive language make it read more like a publicity notice than a warning. “This nightmarish story—a real anthropological heart of darkness beyond the imagining of even a Josef Conrad (though not, perhaps, a Josef Mengele)”—is it too much to ask of those who are so fond of referencing Joseph Conrad that they actually read his book?—“will be seen (rightly in our view) by the public, as well as most anthropologists, as putting the whole discipline on trial.” As it turned out, though, the only one put on trial by the American Anthropological Association (officially, it was only an “inquiry”) was Napoleon Chagnon.

Chagnon’s old academic rivals, many of whom claim their problem with him stems from the alleged devastating impact of his research on Indians, fail to appreciate the gravity of Tierney’s accusations. Their blasé response to the author being exposed as a fraud gives the impression that their eagerness to participate in the pile-on has little to do with any concern for the Yanomamö people. Instead, they embraced Darkness in El Dorado because it provided good talking points in the campaign against their dreaded nemesis Napoleon Chagnon. Sahlins, for instance, is strikingly cavalier about the personal effects of Tierney’s accusations in the review cited by King and Horgan:

The brouhaha in cyberspace seemed to help Chagnon’s reputation as much as Neel’s, for in the fallout from the latter’s defense many academics also took the opportunity to make tendentious arguments on Chagnon’s behalf. Against Tierney’s brief that Chagnon acted as an anthro-provocateur of certain conflicts among the Yanomami, one anthropologist solemnly demonstrated that warfare was endemic and prehistoric in the Amazon. Such feckless debate is the more remarkable because most of the criticisms of Chagnon rehearsed by Tierney have been circulating among anthropologists for years, and the best evidence for them can be found in Chagnon’s writings going back to the 1960s.

Sahlins goes on to offer his own sinister interpretation of Chagnon’s writings, using the same straw man and guilt-by-free-association techniques common to anthropologists in the grip of moralizing hysteria. But I can’t help wondering why anyone would take a word he says seriously after he suggests that being accused of causing a deadly epidemic helped Neel’s and Chagnon’s reputations.

*******

Marshall Sahlins recently made news by resigning from the National Academy of Sciences in protest against the organization’s election of Chagnon to its membership and its partnerships with the military. In explaining his resignation, Sahlins insists that Chagnon, based on the evidence of his own writings, did serious harm to the people whose culture he studied. Sahlins also complains that Chagnon’s sociobiological ideas about violence are so wrongheaded that they serve to “discredit the anthropological discipline.” To back up his objections, he refers interested parties to the same review of Darkness in El Dorado that King links to in her post.

Though Sahlins explains his moral and intellectual objections separately, he seems to believe that theories of human behavior based on biology are inherently immoral, as if theorizing that violence has “genetic underpinnings” is no different from claiming that violence is inevitable and justifiable. This is why Sahlins can’t discuss Chagnon without reference to Vietnam. He writes in his review,

The ‘60s were the longest decade of the 20th century, and Vietnam was the longest war. In the West, the war prolonged itself in arrogant perceptions of the weaker peoples as instrumental means of the global projects of the stronger. In the human sciences, the war persists in an obsessive search for power in every nook and cranny of our society and history, and an equally strong postmodern urge to “deconstruct” it. For his part, Chagnon writes popular textbooks that describe his ethnography among the Yanomami in the 1960s in terms of gaining control over people.

Sahlins doesn’t provide any citations to back up this charge—he’s quite clearly not the least bit concerned with fairness or solid scholarship—and, based on what Chagnon writes in Noble Savages, this fantasy of “gaining control” originates in the mind of Sahlins, not in the writings of Chagnon.

For instance, Chagnon writes of being made the butt of an elaborate joke several Yanomamö conspired to play on him by giving him fake names for people in their village (like Hairy Cunt, Long Dong, and Asshole). When he mentions these names to people in a neighboring village, they think it’s hilarious. “My face flushed with embarrassment and anger as the word spread around the village and everybody was laughing hysterically.” And this was no minor setback: “I made this discovery some six months into my fieldwork!” (66) Contrary as well to the despicable caricature Povinelli provides, Chagnon writes admiringly of the Yanomamö’s “wicked humor” and of how “They enjoyed duping others, especially the unsuspecting and gullible anthropologist who lived among them” (67). Another gem comes from an episode in which he tries to treat a rather embarrassing fungal infection: “You can’t imagine the hilarious reaction of the Yanomamö watching the resident fieldworker in a most indescribable position trying to sprinkle foot powder onto his crotch, using gravity as a propellant” (143).