READING SUBTLY

This was the domain of my Blogger site from 2009 to 2018, when I moved to this domain and started The Storytelling Ape. The search option should help you find any of the old posts you're looking for.

The Mental Illness Zodiac: Why the DSM 5 Won't Be Anything But More Pseudoscience


Thinking you can diagnose psychiatric disorders using checklists of symptoms means taking for granted a naïve model of the human mind and human behavior. How discouraging to those in emotional distress, or to those doubting their own sanity, that the guides they turn to for help and put their faith in to know what’s best for them embrace this model. The DSM has taken it for granted since its inception, and the latest version, the DSM 5, due out next year, despite all the impediments to practical usage it does away with, despite all the streamlining, and despite all the efforts to adhere to common sense, only perpetuates the mistake. That the diagnostic categories are necessarily ambiguous and can’t be tied to any objective criteria like biological markers has been much discussed, as have the corruptions of the mental health industry, including pharmaceutical companies’ reluctance to publish failed trials for their blockbuster drugs, and clinical researchers who make their livings treating the same disorders they lobby to have included in the list of official diagnoses. Indeed, there’s good evidence that prognoses for mental disorders have actually gotten worse over the past century. What’s not being discussed, however, is the propensity in humans to take on roles, to play parts, even tragic ones, even horrific ones, without being able to recognize they’re doing so.

In his lighthearted, mildly satirical but severely important book on self-improvement 59 Seconds: Change Your Life in Under a Minute, psychologist Richard Wiseman describes an experiment he conducted for the British TV show The People Watchers. A group of students spending an evening in a bar with their friends was given a series of tests, and then they were given access to an open bar. The tests included memorizing a list of numbers, walking along a line on the floor, and catching a ruler dropped by experimenters as quickly as possible. Memory, balance, and reaction time—all areas in which our performance predictably diminishes as we drink. The outcomes of the tests were well in keeping with expectations as they were repeated over the course of the evening. All the students did progressively worse the more they drank. And the effects of the alcohol were consistent throughout the entire group of students. It turns out, however, that only half of them were drinking alcohol.

At the start of the study, Wiseman had given half the participants a blue badge and the other half a red badge. The bartenders poured regular drinks for everyone with red badges, but for those with blue ones they made drinks which looked, smelled, and tasted like their alcoholic counterparts but were actually non-alcoholic. Now, were the students with the blue badges faking their drunkenness? They may have been hamming it up for the cameras, but that would be true of the ones who were actually drinking too. What they were doing instead was taking on the role—you might even say taking on the symptoms—of being drunk. As Wiseman explains,

Our participants believed that they were drunk, and so they thought and acted in a way that was consistent with their beliefs. Exactly the same type of effect has emerged in medical experiments when people exposed to fake poison ivy developed genuine rashes, those given caffeine-free coffee became more alert, and patients who underwent a fake knee operation reported reduced pain from their “healed” tendons. (204)

After being told they hadn’t actually consumed any alcohol, the students in the blue group “laughed, instantly sobered up, and left the bar in an orderly and amused fashion.” But not all the natural role-playing humans engage in is this innocuous and short-lived.

In placebo studies like the one Wiseman conducted, participants are deceived. You could argue that actually drinking a convincing replica of alcohol or taking a realistic-looking pill is the important factor behind the effects. People who seek treatment for psychiatric disorders aren’t tricked in this way; so what would cause them to take on the role associated with, say, depression, or bipolar? But plenty of research shows that pills or potions aren’t necessary. We take on different roles in different settings and circumstances all the time. We act much differently at football games and rock concerts than we do at work or school. These shifts are deliberate, though, and we’re aware of them, at least to some degree, when they occur. But many cues are more subtle. It turns out that just being made aware of the symptoms of a disease can make you suspect that you have it. What’s called Medical Student Syndrome afflicts those studying both medical and psychiatric diagnoses. For the most part, you either have a biological disease or you don’t, so the belief that you have one is contingent on the heightened awareness that comes from studying the symptoms. But is there a significant difference between believing you’re depressed and having depression? The answer, according to checklist diagnosis, is no.

In America, we all know the symptoms of depression because we’re bombarded with commercials, like the one that uses squiggly circle faces to explain that it’s caused by a deficit of the neurotransmitter serotonin—a theory that had already been ruled out by the time that commercial began to air. More insidious though are the portrayals of psychiatric disorders in movies, TV series, or talk shows—more insidious because they embed the role-playing instructions in compelling stories. These shows profess to be trying to raise awareness so more people will get help to end their suffering. They profess to be trying to remove the stigma so people can talk about their problems openly. They profess to be trying to help people cope. But, from a perspective of human behavior that acknowledges the centrality of role-playing to our nature, all these shows are actually doing is shilling for the mental health industry, and they are probably helping to cause much of the suffering they claim to be trying to assuage.

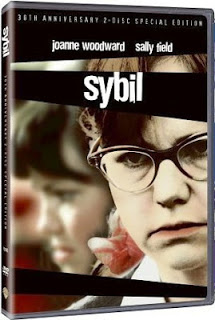

Multiple Personality Disorder, or Dissociative Identity Disorder as it’s now called, was an exceedingly rare diagnosis until the late 1970s and early 1980s when its incidence spiked drastically. Before the spike, there were only ever around a hundred cases. Between 1985 and 1995, there were around 40,000 new cases. What happened? There was a book called Sybil, followed by a miniseries of the same name starring Sally Field that aired in 1976. Much of the real-life story on which Sybil was based has been cast into doubt through further investigation (or has been shown to be completely fabricated). But if you’re one to give credence to the validity of the DID diagnosis (and you shouldn’t), then we can look at another strange behavioral phenomenon whose incidence spiked after a certain movie hit the box office in the 1970s. Prior to the release of The Exorcist, the Catholic church had pretty much consigned the eponymous ritual to the dustbin of history. Lately, though, they’ve had to dust it off.

The Skeptic’s Dictionary says of a TV series devoted to the exorcism ritual, or the play rather, on the Sci-Fi channel,

The exorcists' only prop is a Bible, which is held in one hand while they talk down the devil in very dramatic episodes worthy of Jerry Springer or Jenny Jones. The “possessed” could have been mentally ill, actors, mentally ill actors, drug addicts, mentally ill drug addicts, or they may have been possessed, as the exorcists claimed. All the participants shown being exorcized seem to have seen the movie “The Exorcist” or one of the sequels. They all fell into the role of husky-voiced Satan speaking from the depths, who was featured in the film. The similarities in speech and behavior among the “possessed” has led some psychologists such as Nicholas Spanos to conclude that both “exorcist” and “possessed” are engaged in learned role-playing.

If people can somehow inadvertently fall into the role of having multiple personalities or being possessed by demons, it’s not hard to imagine them hearing about, say, bipolar, briefly worrying that they may have some of the symptoms, and then subsequently taking on the role, even the identity of someone battling bipolar disorder.

Psychologist Dan McAdams theorizes that everyone creates his or her own “personal myth,” which serves to give life meaning and trajectory. The character we play in our own myth is what we recognize as our identity, what we think of when we try to answer the question “Who am I?” in all its profundity. But, as McAdams explains in The Stories We Live By: Personal Myths and the Making of the Self,

Stories are less about facts and more about meanings. In the subjective and embellished telling of the past, the past is constructed—history is made. History is judged to be true or false not solely with respect to its adherence to empirical fact. Rather, it is judged with respect to such narrative criteria as “believability” and “coherence.” There is a narrative truth in life that seems quite removed from logic, science, and empirical demonstration. It is the truth of a “good story.” (28-9)

The problem when it comes to diagnosing psychiatric disorders is that the checklist approach tries to use objective, scientific criteria, when the only answers it will ever get will be in terms of narrative criteria. But why, if people are prone to taking on roles, wouldn’t they take on something pleasant, like kings or princesses?

Since our identities are made up of the stories we tell about ourselves—even to ourselves—it’s important that those stories be compelling. And if nothing ever goes wrong in the stories we tell, well, they’d be pretty boring. As Jonathan Gottschall writes in The Storytelling Animal: How Stories Make Us Human,

This need to see ourselves as the striving heroes of our own epics warps our sense of self. After all, it’s not easy to be a plausible protagonist. Fiction protagonists tend to be young, attractive, smart, and brave—all the things that most of us aren’t. Fiction protagonists usually live interesting lives that are marked by intense conflict and drama. We don’t. Average Americans work retail or cubicle jobs and spend their nights watching protagonists do interesting things on television. (171)

Listen to the ways talk show hosts like Oprah talk about mental disorders, and count how many times in an episode she congratulates the afflicted guests for their bravery in keeping up the struggle. Sometimes, the word hero is even bandied about. Troublingly, the people who cast themselves as heroes spreading awareness, countering stigmas, and helping people cope even like to do really counterproductive things like publishing lists of celebrities who supposedly suffer from the disorder in question. Think you might have bipolar? Kay Redfield Jamison thinks you’re in good company. In her book Touched with Fire, she suggests everyone from rocker Kurt Cobain to fascist Mel Gibson is in that same boatful of heroes.

The reason medical researchers insist a drug must not only be shown to make people feel better but must also be shown to work better than a placebo is that even a sham treatment will make people report feeling better between 60 and 90% of the time, depending on several well-documented factors. What psychiatrists fail to acknowledge is that the placebo dynamic can be turned on its head—you can give people illnesses, especially mental illnesses, merely by suggesting they have the symptoms—or even by increasing their awareness of and attention to those symptoms past a certain threshold. If you tell someone a fact about themselves, they’ll usually believe it, especially if you claim a test or an official diagnostic manual allowed you to determine the fact. This is how frauds convince people they’re psychics. An experiment you can do yourself involves giving horoscopes to a group of people and asking how true they ring. After most of them endorse their reading, reveal that you changed the labels and that they all in fact read the wrong sign’s description.

Psychiatric diagnoses, to be considered at all valid, would need to be double-blind, just like drug trials: the patient shouldn’t know the diagnosis being considered; the rater shouldn’t know the diagnosis being considered; only a final scorer, who has no contact with the patient, should determine the diagnosis. The categories themselves are, however, equally problematic. In order to be properly established as valid, they need to have predictive power. Trials would have to be conducted in which subjects assigned to the prospective categories using double-blind protocols were monitored for long periods of time to see if their behavior adheres to what’s expected of the disorder. For instance, bipolar is supposedly marked by cyclical mood swings. Where are the mood diary studies? (The last time I looked for them was six months ago, so if you know of any, please send a link.) Smartphones offer all kinds of possibilities for monitoring and recording behaviors. Why aren’t they being used to do actual science on mental disorders?

To research the role-playing dimension of mental illness, one (completely unethical) approach would be to design from scratch a really bizarre disorder, publicize its symptoms, maybe make a movie starring Mel Gibson, and monitor incidence rates. Let’s call it Puppy Pregnancy Disorder. We all know dog saliva is chock-full of gametes, right? So, let’s say the disorder is caused when a canine, in a state of sexual arousal of course, bites the victim, thus impregnating her—or even him. Let’s say it affects men too. Wouldn’t that be funny? The symptoms would be abdominal pain, and something just totally out there, like, say, small pieces of puppy feces showing up in your urine. Now, this might be too outlandish, don’t you think? There’s no way we could get anyone to believe this. Unfortunately, I didn’t really make this up. And there are real people in India who believe they have Puppy Pregnancy Disorder.

Also read:

THE STORYTELLING ANIMAL: A LIGHT READ WITH WEIGHTY IMPLICATIONS

And:

THE SELF-TRANSCENDENCE PRICE TAG: A REVIEW OF ALEX STONE'S FOOLING HOUDINI

Why Shakespeare Nauseated Darwin: A Review of Keith Oatley's "Such Stuff as Dreams"

Does practicing science rob one of humanity? Why is it that, if reading fiction trains us to take the perspective of others, English departments are rife with pettiness and selfishness? Keith Oatley is trying to make the study of literature more scientific, and he provides hints to these riddles and many others in his book “Such Stuff as Dreams.”

Late in his life, Charles Darwin lost his taste for music and poetry. “My mind seems to have become a kind of machine for grinding general laws out of large collections of facts,” he laments in his autobiography, and for many of us the temptation to place all men and women of science into a category of individuals whose minds resemble machines more than living and emotionally attuned organs of feeling and perceiving is overwhelming. In the 21st century, we even have a convenient psychiatric diagnosis for people of this sort. Don’t we just assume Sheldon in The Big Bang Theory has autism, or at least the milder version of it known as Asperger’s? It’s probably even safe to assume the show’s writers had the diagnostic criteria for the disorder in mind when they first developed his character. Likewise, Dr. Watson in the BBC’s new and obscenely entertaining Sherlock series can’t resist a reference to the quintessential evidence-crunching genius’s own supposed Asperger’s.

In Darwin’s case, however, the move away from the arts couldn’t have been due to any congenital deficiency in his finer human sentiments because it occurred only in adulthood. He writes,

I have said that in one respect my mind has changed during the last twenty or thirty years. Up to the age of thirty, or beyond it, poetry of many kinds, such as the works of Milton, Gray, Byron, Wordsworth, Coleridge, and Shelley, gave me great pleasure, and even as a schoolboy I took intense delight in Shakespeare, especially in the historical plays. I have also said that formerly pictures gave me considerable, and music very great delight. But now for many years I cannot endure to read a line of poetry: I have tried lately to read Shakespeare, and found it so intolerably dull that it nauseated me. I have also almost lost my taste for pictures or music. Music generally sets me thinking too energetically on what I have been at work on, instead of giving me pleasure.

We could interpret Darwin here as suggesting that casting his mind too doggedly into his scientific work somehow ruined his capacity to appreciate Shakespeare. But, like those of all thinkers and writers of great nuance and sophistication, his ideas are easy to mischaracterize through selective quotation (or, if you’re Ben Stein or any of the other unscrupulous writers behind creationist propaganda like the pseudo-documentary Expelled, you can just lie about what he actually wrote).

One of the most charming things about Darwin is that his writing is often more exploratory than merely informative. He writes in search of answers he has yet to discover. In a wider context, the quote about his mind becoming a machine, for instance, reads,

This curious and lamentable loss of the higher aesthetic tastes is all the odder, as books on history, biographies, and travels (independently of any scientific facts which they may contain), and essays on all sorts of subjects interest me as much as ever they did. My mind seems to have become a kind of machine for grinding general laws out of large collections of facts, but why this should have caused the atrophy of that part of the brain alone, on which the higher tastes depend, I cannot conceive. A man with a mind more highly organised or better constituted than mine, would not, I suppose, have thus suffered; and if I had to live my life again, I would have made a rule to read some poetry and listen to some music at least once every week; for perhaps the parts of my brain now atrophied would thus have been kept active through use. The loss of these tastes is a loss of happiness, and may possibly be injurious to the intellect, and more probably to the moral character, by enfeebling the emotional part of our nature.

His concern for his lost aestheticism notwithstanding, Darwin’s humanism, his humanity, radiates in his writing with a warmth that belies any claim about thinking like a machine, just as the intelligence that shows through it gainsays his humble deprecations about the organization of his mind.

In this excerpt, Darwin, perhaps inadvertently, even manages to put forth a theory of the function of art. Somehow, poetry and music not only give us pleasure and make us happy—enjoying them actually constitutes a type of mental exercise that strengthens our intellect, our emotional awareness, and even our moral character. Novelist and cognitive psychologist Keith Oatley explores this idea of human betterment through aesthetic experience in his book Such Stuff as Dreams: The Psychology of Fiction. This subtitle is notably underwhelming given the long history of psychoanalytic theorizing about the meaning and role of literature. However, whereas psychoanalysis has fallen into disrepute among scientists because of its multiple empirical failures and a general methodological hubris common among its practitioners, the work of Oatley and his team at the University of Toronto relies on much more modest, and at the same time much more sophisticated, scientific protocols. One of the tools these researchers use, The Reading the Mind in the Eyes Test, was in fact first developed to research our new category of people with machine-like minds. What the researchers find bolsters Darwin’s impression that art, at least literary art, functions as a kind of exercise for our faculty of understanding and relating to others.

Reasoning that “fiction is a kind of simulation of selves and their vicissitudes in the social world” (159), Oatley and his colleague Raymond Mar hypothesized that people who spent more time trying to understand fictional characters would be better at recognizing and reasoning about other, real-world people’s states of mind. So they devised a test to assess how much fiction participants in their study read based on how well they could categorize a long list of names according to which ones belonged to authors of fiction, which to authors of nonfiction, and which to non-authors. They then had participants take the Mind-in-the-Eyes Test, which consists of matching close-up pictures of people’s eyes with terms describing their emotional state at the time they were taken. The researchers also had participants take the Interpersonal Perception Test, which has them answer questions about the relationships of people in short video clips featuring social interactions. An example question might be “Which of the two children, or both, or neither, are offspring of the two adults in the clip?” (Imagine Sherlock Holmes taking this test.) As hypothesized, Oatley writes, “We found that the more fiction people read, the better they were at the Mind-in-the-Eyes Test. A similar relationship held, though less strongly, for reading fiction and the Interpersonal Perception Test” (159).

One major shortcoming of this study is that it fails to establish causality; people who are naturally better at reading emotions and making sound inferences about social interactions may gravitate to fiction for some reason. So Mar set up an experiment in which he had participants read either a nonfiction article from an issue of the New Yorker or a work of short fiction chosen to be the same length and require the same level of reading skills. When the two groups then took a test of social reasoning, the ones who had read the short story outperformed the control group. Both groups also took a test of analytic reasoning as a further control; on this variable there was no difference in performance between the groups. The outcome of this experiment, Oatley stresses, shouldn’t be interpreted as evidence that reading one story will increase your social skills in any meaningful and lasting way. But reading habits established over long periods likely explain the more significant differences between individuals found in the earlier study. As Oatley explains,

Readers of fiction tend to become more expert at making models of others and themselves, and at navigating the social world, and readers of non-fiction are likely to become more expert at genetics, or cookery, or environmental studies, or whatever they spend their time reading. Raymond Mar’s experimental study on reading pieces from the New Yorker is probably best explained by priming. Reading a fictional piece puts people into a frame of mind of thinking about the social world, and this is probably why they did better at the test of social reasoning. (160)

Connecting these findings to real-world outcomes, Oatley and his team also found that “reading fiction was not associated with loneliness,” as the stereotype suggests, “but was associated with what psychologists call high social support, being in a circle of people whom participants saw a lot, and who were available to them practically and emotionally” (160).

These studies by the University of Toronto team have received wide publicity, but the people who should be the most interested in them have little or no idea how to go about making sense of them. Most people simply either read fiction or they don’t. If you happen to be of the tribe who studies fiction, then you were probably educated in a way that engendered mixed feelings—profound confusion really—about science and how it works. In his review of The Storytelling Animal, a book in which Jonathan Gottschall incorporates the Toronto team’s findings into the theory that narrative serves the adaptive function of making human social groups more cooperative and cohesive, Adam Gopnik sneers,

Surely if there were any truth in the notion that reading fiction greatly increased our capacity for empathy then college English departments, which have by far the densest concentration of fiction readers in human history, would be legendary for their absence of back-stabbing, competitive ill-will, factional rage, and egocentric self-promoters; they’d be the one place where disputes are most often quickly and amiably resolved by mutual empathetic engagement. It is rare to see a thesis actually falsified as it is being articulated.

Oatley himself is well aware of the strange case of university English departments. He cites a report by Willie van Peer on a small study he did comparing students in the natural sciences to students in the humanities. Oatley explains,

There was considerable scatter, but on average the science students had higher emotional intelligence than the humanities students, the opposite of what was expected; van Peer indicts teaching in the humanities for often turning people away from human understanding towards technical analyses of details. (160)

Oatley suggests in a footnote that an earlier study corroborates van Peer’s indictment. It found that high school students who show more emotional involvement with short stories—the type of connection that would engender greater empathy—did proportionally worse on standard academic assessments of English proficiency. The clear implication of these findings is that the way literature is taught in universities and high schools is long overdue for an in-depth critical analysis.

The idea that literature has the power to make us better people is not new; indeed, it was the very idea on which the humanities were originally founded. We have to wonder what people like Gopnik believe the point of celebrating literature is if not to foster greater understanding and empathy. If you either enjoy it or you don’t, and it has no beneficial effects on individuals or on society in general, why bother encouraging anyone to read? Why bother writing essays about it in the New Yorker? Tellingly, many scholars in the humanities began doubting the power of art to inspire greater humanity around the same time they began questioning the value and promise of scientific progress. Oatley writes,

Part of the devastation of World War II was the failure of German citizens, one of the world’s most highly educated populations, to prevent their nation’s slide into Nazism. George Steiner has famously asserted: “We know that a man can read Goethe or Rilke in the evening, that he can play Bach and Schubert, and go to his day’s work at Auschwitz in the morning.” (164)

Postwar literary theory and criticism have, perversely, tended toward the view that literature and language in general serve as a vessel for passing on all the evils inherent in our western, patriarchal, racist, imperialist culture. The purpose of literary analysis then becomes to sift out these elements and resist them. Unfortunately, such accusatory theories leave unanswered the question of why, if literature inculcates oppressive ideologies, we should bother reading it at all. As van Peer muses in the report Oatley cites, “The Inhumanity of the Humanities,”

Consider the ills flowing from postmodern approaches, the “posthuman”: this usually involves the hegemony of “race/class/gender” in which literary texts are treated with suspicion. Here is a major source of that loss of emotional connection between student and literature. How can one expect a certain humanity to grow in students if they are continuously instructed to distrust authors and texts? (8)

Oatley and van Peer point out, moreover, that the evidence for concentration camp workers having any degree of literary or aesthetic sophistication is nonexistent. According to the best available evidence, most of the greatest atrocities were committed by soldiers who never graduated high school. The suggestion that some type of cozy relationship existed between Nazism and an enthusiasm for Goethe runs afoul of recorded history. As Oatley points out,

Apart from propensity to violence, nationalism, and anti-Semitism, Nazism was marked by hostility to humanitarian values in education. From 1933 onwards, the Nazis replaced the idea of self-betterment through education and reading by practices designed to induce as many as possible into willing conformity, and to coerce the unwilling remainder by justified fear. (165)

Oatley also cites the work of historian Lynn Hunt, whose book Inventing Human Rights traces the original social movement for the recognition of universal human rights to the mid-1700s, when what we recognize today as novels were first being written. Other scholars like Steven Pinker have pointed out too that, while it’s hard not to dwell on tragedies like the Holocaust, even atrocities of that magnitude are resoundingly overmatched by the much larger post-Enlightenment trend toward peace, freedom, and the wider recognition of human rights. It’s sad that one of the lasting legacies of all the great catastrophes of the 20th Century is a tradition in humanities scholarship that has the people who are supposed to be the custodians of our literary heritage hell-bent on teaching us all the ways that literature makes us evil.

Because Oatley is a central figure in what we can only hope is a movement to end the current reign of self-righteous insanity in literary studies, it pains me not to be able to recommend Such Stuff as Dreams to anyone but dedicated specialists. Oatley writes in the preface that he has “imagined the book as having some of the qualities of fiction. That is to say I have designed it to have a narrative flow” (x), and it may simply be that this suggestion set my expectations too high. But the book is poorly edited, the prose is bland and often rolls over itself into graceless tangles, and a couple of the chapters seem like little more than haphazardly collated reports of studies and theories, none exactly off-topic, none completely without interest, but all lacking any central progression or theme. The book often reads more like an annotated bibliography than a story. Oatley’s scholarly range is impressive, however, bearing not just on cognitive science and literature through the centuries but extending as well to the work of important literary theorists. The book is never unreadable, never opaque, but it’s not exactly a work of art in its own right.

Insofar as Such Stuff as Dreams is organized around a central idea, it is that fiction ought to be thought of not as “a direct impression of life,” as Henry James suggests in his famous essay “The Art of Fiction,” and as many contemporary critics—notably James Wood—seem to think of it. Rather, Oatley agrees with Robert Louis Stevenson’s response to James’s essay, “A Humble Remonstrance,” in which Stevenson writes that

Life is monstrous, infinite, illogical, abrupt and poignant; a work of art in comparison is neat, finite, self-contained, rational, flowing, and emasculate. Life imposes by brute energy, like inarticulate thunder; art catches the ear, among the far louder noises of experience, like an air artificially made by a discreet musician. (qtd on pg 8)

Oatley theorizes that stories are simulations, much like dreams, that go beyond mere reflections of life to highlight through defamiliarization particular aspects of life, to cast them in a new light so as to deepen our understanding and experience of them. He writes,

Every true artistic expression, I think, is not just about the surface of things. It always has some aspect of the abstract. The issue is whether, by a change of perspective or by a making the familiar strange, by means of an artistically depicted world, we can see our everyday world in a deeper way. (15)

Critics of high-brow literature like Wood appreciate defamiliarization at the level of description; Oatley is suggesting here though that the story as a whole functions as a “metaphor-in-the-large” (17), a way of not just making us experience as strange some object or isolated feeling, but of reconceptualizing entire relationships, careers, encounters, biographies—what we recognize in fiction as plots. This is an important insight, and it topples verisimilitude from its ascendant position atop the hierarchy of literary values while rendering complaints about clichéd plots potentially moot. Didn’t Shakespeare recycle plots after all?

The theory of fiction as a type of simulation to improve social skills and possibly to facilitate group cooperation is emerging as the frontrunner in attempts to explain narrative interest in the context of human evolution. It is to date, however, impossible to rule out the possibility that our interest in stories is not directly adaptive but instead emerges as a byproduct of other traits that confer more immediate biological advantages. The finding that readers track actions in stories with the same brain regions that activate when they witness similar actions in reality, or when they engage in them themselves, is important support for the simulation theory. But the function of mirror neurons isn’t well enough understood yet for us to determine from this study how much engagement with fictional stories depends on the reader's identifying with the protagonist. Oatley’s theory is more consonant with direct and straightforward identification. He writes,

A very basic emotional process engages the reader with plans and fortunes of a protagonist. This is what often drives the plot and, perhaps, keeps us turning the pages, or keeps us in our seat at the movies or at the theater. It can be enjoyable. In art we experience the emotion, but with it the possibility of something else, too. The way we see the world can change, and we ourselves can change. Art is not simply taking a ride on preoccupations and prejudices, using a schema that runs as usual. Art enables us to experience some emotions in contexts that we would not ordinarily encounter, and to think of ourselves in ways that usually we do not. (118)

Much of this change, Oatley suggests, comes from realizing that we too are capable of behaving in ways that we might not like. “I am capable of this too: selfishness, lack of sympathy” (193), is what he believes we think in response to witnessing good characters behave badly.

Oatley’s theory has a lot to recommend it, but William Flesch’s theory of narrative interest, which suggests we don’t identify with fictional characters directly but rather track them and anxiously hope for them to get whatever we feel they deserve, seems much more plausible in the context of our response to protagonists behaving in surprisingly selfish or antisocial ways. When I see Ed Norton as Tyler Durden beating Angel Face half to death in Fight Club, for instance, I don’t think, hey, that’s me smashing that poor guy’s face with my fists. Instead, I think, what the hell are you doing? I had you pegged as a good guy. I know you’re trying not to be as much of a pushover as you used to be but this is getting scary. I’m anxious that Angel Face doesn’t get too damaged—partly because I imagine that would be devastating to Tyler. And I’m anxious lest this incident be a harbinger of worse behavior to come.

The issue of identification is just one of several interesting questions that lend themselves to further research. Oatley and Mar’s studies are not enormous in terms of sample size, and their subjects were mostly young college students. What types of fiction work the best to foster empathy? What types of reading strategies might we encourage students to apply to reading literature—apart from trying to remove obstacles to emotional connections with characters? But, aside from the Big-Bad-Western Empire myth that currently has humanities scholars grooming successive generations of deluded ideologues to be little more than culture vultures presiding over the creation and celebration of Loser Lit, the other main challenge to transporting literary theory onto firmer empirical grounds is the assumption that the arts in general and literature in particular demand a wholly different type of thinking to create and appreciate than the type that goes into the intricate mechanics and intensely disciplined practices of science.

As Oatley and the Toronto team have shown, people who enjoy fiction tend to have the opposite of autism. And people who do science are, well, Sheldon. Interestingly, though, the writers of The Big Bang Theory, for whatever reason, included some contraindications for a diagnosis of autism or Asperger’s in Sheldon’s character. Like the other scientists in the show, he’s obsessed with comic books, which require at least some understanding of facial expression and body language to follow. As Simon Baron-Cohen, the autism researcher who designed the Mind-in-the-Eyes test, explains, “Autism is an empathy disorder: those with autism have major difficulties in 'mindreading' or putting themselves into someone else’s shoes, imagining the world through someone else’s feelings” (137). Baron-Cohen has coined the term “mindblindness” to describe the central feature of the disorder, and many have posited that the underlying cause is abnormal development of the brain regions devoted to perspective taking and understanding others, what cognitive psychologists refer to as our Theory of Mind.

To follow comic book plotlines, Sheldon would have to make ample use of his own Theory of Mind. He’s also given to absorption in various science fiction shows on TV. If he were only interested in futuristic gadgets, as an autistic would be, he could just as easily get more scientifically plausible versions of them in any number of nonfiction venues. By Baron-Cohen’s definition, Sherlock Holmes can’t possibly have Asperger’s either because his ability to get into other people’s heads is vastly superior to pretty much everyone else’s. As he explains in “The Musgrave Ritual,”

You know my methods in such cases, Watson: I put myself in the man’s place, and having first gauged his intelligence, I try to imagine how I should myself have proceeded under the same circumstances.

What about Darwin, though, that demigod of science who openly professed to being nauseated by Shakespeare? Isn’t he a prime candidate for entry into the surprisingly unpopulated ranks of heartless, data-crunching scientists whose thinking lends itself so conveniently to cooptation by oppressors and committers of wartime atrocities? It turns out that though Darwin held many of the same racist views as nearly all educated men of his time, his ability to empathize across racial and class divides was extraordinary. Darwin was not himself a proponent of Social Darwinism, a theory devised by Herbert Spencer to justify inequality (which has currency still today among political conservatives). And Darwin was also a passionate abolitionist, as is clear in the following excerpts from The Voyage of the Beagle:

On the 19th of August we finally left the shores of Brazil. I thank God, I shall never again visit a slave-country. To this day, if I hear a distant scream, it recalls with painful vividness my feelings, when passing a house near Pernambuco, I heard the most pitiable moans, and could not but suspect that some poor slave was being tortured, yet knew that I was as powerless as a child even to remonstrate.

Darwin is responding to cruelty in a way no one around him at the time would have. And note how deeply it pains him, how profound and keenly felt his sympathy is.

I was present when a kind-hearted man was on the point of separating forever the men, women, and little children of a large number of families who had long lived together. I will not even allude to the many heart-sickening atrocities which I authentically heard of;—nor would I have mentioned the above revolting details, had I not met with several people, so blinded by the constitutional gaiety of the negro as to speak of slavery as a tolerable evil.

The question arises, not whether Darwin had sacrificed his humanity to science, but why he had so much more humanity than many other intellectuals of his day.

It is often attempted to palliate slavery by comparing the state of slaves with our poorer countrymen: if the misery of our poor be caused not by the laws of nature, but by our institutions, great is our sin; but how this bears on slavery, I cannot see; as well might the use of the thumb-screw be defended in one land, by showing that men in another land suffered from some dreadful disease.

And finally we come to the matter of Darwin’s Theory of Mind, which was quite clearly in no way deficient.

Those who look tenderly at the slave owner, and with a cold heart at the slave, never seem to put themselves into the position of the latter;—what a cheerless prospect, with not even a hope of change! picture to yourself the chance, ever hanging over you, of your wife and your little children—those objects which nature urges even the slave to call his own—being torn from you and sold like beasts to the first bidder! And these deeds are done and palliated by men who profess to love their neighbours as themselves, who believe in God, and pray that His Will be done on earth! It makes one's blood boil, yet heart tremble, to think that we Englishmen and our American descendants, with their boastful cry of liberty, have been and are so guilty; but it is a consolation to reflect, that we at least have made a greater sacrifice than ever made by any nation, to expiate our sin. (530-31)

I suspect that Darwin’s distaste for Shakespeare was born of oversensitivity. He doesn't say music failed to move him; he didn’t like it because it made him think “too energetically.” And as aesthetically pleasing as Shakespeare is, existentially speaking, his plays tend to be pretty harsh, even the comedies. When Prospero says, "We are such stuff / as dreams are made on" in Act 4 of The Tempest, he's actually talking not about characters in stories, but about how ephemeral and insignificant real human lives are. But why, beyond some likely nudge from his inherited temperament, was Darwin so sensitive? Why was he so empathetic even to those so vastly different from him? After admitting he’d lost his taste for Shakespeare, paintings, and music, he goes on to say,

On the other hand, novels which are works of the imagination, though not of a very high order, have been for years a wonderful relief and pleasure to me, and I often bless all novelists. A surprising number have been read aloud to me, and I like all if moderately good, and if they do not end unhappily—against which a law ought to be passed. A novel, according to my taste, does not come into the first class unless it contains some person whom one can thoroughly love, and if a pretty woman all the better.

Also read

STORIES, SOCIAL PROOF, & OUR TWO SELVES

And:

LET'S PLAY KILL YOUR BROTHER: FICTION AS A MORAL DILEMMA GAME

And:

[Check out the Toronto group's blog at onfiction.ca]

The Storytelling Animal: a Light Read with Weighty Implications

The Storytelling Animal is not groundbreaking. But the style of the book contributes something both surprising and important. Gottschall could simply tell his readers that stories almost invariably feature some kind of conflict or trouble and then present evidence to support the assertion. Instead, he takes us on a tour from children’s highly gendered, highly trouble-laden play scenarios, through an examination of the most common themes enacted in dreams, through some thought experiments on how intensely boring so-called hyperrealism, or the rendering of real life as it actually occurs, in fiction would be. The effect is that we actually feel how odd it is to devote so much of our lives to obsessing over anxiety-inducing fantasies fraught with looming catastrophe.

A review of Jonathan Gottschall's The Storytelling Animal: How Stories Make Us Human

Vivian Paley, like many other preschool and kindergarten teachers in the 1970s, was disturbed by how her young charges always separated themselves by gender at playtime. She was further disturbed by how closely the play of each gender group hewed to the old stereotypes about girls and boys. Unlike most other teachers, though, Paley tried to do something about it. Her 1984 book Boys and Girls: Superheroes in the Doll Corner demonstrates in microcosm how quixotic social reforms inspired by the assumption that all behaviors are shaped solely by upbringing and culture can be. Eventually, Paley realized that it wasn’t the children who needed to learn new ways of thinking and behaving, but herself. What happened in her classrooms in the late 70s, developmental psychologists have reliably determined, is the same thing that happens when you put kids together anywhere in the world. As Jonathan Gottschall explains,

Dozens of studies across five decades and a multitude of cultures have found essentially what Paley found in her Midwestern classroom: boys and girls spontaneously segregate themselves by sex; boys engage in more rough-and-tumble play; fantasy play is more frequent in girls, more sophisticated, and more focused on pretend parenting; boys are generally more aggressive and less nurturing than girls, with the differences being present and measurable by the seventeenth month of life. (39)

Paley’s study is one of several you probably wouldn’t expect to find discussed in a book about our human fascination with storytelling. But, as Gottschall makes clear in The Storytelling Animal: How Stories Make Us Human, there really aren’t many areas of human existence that aren’t relevant to a discussion of the role stories play in our lives. Those rowdy boys in Paley’s classes were playing recognizable characters from current action and sci-fi movies, and the fantasies of the girls were right out of Grimm’s fairy tales (it’s easy to see why people might assume these cultural staples were to blame for the sex differences). And the play itself was structured around one of the key ingredients—really the key ingredient—of any compelling story, trouble, whether in the form of invading pirates or people trying to poison babies.

The Storytelling Animal is the book to start with if you have yet to cut your teeth on any of the other recent efforts to bring the study of narrative into the realm of cognitive and evolutionary psychology. Gottschall covers many of the central themes of this burgeoning field without getting into the weedier territories of game theory or selection at multiple levels. While readers accustomed to more technical works may balk at wading through all the author’s anecdotes about his daughters, Gottschall’s keen sense of measure and the light touch of his prose keep the book from getting bogged down in frivolousness. This applies as well to the sections in which he succumbs to the temptation any writer faces when trying to explain one or another aspect of storytelling by making a few forays into penning abortive, experimental plots of his own.

None of the central theses of The Storytelling Animal is groundbreaking. But the style and layout of the book contribute something both surprising and important. Gottschall could simply tell his readers that stories almost invariably feature some kind of conflict or trouble and then present evidence to support the assertion, the way most science books do. Instead, he takes us on a tour from children’s highly gendered, highly trouble-laden play scenarios, through an examination of the most common themes enacted in dreams—which contra Freud are seldom centered on wish-fulfillment—through some thought experiments on how intensely boring so-called hyperrealism, or the rendering of real life as it actually occurs, in fiction would be (or actually is, if you’ve read any of D.F. Wallace’s last novel about an IRS clerk). The effect is that instead of simply having a new idea to toss around we actually feel how odd it is to devote so much of our lives to obsessing over anxiety-inducing fantasies fraught with looming catastrophe. And we appreciate just how integral story is to almost everything we do.

This gloss of Gottschall’s approach gives a sense of what is truly original about The Storytelling Animal—it doesn’t seal off narrative as discrete from other features of human existence but rather shows how stories permeate every aspect of our lives, from our dreams to our plans for the future, even our sense of our own identity. In a chapter titled “Life Stories,” Gottschall writes,

This need to see ourselves as the striving heroes of our own epics warps our sense of self. After all, it’s not easy to be a plausible protagonist. Fiction protagonists tend to be young, attractive, smart, and brave—all of the things that most of us aren’t. Fiction protagonists usually live interesting lives that are marked by intense conflict and drama. We don’t. Average Americans work retail or cubicle jobs and spend their nights watching protagonists do interesting things on television, while they eat pork rinds dipped in Miracle Whip. (171)

If you find this observation a tad unsettling, imagine it situated on a page underneath a mug shot of John Wayne Gacy with a caption explaining how he thought of himself “more as a victim than as a perpetrator.” For the most part, though, stories follow an easily identifiable moral logic, which Gottschall demonstrates with a short plot of his own based on the hypothetical situations Jonathan Haidt designed to induce moral dumbfounding. This almost inviolable moral underpinning of narratives suggests to Gottschall that one of the functions of stories is to encourage a sense of shared values and concern for the wider community, a role similar to the one D.S. Wilson sees religion as having played, and continuing to play, in human evolution.

Though Gottschall stays away from the inside baseball stuff for the most part, he does come down firmly on one issue in opposition to at least one of the leading lights of the field. Gottschall imagines a future “exodus” from the real world into virtual story realms that are much closer to the holodecks of Star Trek than to current World of Warcraft interfaces. The assumption here is that people’s emotional involvement with stories results from audience members imagining themselves to be the protagonist. But interactive videogames are probably much closer to actual wish-fulfillment than the more passive approaches to attending to a story—hence the god-like powers and grandiose speechifying.

William Flesch challenges the identification theory in his own (much more technical) book Comeuppance. He points out that films that have experimented with a first-person approach to camera work failed to capture audiences (think of the complicated contraption that filmed Will Smith’s face as he was running from the zombies in I Am Legend). Flesch writes, “If I imagined I were a character, I could not see her face; thus seeing her face means I must have a perspective on her that prevents perfect (naïve) identification” (16). One of the ways we sympathize with others, though, is to mirror them—to feel, at least to some degree, their pain. That makes the issue a complicated one. Flesch believes our emotional involvement comes not from identification but from a desire to see virtuous characters come through the troubles of the plot unharmed, vindicated, maybe even rewarded. Attending to a story therefore entails tracking characters' interactions to see if they are in fact virtuous, then hoping desperately to see their virtue rewarded.

Gottschall does his best to avoid dismissing the typical obsessive Larper (live-action role player) as the “stereotypical Dungeons and Dragons player” who “is a pimply, introverted boy who isn’t cool and can’t play sports or attract girls” (190). And he does his best to end his book on an optimistic note. But the exodus he writes about may be an example of another phenomenon he discusses. First the optimism:

Humans evolved to crave story. This craving has, on the whole, been a good thing for us. Stories give us pleasure and instruction. They simulate worlds so we can live better in this one. They help bind us into communities and define us as cultures. Stories have been a great boon to our species. (197)

But he then makes an analogy with food cravings, which likewise evolved to serve a beneficial function yet in the modern world are wreaking havoc with our health. Just as there is junk food, so there is such a thing as “junk story,” possibly leading to what Brian Boyd, another luminary in evolutionary criticism, calls a “mental diabetes epidemic” (198). In the context of America’s current education woes, and with how easy it is to conjure images of glazy-eyed zombie students, the idea that video games and shows like Jersey Shore are “the story equivalent of deep-fried Twinkies” (197) makes an unnerving amount of sense.

Here, as in the section on how our personal histories are more fictionalized rewritings than accurate recordings, Gottschall manages to achieve something the playful tone and off-handed experimentation don't prepare you for. The surprising accomplishment of this unassuming little book (200 pages) is that it never stops being a light read even as it takes on discoveries with extremely weighty implications. The temptation to eat deep-fried Twinkies is only going to get more powerful as story-delivery systems become more technologically advanced. Might we have already begun the zombie apocalypse without anyone noticing—and, if so, are there already heroes working to save us we won’t recognize until long after the struggle has ended and we’ve begun weaving its history into a workable narrative, a legend?

Also read:

WHAT IS A STORY? AND WHAT ARE YOU SUPPOSED TO DO WITH ONE?

And:

HOW TO GET KIDS TO READ LITERATURE WITHOUT MAKING THEM HATE IT

The Enlightened Hypocrisy of Jonathan Haidt's Righteous Mind

Jonathan Haidt extends an olive branch to conservatives by acknowledging their morality has more dimensions than the morality of liberals. But is he mistaking what’s intuitive for what’s right? A critical, yet admiring review of The Righteous Mind.

A Review of Jonathan Haidt's new book, The Righteous Mind: Why Good People Are Divided by Politics and Religion

Back in the early 1950s, Muzafer Sherif and his colleagues conducted a now-infamous experiment that validated the central premise of Lord of the Flies. Two groups of 12-year-old boys were brought to a camp called Robbers Cave in southern Oklahoma where they were observed by researchers as the members got to know each other. Each group, unaware at first of the other’s presence at the camp, spontaneously formed a hierarchy, and they each came up with a name for themselves, the Eagles and the Rattlers. That was the first stage of the study. In the second stage, the two groups were gradually made aware of each other’s presence, and then they were pitted against each other in several games like baseball and tug-o-war. The goal was to find out if animosity would emerge between the groups. This phase of the study had to be brought to an end after the groups began staging armed raids on each other’s territory, wielding socks they’d filled with rocks. Prepubescent boys, this and several other studies confirm, tend to be highly tribal.

So do conservatives.

This is what University of Virginia psychologist Jonathan Haidt heroically avoids saying explicitly for the entirety of his new 318-page, heavily endnoted The Righteous Mind: Why Good People Are Divided by Politics and Religion. In the first of three parts, he takes on ethicists like John Stuart Mill and Immanuel Kant, along with the so-called New Atheists like Sam Harris and Richard Dawkins, because, as he says in a characteristically self-undermining pronouncement, “Anyone who values truth should stop worshipping reason” (89). Intuition, Haidt insists, is more worthy of focus. In part two, he lays out evidence from his own research showing that all over the world judgments about behaviors rely on a total of six intuitive dimensions, all of which served some ancestral, adaptive function. Conservatives live in “moral matrices” that incorporate all six, while liberal morality rests disproportionally on just three. At times, Haidt intimates that more dimensions is better, but then he explicitly disavows that position. He is, after all, a liberal himself. In part three, he covers some of the most fascinating research to emerge from the field of human evolutionary anthropology over the past decade and a half, concluding that tribalism emerged from group selection and that without it humans never would have become, well, human. Again, the point is that tribal morality—i.e. conservatism—cannot be all bad.

One of Haidt’s goals in writing The Righteous Mind, though, was to improve understanding on each side of the central political divide by exploring, and even encouraging an appreciation for, the moral psychology of those on the rival side. Tribalism can’t be all bad—and yet we need much less of it in the form of partisanship. “My hope,” Haidt writes in the introduction, “is that this book will make conversations about morality, politics, and religion more common, more civil, and more fun, even in mixed company” (xii). Later he identifies the crux of his challenge, “Empathy is an antidote to righteousness, although it’s very difficult to empathize across a moral divide” (49). There are plenty of books by conservative authors which gleefully point out the contradictions and errors in the thinking of naïve liberals, and there are plenty by liberals returning the favor. What Haidt attempts is a willful disregard of his own politics for the sake of transcending the entrenched divisions, even as he’s covering some key evidence that forms the basis of his beliefs. Not surprisingly, he gives the impression at several points throughout the book that he’s either withholding the conclusions he really draws from the research or exercising great discipline in directing his conclusions along paths amenable to his agenda of bringing about greater civility.

Haidt’s focus is on intuition, so he faces the same challenge Daniel Kahneman did in writing Thinking, Fast and Slow: how to convey all these different theories and findings in a book people will enjoy reading from first page to last? Kahneman’s attempt was unsuccessful, but his encyclopedic book is still readable because its topic is so compelling. Haidt’s approach is to discuss the science in the context of his own story of intellectual development. The product reads like a postmodern hero’s journey in which the unreliable narrator returns right back to where he started, but with a heightened awareness of how small his neighborhood really is. It’s a riveting trip down the rabbit hole of self-reflection where the distinction between is and ought gets blurred and erased and reinstated, as do the distinctions between intuition and reason, and even self and other. Since, as Haidt reports, liberals tend to score higher on the personality trait called openness to new ideas and experiences, he seems to have decided on a strategy of uncritically adopting several points of conservative rhetoric—like suggesting liberals are out-of-touch with most normal people—in order to subtly encourage less open members of his audience to read all the way through. Who, after all, wants to read a book by a liberal scientist pointing out all the ways conservatives go wrong in their thinking?

The Elephant in the Room

Haidt’s first move is to challenge the primacy of thinking over intuiting. If you’ve ever debated someone into a corner, you know simply demolishing the reasons behind a position will pretty much never be enough to change anyone’s mind. Citing psychologist Tom Gilovich, Haidt explains that when we want to believe something, we ask ourselves, “Can I believe it?” We begin a search, “and if we find even a single piece of pseudo-evidence, we can stop thinking. We now have permission to believe. We have justification, in case anyone asks.” But if we don’t like the implications of, say, global warming, or the beneficial outcomes associated with free markets, we ask a different question:

when we don’t want to believe something, we ask ourselves, “Must I believe it?” Then we search for contrary evidence, and if we find a single reason to doubt the claim, we can dismiss it. You only need one key to unlock the handcuffs of must. Psychologists now have file cabinets full of findings on “motivated reasoning,” showing the many tricks people use to reach the conclusions they want to reach. (84)

Haidt’s early research was designed to force people into making weak moral arguments so that he could explore the intuitive foundations of judgments of right and wrong. When presented with stories involving incest, or eating the family dog, which in every case were carefully worded to make it clear no harm would result to anyone—the incest couldn’t result in pregnancy; the dog was already dead—“subjects tried to invent victims” (24). It was clear that they wanted there to be a logical case based on somebody getting hurt so they could justify their intuitive answer that a wrong had been done.

They said things like ‘I know it’s wrong, but I just can’t think of a reason why.’ They seemed morally dumbfounded—rendered speechless by their inability to explain verbally what they knew intuitively. These subjects were reasoning. They were working quite hard reasoning. But it was not reasoning in search of truth; it was reasoning in support of their emotional reactions. (25)

Reading this section, you get the sense that people come to their beliefs about the world and how to behave in it by asking the same three questions they’d ask before deciding on a t-shirt: how does it feel, how much does it cost, and how does it make me look? Quoting political scientist Don Kinder, Haidt writes, “Political opinions function as ‘badges of social membership.’ They’re like the array of bumper stickers people put on their cars showing the political causes, universities, and sports teams they support” (86)—or like the skinny jeans showing everybody how hip you are.

Kahneman uses the metaphor of two systems to explain the workings of the mind. System 1, intuition, does most of the work most of the time. System 2 takes a lot more effort to engage and can never manage to operate independently of intuition. Kahneman therefore proposes educating your friends about the common intuitive mistakes—because you’ll never recognize them yourself. Haidt uses the metaphor of an intuitive elephant and a cerebrating rider. He first used this image for an earlier book on happiness, so the use of the GOP mascot was accidental. But because of the more intuitive nature of conservative beliefs it’s appropriate. Far from saying that Republicans need to think more, though, Haidt emphasizes the point that rational thought is never really rational and never anything but self-interested. He argues,

the rider acts as the spokesman for the elephant, even though it doesn’t necessarily know what the elephant is really thinking. The rider is skilled at fabricating post hoc explanations for whatever the elephant has just done, and it is good at finding reasons to justify whatever the elephant wants to do next. Once human beings developed language and began to use it to gossip about each other, it became extremely valuable for elephants to carry around on their backs a full-time public relations firm. (46)

The futility of trying to avoid motivated reasoning provides Haidt some justification of his own to engage in what can only be called pandering. He cites cultural psychologists Joe Henrich, Steve Heine, and Ara Norenzayan, who argued in their 2010 paper “The Weirdest People in the World?” that researchers need to do more studies with culturally diverse subjects. Haidt commandeers the acronym WEIRD (Western, educated, industrialized, rich, and democratic) and applies it somewhat derisively for most of his book, even though it applies both to him and to his scientific endeavors. Of course, he can’t argue that what’s popular is necessarily better. But he manages to convey that attitude implicitly, even though he can’t really share the attitude himself.

Haidt is at his best when he’s synthesizing research findings into a holistic vision of human moral nature; he’s at his worst, his cringe-inducing worst, when he tries to be polemical. He succumbs to his most embarrassingly hypocritical impulses in what are transparently intended to be concessions to the religious and the conservative. WEIRD people are more apt to deny their intuitive, judgmental impulses—except where harm or oppression are involved—and insist on the fair application of governing principles derived from reasoned analysis. But apparently there’s something wrong with this approach:

Western philosophy has been worshipping reason and distrusting the passions for thousands of years. There’s a direct line running from Plato through Immanuel Kant to Lawrence Kohlberg. I’ll refer to this worshipful attitude throughout this book as the rationalist delusion. I call it a delusion because when a group of people make something sacred, the members of the cult lose the ability to think clearly about it. (28)

This is disingenuous. For one thing, he doesn’t refer to the rationalist delusion throughout the book; it only shows up one other time. Both instances implicate the New Atheists. Haidt coins the term rationalist delusion in response to Dawkins’s The God Delusion. An atheist himself, Haidt is throwing believers a bone. To make this concession, though, he’s forced to seriously muddle his argument. “I’m not saying,” he insists,

we should all stop reasoning and go with our gut feelings. Gut feelings are sometimes better guides than reasoning for making consumer choices and interpersonal judgments, but they are often disastrous as a basis for public policy, science, and law. Rather, what I’m saying is that we must be wary of any individual’s ability to reason. We should see each individual as being limited, like a neuron. (90)

As far as I know, neither Harris nor Dawkins has ever declared himself dictator of reason—nor, for that matter, did Mill or Rawls (Hitchens might have). Haidt, in his concessions, is guilty of making points against arguments that were never made. He goes on to make a point similar to Kahneman’s.

We should not expect individuals to produce good, open-minded, truth-seeking reasoning, particularly when self-interest or reputational concerns are in play. But if you put individuals together in the right way, such that some individuals can use their reasoning powers to disconfirm the claims of others, and all individuals feel some common bond or shared fate that allows them to interact civilly, you can create a group that ends up producing good reasoning as an emergent property of the social system. (90)

What Haidt probably realizes but isn’t saying is that the environment he’s describing is a lot like scientific institutions in academia. In other words, if you hang out in them, you’ll be WEIRD.

A Taste for Self-Righteousness

The divide over morality can largely be reduced to the differences between the urban educated and the poor not-so-educated. As Haidt says of his research in South America, “I had flown five thousand miles south to search for moral variation when in fact there was more to be found a few blocks west of campus, in the poor neighborhood surrounding my university” (22). One of the major differences he and his research assistants serendipitously discovered was that educated people think it’s normal to discuss the underlying reasons for moral judgments while everyone else in the world—who isn’t WEIRD—thinks it’s odd:

But what I didn’t expect was that these working-class subjects would sometimes find my request for justifications so perplexing. Each time someone said that the people in a story had done something wrong, I asked, “Can you tell me why that was wrong?” When I had interviewed college students on the Penn campus a month earlier, this question brought forth their moral justifications quite smoothly. But a few blocks west, this same question often led to long pauses and disbelieving stares. Those pauses and stares seemed to say,

You mean you don’t know why it’s wrong to do that to a chicken? I have to explain it to you? What planet are you from? (95)

The Penn students “were unique in their unwavering devotion to the ‘harm principle,’” Mill’s dictum that laws are only justified when they prevent harm to citizens. Haidt quotes one of the students as saying, “It’s his chicken, he’s eating it, nobody is getting hurt” (96). (You don’t want to know what he did before cooking it.)

Having spent a little bit of time with working-class people, I can make a point that Haidt overlooks: they weren’t just looking at him as if he were an alien; they were judging him. In their minds, he was wrong just to ask the question. The really odd thing is that even though Haidt is the one asking the questions, he seems at points throughout The Righteous Mind to agree that we shouldn’t ask questions like that:

There’s more to morality than harm and fairness. I’m going to try to convince you that this principle is true descriptively—that is, as a portrait of the moralities we see when we look around the world. I’ll set aside the question of whether any of these alternative moralities are really good, true, or justifiable. As an intuitionist, I believe it is a mistake to even raise that emotionally powerful question until we’ve calmed our elephants and cultivated some understanding of what such moralities are trying to accomplish. It’s just too easy for our riders to build a case against every morality, political party, and religion that we don’t like. So let’s try to understand moral diversity first, before we judge other moralities. (98)

But he’s already been busy judging people who base their morality on reason, taking them to task for worshipping it. And while he’s expending so much effort to hold back his own judgments he’s being judged by those whose rival conceptions he’s trying to understand. His open-mindedness and disciplined restraint are as quintessentially liberal as they are unilateral.

In the book’s first section, Haidt recounts his education and his early research into moral intuition. The second section is the story of how he developed his Moral Foundations Theory. It begins with his voyage to Bhubaneswar, the capital of Orissa in India. He went to conduct experiments similar to those he’d already been doing in the Americas. “But these experiments,” he writes, “taught me little in comparison to what I learned just from stumbling around the complex social web of a small Indian city and then talking with my hosts and advisors about my confusion.” It was an earlier account of this sojourn Haidt had written for the online salon The Edge that first piqued my interest in his work and his writing. In both, he talks about his two “incompatible identities.”

On one hand, I was a twenty-nine-year-old liberal atheist with very definite views about right and wrong. On the other hand, I wanted to be like those open-minded anthropologists I had read so much about and had studied with. (101)

The people he meets in India are similar in many ways to American conservatives. “I was immersed,” Haidt writes, “in a sex-segregated, hierarchically stratified, devoutly religious society, and I was committed to understanding it on its own terms, not on mine” (102). The conversion to what he calls pluralism doesn’t lead to any realignment of his politics. But supposedly for the first time he begins to feel and experience the appeal of other types of moral thinking. He could see why protecting physical purity might be fulfilling. This is part of what's known as the “ethic of divinity,” and it was missing from his earlier way of thinking. He also began to appreciate certain aspects of the social order, not to the point of advocating hierarchy or rigid sex roles but seeing value in the complex network of interdependence.

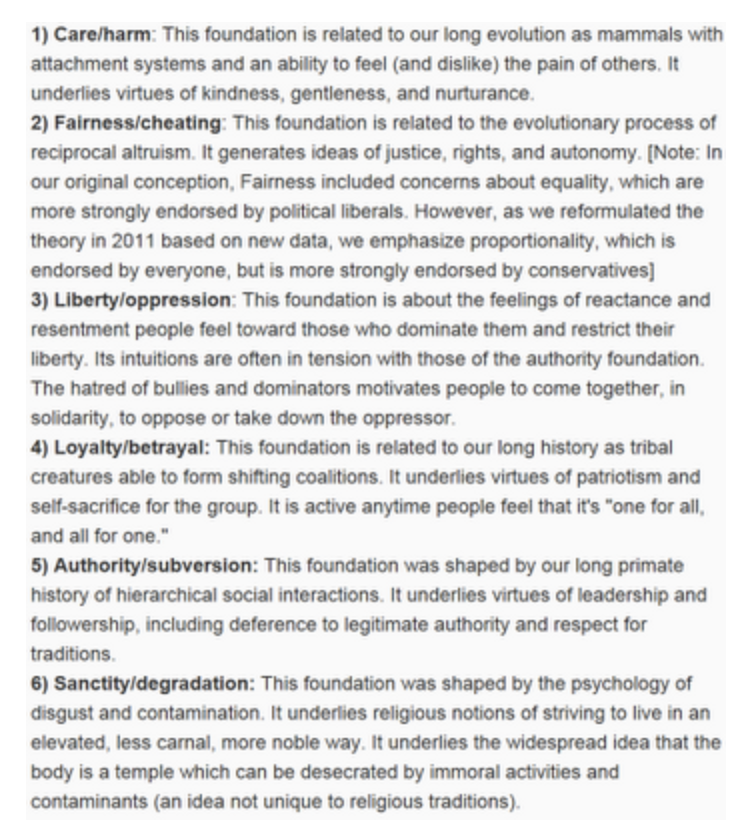

The story is thoroughly engrossing, so engrossing that you want it to build up into a life-changing insight that resolves the crisis. That’s where the six moral dimensions come in (though he begins with just five and only adds the last one much later), which he compares to the different dimensions of taste that make up our flavor palate. The two that everyone shares but that liberals give priority to whenever any two or more suggest different responses are Care and Harm (hurting people is wrong and we should help those in need) and Fairness. The other three from the original set are Loyalty, Authority, and Sanctity: loyalty to the tribe, respect for the hierarchy, and recognition of the sacredness of the tribe’s symbols, like the flag. Libertarians are closer to liberals; they just rely less on the Care dimension and much more on the recently added sixth one, Liberty from Oppression, which Haidt believes evolved in the context of ancestral egalitarianism similar to that found among modern nomadic foragers. Haidt suggests that restricting yourself to one or two dimensions is like swearing off every flavor but sweet and salty, saying,

many authors reduce morality to a single principle, usually some variant of welfare maximization (basically, help people, don’t hurt them). Or sometimes it’s justice or related notions of fairness, rights, or respect for individuals and their autonomy. There’s The Utilitarian Grill, serving only sweeteners (welfare), and The Deontological Diner, serving only salts (rights). Those are your options. (113)

Haidt doesn’t make the connection between tribalism and the conservative moral trifecta explicit. And he insists he’s not relying on what’s called the Naturalistic Fallacy—reasoning that what’s natural must be right. Rather, he’s being, he claims, strictly descriptive and scientific.

Moral judgment is a kind of perception, and moral science should begin with a careful study of the moral taste receptors. You can’t possibly deduce the list of five taste receptors by pure reasoning, nor should you search for it in scripture. There’s nothing transcendental about them. You’ve got to examine tongues. (115)

But if he really were restricting himself to description he would have no beef with the utilitarian ethicists like Mill, the deontological ones like Kant, or for that matter with the New Atheists, all of whom are operating in the realm of how we should behave and what we should believe as opposed to how we’re naturally, intuitively primed to behave and believe. At one point, he goes so far as to present a case for Kant and Jeremy Bentham, father of utilitarianism, being autistic (the trendy psychological diagnosis du jour) (120). But, like a lawyer who throws out a damning but inadmissible comment only to say “withdrawn” when the defense objects, he assures us that he doesn’t mean the autism thing as an ad hominem.

From The Moral Foundations Website

I think most of my fellow liberals are going to think Haidt’s metaphor needs some adjusting. Humans evolved a craving for sweets because in our ancestral environment fruits were a rare but nutrient-rich delicacy. Likewise, our taste for salt used to be adaptive. But in the modern world our appetites for sugar and salt have created a health crisis. These taste receptors are also easy for industrial food manufacturers to exploit in a way that enriches them and harms us. As Haidt goes on to explain in the third section, our tribal intuitions were what allowed us to flourish as a species. But what he doesn’t realize or won’t openly admit is that in the modern world tribalism is dangerous and far too easily exploited by demagogues and PR experts.

In his story about his time in India, he makes it seem like a whole new world of experiences was opened to him. But this is absurd (and insulting). Liberals experience the sacred too; they just don’t attempt to legislate it. Liberals recognize intuitions pushing them toward dominance and submission. They have feelings of animosity toward outgroups and intense loyalty toward members of their ingroup. Sometimes, they even indulge these intuitions and impulses. The distinction is not that liberals don’t experience such feelings; they simply believe they should question whether acting on them is appropriate in the given context. Loyalty in a friendship or a marriage is moral and essential; loyalty in business, in the form of cronyism, is profoundly immoral. Liberals believe they shouldn’t apply their personal feelings about loyalty or sacredness to their judgments of others because it’s wrong to try to legislate your personal intuitions, or even the intuitions you share with a group whose beliefs may not be shared in other sectors of society. In fact, the need to consider diverse beliefs, the very pluralism that Haidt extols, is precisely the impetus behind the efforts ethicists make to pare down the list of moral considerations.

Moral intuitions, like food cravings, can be seen as temptations requiring discipline to resist. It’s probably no coincidence that the obesity epidemic tracks the moral divide Haidt found when he left the Penn campus. As I read Haidt’s account of Drew Westen’s fMRI experiments with political partisans, I got a bit anxious because I worried a scan might reveal me to be something other than what I consider myself. The machine in this case is a bit like the Sorting Hat at Hogwarts, and I hoped, like Harry Potter, not to be placed in Slytherin. But this hope, even if it stems from my wish to identify with the group of liberals I admire and feel loyalty toward, cannot be as meaningless as Haidt’s “intuitionism” posits.

Ultimately, the findings Haidt brings together under the rubric of Moral Foundations Theory don’t lend themselves in any way to his larger program of bringing about greater understanding and greater civility. He fails to understand that liberals appreciate all the moral dimensions but don’t think they should all be seen as guides to political policies. And while he may want there to be less tribalism in politics he has to realize that most conservatives believe tribalism is politics—and should be.

Resistance to the Hive Switch is Futile